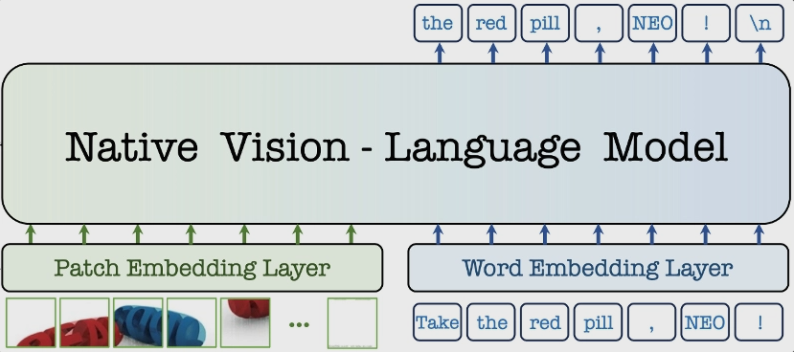

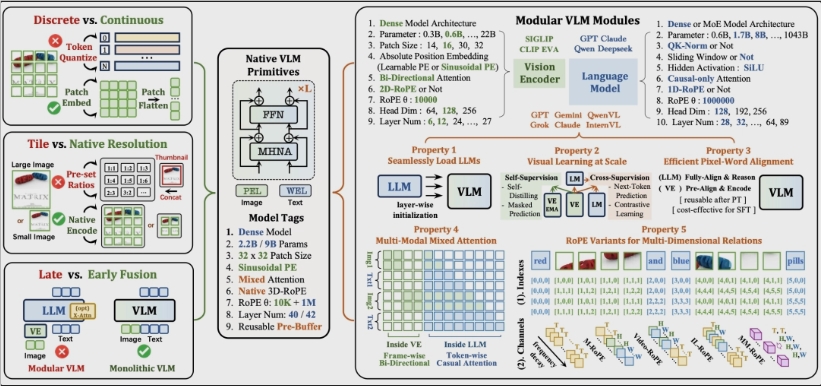

SenseTime, in collaboration with S-Lab at Nanyang Technological University, has released NEO, which it describes as the industry's first native multimodal architecture, and has simultaneously open-sourced two models with 2B and 9B parameters. The new architecture abandons the conventional three-stage "visual encoder + projector + language model" pipeline and redesigns everything from the attention mechanism and position encoding to semantic mapping. According to SenseTime, NEO needs only one-tenth of the training data the industry typically requires to reach the same performance, and it is the first to achieve a continuous mapping from pixels to tokens.

According to SenseTime's technical director, NEO reads pixels directly through a native patch embedding layer, eliminating the need for a separate image tokenizer. Its 3D rotary position encoding (Native-RoPE) represents the spatiotemporal frequencies of both text and visual inputs in the same vector space. Its multi-head attention uses a hybrid scheme, bidirectional over visual tokens and autoregressive over text tokens, which the company says improves spatial-structure correlation scores by 24%. In SenseTime's reported tests, NEO achieved state-of-the-art (SOTA) results on ImageNet, COCO, and Kinetics-400 across the 0.6B to 8B parameter range, with inference latency on edge devices below 80 milliseconds.
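The token layout described above can be illustrated with a minimal Python sketch. It is not taken from NEO's released code and rests on stated assumptions: text tokens advance a temporal index t with spatial indices h = w = 0; all patches of one image share a single t and use their row and column as h and w; and attention is causal overall but fully bidirectional among patches of the same image. The segment format and the function names `build_layout` and `hybrid_attention_mask` are hypothetical.

```python
from typing import List, Optional, Tuple

Pos3D = Tuple[int, int, int]  # (t, h, w) index that a 3D rotary encoding would consume


def build_layout(segments: List[tuple]) -> Tuple[List[Pos3D], List[Optional[int]]]:
    """Assign (t, h, w) positions and image ids to a mixed text/image sequence.

    `segments` uses a hypothetical format: ("text", n_tokens) or
    ("image", rows, cols), where an image is a rows x cols grid of patches.
    """
    positions: List[Pos3D] = []
    segment_ids: List[Optional[int]] = []  # None for text, image index for patches
    t = 0
    image_id = 0
    for seg in segments:
        if seg[0] == "text":
            for _ in range(seg[1]):
                positions.append((t, 0, 0))  # text: only the temporal index moves
                segment_ids.append(None)
                t += 1
        else:
            _, rows, cols = seg
            for h in range(rows):
                for w in range(cols):
                    positions.append((t, h, w))  # patches share t, differ in (h, w)
                    segment_ids.append(image_id)
            image_id += 1
            t += 1  # assumption: the whole image occupies a single temporal step
    return positions, segment_ids


def hybrid_attention_mask(segment_ids: List[Optional[int]]) -> List[List[bool]]:
    """mask[i][j] is True when query token i may attend to key token j."""
    n = len(segment_ids)
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            causal = j <= i  # autoregressive: earlier or same position only
            same_image = segment_ids[i] is not None and segment_ids[i] == segment_ids[j]
            row.append(causal or same_image)  # bidirectional inside one image
        mask.append(row)
    return mask


# Two text tokens, a 2x2-patch image, then one more text token.
positions, segment_ids = build_layout([("text", 2), ("image", 2, 2), ("text", 1)])
print(positions)
# [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 0, 1), (2, 1, 0), (2, 1, 1), (3, 0, 0)]
for row in hybrid_attention_mask(segment_ids):
    print("".join("1" if allowed else "." for allowed in row))
# 1......
# 11.....
# 111111.
# 111111.
# 111111.
# 111111.
# 1111111
```

Each printed row is a query token and each column a key token. The four image patches (the middle four rows) can see one another in both directions, while every text token sees only itself and earlier positions.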

The model weights and training scripts have been published on GitHub, and SenseTime plans to open-source versions for 3D perception and video understanding in the first quarter of next year. Industry observers believe NEO's "deep fusion" approach could end the "modular" era of multimodal systems and set a new performance baseline for small on-device models.