While the global spotlight on AI still lingers on massive models with trillions of parameters, a revolution in computing efficiency has been quietly unfolding deep in the underlying code. Today, the creators of vLLM, the world's leading open-source inference engine, officially announced their ambition: to found an AI infrastructure company called Inferact and bring a new order to the untamed territory of AI inference. This is not only a technical leap but also a bold statement about how artificial intelligence can truly move into practical use.

Investors have shown almost feverish enthusiasm for this high-pedigree newcomer. According to reports, Inferact raised a staggering $150 million in seed funding at a valuation of about $800 million. The list of backers reads like a who's who of top-tier global investors: the round was led by venture capital giants Andreessen Horowitz and Spark Capital, with renowned institutions such as Sequoia Capital, Altimeter Capital, Rho Capital, and ZhenFund joining in, together giving this future inference engine a powerful boost.

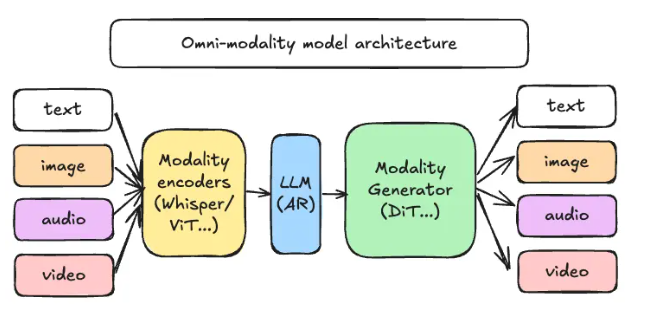

As a favorite of the open-source community, vLLM has already left a lasting mark on developers. It supports more than 500 model architectures and runs on more than 200 types of hardware accelerators, handling inference workloads at global scale. Inferact's goals, however, are even more ambitious and specific: to make vLLM the world's leading inference engine, break free from the burden of expensive inference, and use raw speed to bring AI's capabilities to every computing node.

Over the long arc of AI development, if model training is a lengthy, behind-closed-doors effort, then inference is the front line where models meet real-world demand. As large models are deployed at scale, the compute consumed during inference has snowballed, becoming a make-or-break factor for commercial success. Inferact's emergence signals that the industry's focus is shifting from expensive training grounds to efficient deployment. It not only confirms the immense commercial potential of open-source technology but also suggests that competition in AI infrastructure has entered its second half, where the goal is maximum efficiency.