On Friday morning, Google officially released and open-sourced Gemma3n, its new on-device multimodal large model. The release brings powerful multimodal capabilities, previously available only in the cloud, to edge devices such as smartphones, tablets, and laptops.

Core Features: Small Size, Big Capability

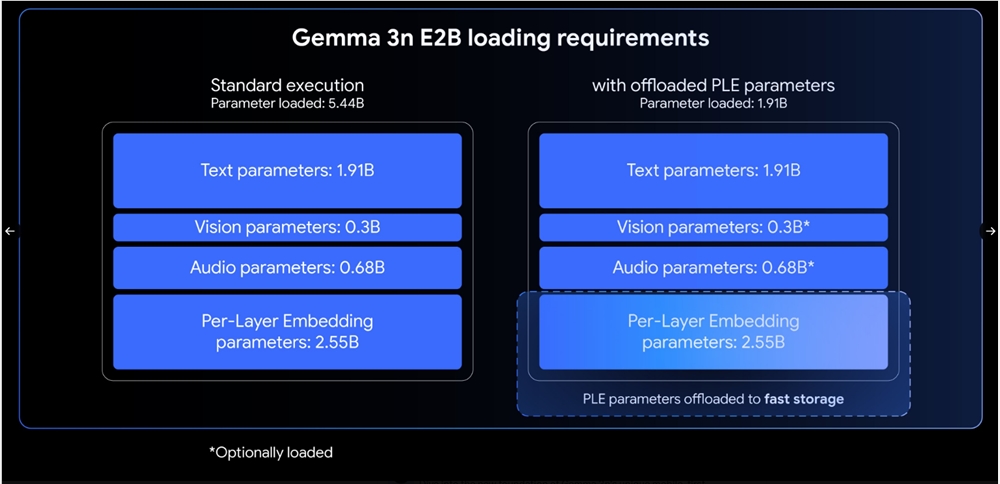

Gemma3n comes in two versions, E2B and E4B. Although their raw parameter counts are 5B and 8B respectively, architectural innovations give them a memory footprint comparable to traditional 2B and 4B models, so they can run with as little as 2GB and 3GB of memory. The model natively handles image, audio, video, and text input, supporting 140 languages for text and multimodal understanding in 35 languages.

Notably, the E4B version scored over 1300 on LMArena, making it the first model under 10 billion parameters to reach that score. It also shows significant improvements in multilingual support, math, coding, and reasoning.

Technological Innovation: Four Breakthrough Architectures

MatFormer Architecture: Gemma3n adopts the new Matryoshka Transformer (MatFormer) architecture, in which a single model contains smaller nested models, like Russian nesting dolls. While the E4B model is trained, the E2B sub-model is optimized at the same time, giving developers a flexible choice of performance levels. With the Mix-n-Match technique, users can create custom-sized models between E2B and E4B.
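
The nesting idea can be illustrated with a toy feed-forward layer in which the smaller model is simply a slice of the larger one's weights. This is a minimal sketch of the general Matryoshka idea, not Gemma3n's actual implementation; the function names, dimensions, and ReLU activation are all assumptions chosen for illustration.

```python
import random


def make_ffn(d_model, d_hidden, seed=0):
    """Create random weights for a toy feed-forward layer (illustrative only)."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(d_hidden)]
    w_out = [[rng.uniform(-1, 1) for _ in range(d_hidden)] for _ in range(d_model)]
    return w_in, w_out


def ffn_forward(x, w_in, w_out, width):
    """Run the layer using only the first `width` hidden units."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(w_in[j], x))) for j in range(width)]
    return [sum(w_out[i][j] * hidden[j] for j in range(width)) for i in range(len(w_out))]


def extract_submodel(w_in, w_out, width):
    """Slice out a standalone smaller layer from the larger layer's weights."""
    return w_in[:width], [row[:width] for row in w_out]


# Hypothetical sizes: the "large" layer has 8 hidden units, the nested one has 4.
w_in, w_out = make_ffn(d_model=3, d_hidden=8)
x = [0.5, -1.0, 2.0]

full_output = ffn_forward(x, w_in, w_out, width=8)
nested_output = ffn_forward(x, w_in, w_out, width=4)

# The extracted layer behaves exactly like the nested slice of the large one.
small_w_in, small_w_out = extract_submodel(w_in, w_out, width=4)
extracted_output = ffn_forward(x, small_w_in, small_w_out, width=4)
```

In this toy setting, picking a different `width` for each layer would be the analogue of building an intermediate-sized model between the two nested sizes.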

Per-Layer Embedding (PLE) Technology: This innovation allows a large share of the parameters (the embeddings associated with each layer) to be loaded and computed on the CPU, so that only the core Transformer weights need to sit in accelerator memory. This significantly improves memory efficiency without affecting model quality.
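
As a rough back-of-the-envelope illustration, the raw and effective parameter counts quoted in this article imply how much of each model stays off the accelerator. The sketch below treats the gap between the two counts as the off-accelerator portion, which is a simplifying assumption; the `Variant` class and its methods are hypothetical names, not part of any Gemma tooling.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    raw_params_b: float        # raw parameter count in billions (from the article)
    effective_params_b: float  # effective count in billions (from the article)

    def offloaded_params_b(self):
        """Parameters assumed to live outside accelerator memory, in billions."""
        return self.raw_params_b - self.effective_params_b

    def offloaded_share(self):
        """Fraction of raw parameters assumed to be kept off the accelerator."""
        return self.offloaded_params_b() / self.raw_params_b


variants = [Variant("E2B", 5.0, 2.0), Variant("E4B", 8.0, 4.0)]
for v in variants:
    print(f"{v.name}: {v.offloaded_params_b():.1f}B off-accelerator "
          f"({v.offloaded_share():.0%} of raw parameters)")
```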

KV Cache Sharing: Designed for long-context inputs, key-value (KV) cache sharing doubles prefill performance compared with Gemma 3 4B, significantly reducing the time to first token for long sequences.
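
To give a feel for why sharing helps during prefill, the toy sketch below lets the upper layers reuse the cache of one lower layer instead of computing their own. The layer counts, the choice of which layer donates its cache, and both function names are assumptions for illustration; the numbers are not meant to reproduce the reported speedup or Gemma3n's real layer layout.

```python
def plan_kv_sources(num_layers, first_sharing_layer):
    """Map each layer to the layer whose key-value cache it reads.

    Layers below `first_sharing_layer` compute their own cache; layers at or
    above it reuse the cache of the last layer that computed one.
    """
    if not 1 <= first_sharing_layer <= num_layers:
        raise ValueError("first_sharing_layer must be between 1 and num_layers")
    donor = first_sharing_layer - 1
    return [layer if layer < first_sharing_layer else donor
            for layer in range(num_layers)]


def kv_projections_for_prefill(kv_sources, prompt_tokens):
    """Count the per-token KV projections needed to prefill a prompt."""
    layers_computing_kv = len(set(kv_sources))
    return layers_computing_kv * prompt_tokens


# Hypothetical configuration: 8 layers, the top 4 reuse the cache of layer 3.
sources = plan_kv_sources(num_layers=8, first_sharing_layer=4)
shared_cost = kv_projections_for_prefill(sources, prompt_tokens=1000)
unshared_cost = kv_projections_for_prefill(list(range(8)), prompt_tokens=1000)
```

Fewer layers computing their own keys and values means less work before the first token can be generated, and the saving grows with prompt length.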

Advanced Encoders: For audio, Gemma3n uses an encoder based on the Universal Speech Model (USM) that supports automatic speech recognition and speech translation and can handle audio clips of up to 30 seconds. For vision, it uses the MobileNet-V5-300M encoder, which supports multiple input resolutions and processes up to 60 frames per second on a Google Pixel.
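
Given the 30-second clip limit, longer recordings would need to be split before being passed to the model. A minimal sketch of one naive way to do that follows; the `split_into_clips` helper and the 16 kHz sample rate are assumptions for illustration, not part of any Gemma API, and a fixed-size split like this can cut through the middle of a word.

```python
def split_into_clips(samples, sample_rate_hz, max_clip_seconds=30):
    """Split a sequence of audio samples into clips no longer than the limit."""
    if sample_rate_hz <= 0 or max_clip_seconds <= 0:
        raise ValueError("sample_rate_hz and max_clip_seconds must be positive")
    clip_len = int(sample_rate_hz * max_clip_seconds)
    return [samples[i:i + clip_len] for i in range(0, len(samples), clip_len)]


# Hypothetical input: 70 seconds of silence at an assumed 16 kHz sample rate.
sample_rate_hz = 16_000
recording = [0.0] * (70 * sample_rate_hz)
clips = split_into_clips(recording, sample_rate_hz)
clip_seconds = [len(clip) / sample_rate_hz for clip in clips]
```

A production pipeline would more likely split on silence or overlap adjacent clips so that speech at the boundaries is not lost.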

Practical Features and Application Scenarios

Gemma3n performs particularly well at speech translation, especially between English and Spanish, French, Italian, and Portuguese. Through advanced distillation techniques, the MobileNet-V5 vision encoder runs 13 times faster than the baseline model, with 46% fewer parameters and a memory footprint four times smaller, while delivering higher accuracy.

Open Source Ecosystem and Development Prospects

Google has released the model and weights on Hugging Face, along with detailed documentation and development guides. Since the first Gemma model was released last year, the series has been downloaded more than 160 million times, reflecting a strong developer ecosystem.

The release of Gemma3n marks a new stage for edge AI: it brings cloud-level multimodal capabilities to user devices and opens up new possibilities for mobile applications and smart hardware.