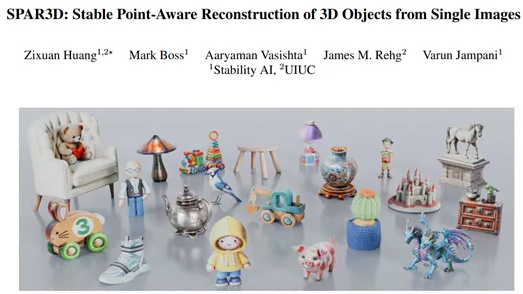

In computer vision, single-image 3D reconstruction is an active research area because it recovers the shape and structure of a 3D object from a single 2D image. Stability AI, a well-known developer of open-source models, recently released SPAR3D, a model that reports a reconstruction time of 0.7 seconds and has drawn considerable attention in the industry.

Single-image 3D reconstruction is challenging, and there are two main technical approaches: regression-based methods and generative modeling methods. Regression-based methods infer visible surfaces efficiently but often estimate surfaces and textures inaccurately in occluded regions. Generative methods handle these uncertain regions better, but they are computationally expensive and their outputs may not align well with the visible surfaces.

SPAR3D combines the strengths of both approaches to overcome their respective limitations, improving both the speed and the accuracy of reconstruction.

Architecture of SPAR3D: Efficient Point Sampling and Meshing

The architecture of SPAR3D consists of two main stages: the point sampling stage and the meshing stage.

Point Sampling Stage: The core of this stage is a point diffusion model, which generates a sparse point cloud containing XYZ coordinates and RGB colors from the input 2D image. It uses the DDPM (Denoising Diffusion Probabilistic Models) framework: the forward process gradually adds Gaussian noise to a point cloud, and a denoiser is trained to predict that noise so the reverse process can recover a clean point cloud from a noisy one. During inference, a DDIM (Denoising Diffusion Implicit Models) sampler generates the point cloud samples, and classifier-free guidance (CFG) is used to improve sampling fidelity.
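The three ingredients named above (forward noising, classifier-free guidance, and a DDIM update) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not SPAR3D's actual code: the linear noise schedule, the 1000-step count, the 512-point cloud size, and all function names are hypothetical, and the learned denoiser is left out entirely.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Assumed linear beta schedule; returns the cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def cfg_noise(eps_cond, eps_uncond, guidance_scale):
    """Classifier-free guidance: push the unconditional prediction toward the conditional one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

def ddim_step(x_t, eps_hat, t, t_prev, alpha_bar):
    """Deterministic DDIM update (eta = 0) from step t to t_prev; t_prev = -1 means 'fully clean'."""
    a_t = alpha_bar[t]
    a_prev = alpha_bar[t_prev] if t_prev >= 0 else 1.0
    # Estimate the clean sample implied by the current noise prediction.
    x0_hat = (x_t - np.sqrt(1.0 - a_t) * eps_hat) / np.sqrt(a_t)
    # Re-noise that estimate to the (lower) noise level of t_prev.
    return np.sqrt(a_prev) * x0_hat + np.sqrt(1.0 - a_prev) * eps_hat

# Usage: a hypothetical cloud of 512 points with 6 channels (XYZ + RGB).
rng = np.random.default_rng(0)
alpha_bar = make_schedule()
points = rng.standard_normal((512, 6))
noisy, true_eps = add_noise(points, 500, alpha_bar, rng)
# With a perfect noise estimate, one DDIM jump to t_prev = -1 recovers the input.
recovered = ddim_step(noisy, true_eps, 500, -1, alpha_bar)
```

In a real sampler, `eps_hat` would come from the trained denoiser evaluated twice per step (with and without the image condition) and merged with `cfg_noise`; here the true noise stands in for it so the sketch stays self-contained.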

Meshing Stage: The goal of this stage is to generate textured 3D meshes from the input image and point cloud. SPAR3D employs a large-scale triplane Transformer that can predict triplane features from the image and point cloud, thereby estimating the object's geometry, texture, and lighting. During training, a differentiable renderer is used with rendering loss to supervise the model, ensuring the realism and quality of the generated results.
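To make the triplane idea concrete, the sketch below shows how a 3D query point can read features from three axis-aligned feature planes. It is a generic illustration, not SPAR3D's implementation: the plane names, the [-1, 1] coordinate range, bilinear interpolation, and combining the three features by concatenation are all assumptions chosen for the example.

```python
import numpy as np

def bilinear_sample(plane, u, v):
    """Sample a (C, H, W) feature plane at continuous coordinates u, v in [-1, 1]."""
    C, H, W = plane.shape
    u = np.clip(u, -1.0, 1.0)
    v = np.clip(v, -1.0, 1.0)
    # Map [-1, 1] to pixel coordinates [0, W-1] and [0, H-1].
    x = (u + 1.0) * 0.5 * (W - 1)
    y = (v + 1.0) * 0.5 * (H - 1)
    x0 = np.clip(np.floor(x).astype(int), 0, W - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, H - 2)
    wx = x - x0
    wy = y - y0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = plane[:, y0, x0] * (1 - wx) + plane[:, y0, x0 + 1] * wx
    bottom = plane[:, y0 + 1, x0] * (1 - wx) + plane[:, y0 + 1, x0 + 1] * wx
    return (top * (1 - wy) + bottom * wy).T  # shape (N, C)

def query_triplane(planes, points):
    """planes: dict with 'xy', 'xz', 'yz' arrays of shape (C, H, W); points: (N, 3) in [-1, 1]."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    f_xy = bilinear_sample(planes["xy"], x, y)
    f_xz = bilinear_sample(planes["xz"], x, z)
    f_yz = bilinear_sample(planes["yz"], y, z)
    # Assumed aggregation: concatenate the per-plane features into one vector per point.
    return np.concatenate([f_xy, f_xz, f_yz], axis=1)

# Usage with random hypothetical planes (8 channels, 32 x 32 resolution).
rng = np.random.default_rng(0)
planes = {name: rng.standard_normal((8, 32, 32)) for name in ("xy", "xz", "yz")}
features = query_triplane(planes, rng.uniform(-1, 1, size=(100, 3)))  # (100, 24)
```

In a full pipeline, small decoder networks would map these per-point features to quantities such as density or color; that part is omitted here.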

Significant Performance: Exceeding Traditional Methods

In tests on the GSO and OmniObject3D datasets, SPAR3D outperformed regression-based and generative baseline methods across multiple evaluation metrics. On the GSO dataset, SPAR3D achieved a CD (Chamfer Distance, lower is better) of 0.120, an FS@0.1 (F-score at a threshold of 0.1, higher is better) of 0.584, and a PSNR (Peak Signal-to-Noise Ratio, higher is better) of 18.6, ahead of the compared methods. On the OmniObject3D dataset, SPAR3D also performed well, with a CD of 0.122, an FS@0.1 of 0.587, and a PSNR of 17.9.

These results indicate that SPAR3D performs strongly in both geometric accuracy and texture quality, and they point to its potential for practical applications.
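For readers unfamiliar with these metrics, the following NumPy sketch shows one common way to compute them. The article does not state the exact conventions used in the evaluation (for example, squared versus unsquared distances, or how shapes are normalized), so the definitions and function names below are assumptions for illustration and will not necessarily reproduce the reported numbers.

```python
import numpy as np

def pairwise_dist(a, b):
    """Euclidean distances between every point in a (N, 3) and every point in b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def chamfer_distance(pred, gt):
    """Symmetric Chamfer Distance: mean nearest-neighbour distance in both directions."""
    d = pairwise_dist(pred, gt)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def f_score(pred, gt, threshold=0.1):
    """F-score at a distance threshold: harmonic mean of precision and recall."""
    d = pairwise_dist(pred, gt)
    precision = (d.min(axis=1) < threshold).mean()  # predicted points near the ground truth
    recall = (d.min(axis=0) < threshold).mean()     # ground-truth points near the prediction
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def psnr(img_a, img_b, max_val=1.0):
    """Peak Signal-to-Noise Ratio in dB for images with values in [0, max_val]."""
    mse = np.mean((img_a - img_b) ** 2)
    if mse == 0:
        return float("inf")
    return 10 * np.log10(max_val ** 2 / mse)

# Usage on hypothetical data: a point cloud compared with a slightly perturbed copy.
rng = np.random.default_rng(0)
gt_points = rng.uniform(-0.5, 0.5, size=(1000, 3))
pred_points = gt_points + rng.normal(scale=0.01, size=gt_points.shape)
cd = chamfer_distance(pred_points, gt_points)
fs = f_score(pred_points, gt_points, threshold=0.1)
```

A lower Chamfer Distance means the predicted surface lies closer to the reference, while higher F-score and PSNR values indicate better geometric coverage and closer rendered images, respectively.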

Conclusion: The Future of Open Source Technology

As the technology advances and application scenarios expand, SPAR3D is well positioned to play an important role in computer vision and 3D reconstruction. For developers and researchers, its open-source release creates more opportunities for innovation and application.

Open-source repository: https://github.com/Stability-AI/stable-point-aware-3d

Hugging Face: https://huggingface.co/stabilityai/stable-point-aware-3d