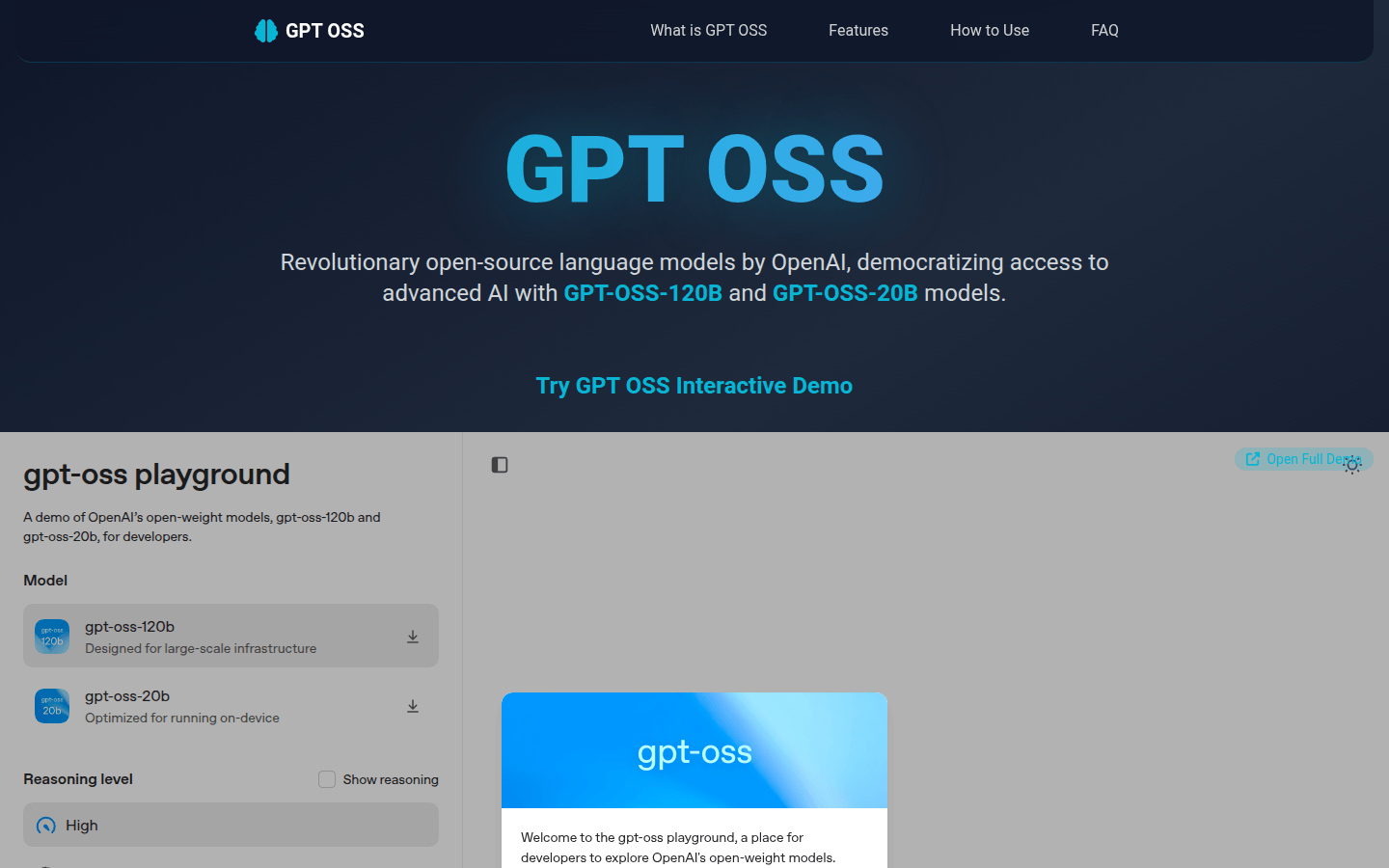

This is a quantized version of the kldzj/gpt-oss-120b-heretic model, produced with llama.cpp. It is offered in several GGUF precision and quantization types, including BF16, Q8_0, and MXFP4_MOE. The lower-bit types shrink the model's memory footprint and speed up inference, at some cost in accuracy.
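To give a rough sense of what these types mean for file size, the sketch below estimates weight storage from bits per weight. It assumes a nominal 120 billion parameters (rounded from the model name, not an exact count) and that every tensor uses the same type. The per-weight costs follow llama.cpp's block layouts: Q8_0 stores 32 int8 values plus one fp16 scale, and MXFP4 stores 32 4-bit values plus one 8-bit shared exponent. In MXFP4_MOE, MXFP4 is applied to the mixture-of-experts weights while other tensors stay at higher precision, so real files are somewhat larger than the uniform MXFP4 figure. The names `BITS_PER_WEIGHT` and `estimate_size_gb` are hypothetical, chosen for illustration.

```python
# Bits per weight for each type, derived from llama.cpp block layouts.
# BF16: plain 16-bit floats, no block structure.
# Q8_0: 32 int8 weights + one fp16 scale per block -> (32*8 + 16) / 32 = 8.5 bits.
# MXFP4: 32 4-bit weights + one 8-bit shared exponent -> (32*4 + 8) / 32 = 4.25 bits.
BITS_PER_WEIGHT = {
    "BF16": 16.0,
    "Q8_0": (32 * 8 + 16) / 32,
    "MXFP4": (32 * 4 + 8) / 32,
}


def estimate_size_gb(n_params: float, quant_type: str) -> float:
    """Rough weight-only size in GB (1e9 bytes).

    Assumes every tensor uses quant_type; ignores metadata, KV cache,
    and mixed-precision schemes such as MXFP4_MOE.
    """
    bits = BITS_PER_WEIGHT[quant_type]
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    # Assumption: ~120e9 parameters, taken from the model name only.
    n_params = 120e9
    for qt in BITS_PER_WEIGHT:
        print(f"{qt:>6}: ~{estimate_size_gb(n_params, qt):.1f} GB")
```

Halving the bits per weight roughly halves the storage, which is why the 4-bit format fits in about a quarter of the BF16 footprint under these assumptions.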

Natural Language Processing, GGUF