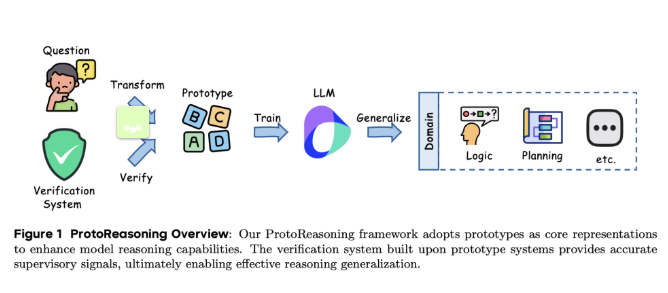

Recently, research teams at ByteDance and Shanghai Jiao Tong University jointly introduced ProtoReasoning, a framework that aims to strengthen the reasoning capabilities of large language models (LLMs) through logical prototypes. The framework uses structured prototype representations, specifically Prolog and PDDL, to improve cross-domain reasoning.

In recent years, large language models have made significant progress in cross-domain reasoning, especially with the adoption of long chain-of-thought reasoning techniques. Research has found that these models perform well not only on tasks such as mathematics and programming but also in seemingly unrelated areas such as logic puzzles and creative writing. The reasons behind this flexibility are not yet fully understood. One possible explanation is that the models have learned core reasoning patterns, that is, abstract reasoning prototypes shared across domains, which help them handle many different types of problems.

The ProtoReasoning framework strengthens a model's reasoning by training it on structured prototype representations. It has two main modules: a prototype builder and a validation system. The prototype builder converts natural language questions into formal representations, while the validation system checks whether solutions are correct. For Prolog, the researchers designed a four-step pipeline that generates diverse logic problems and validates them with SWI-Prolog. For planning, the team used PDDL to build plan generation, plan completion, and plan reordering tasks, and verified their correctness with the VAL validator.
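To make the idea of plan verification more concrete, here is a minimal Python sketch of a STRIPS-style plan checker. It is only a toy stand-in for the kind of check a validator such as VAL performs; it is not VAL itself and not the pipeline used in the paper. The `Action` class, the `validate_plan` function, and the block-stacking facts are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A ground STRIPS-style action (hypothetical, for illustration only)."""
    name: str
    preconditions: frozenset   # facts that must hold before the action
    add_effects: frozenset     # facts that become true afterwards
    delete_effects: frozenset  # facts that become false afterwards


def validate_plan(initial_state, goal, plan):
    """Simulate the plan step by step.

    Returns (True, None) if every action is applicable in turn and the
    final state contains all goal facts, otherwise (False, reason).
    """
    state = set(initial_state)
    for step, action in enumerate(plan, start=1):
        missing = action.preconditions - state
        if missing:
            return False, (
                f"step {step} ({action.name}): "
                f"unmet preconditions {sorted(missing)}"
            )
        # Apply the action: remove deleted facts, then add new ones.
        state = (state - action.delete_effects) | action.add_effects
    unmet = set(goal) - state
    if unmet:
        return False, f"goal not reached: missing {sorted(unmet)}"
    return True, None


# Toy block-stacking problem: put block a on top of block b.
pick_up_a = Action(
    name="pick_up_a",
    preconditions=frozenset({"clear(a)", "on_table(a)", "hand_empty"}),
    add_effects=frozenset({"holding(a)"}),
    delete_effects=frozenset({"clear(a)", "on_table(a)", "hand_empty"}),
)
stack_a_on_b = Action(
    name="stack_a_on_b",
    preconditions=frozenset({"holding(a)", "clear(b)"}),
    add_effects=frozenset({"on(a,b)", "clear(a)", "hand_empty"}),
    delete_effects=frozenset({"holding(a)", "clear(b)"}),
)

initial_state = {"on_table(a)", "on_table(b)", "clear(a)", "clear(b)", "hand_empty"}
goal = {"on(a,b)"}

if __name__ == "__main__":
    print(validate_plan(initial_state, goal, [pick_up_a, stack_a_on_b]))
    print(validate_plan(initial_state, goal, [stack_a_on_b, pick_up_a]))
    print(validate_plan(initial_state, goal, [pick_up_a]))
```

In this sketch the correctly ordered plan is accepted, the reordered plan is rejected at its first step because `holding(a)` does not yet hold, and the incomplete plan is rejected because the goal fact `on(a,b)` is never produced. These three cases loosely mirror the generation, reordering, and completion tasks described above: an automatic check of this kind lets a model's answer be marked correct or incorrect without human grading.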

To evaluate the ProtoReasoning framework, the researchers used a Mixture-of-Experts (MoE) model with 150 billion total parameters, of which 15 billion are active, and trained it on carefully selected, high-quality Prolog and PDDL samples. The results showed significant improvements in logical reasoning, planning, and several general benchmarks. In particular, a comparison experiment found that training on Prolog representations performed nearly as well on logical reasoning as training on a natural language version, which further supports the effectiveness of structured prototype training.

The ProtoReasoning results suggest that abstract reasoning prototypes play an important role in cross-domain knowledge transfer in large language models. Although the experimental results are encouraging, the specific properties of reasoning prototypes still need further theoretical study. Future research will focus on formalizing these concepts mathematically and validating them with open-source models and datasets.

Paper: https://arxiv.org/abs/2506.15211

Key points:

🌟 The ProtoReasoning framework enhances the logical reasoning capabilities of large language models using Prolog and PDDL.

🧠 Through structured prototype representations, the model shows significant improvements in logical reasoning, planning, and general problem-solving tasks.

🔍 Future research will explore the theoretical foundations of reasoning prototypes and validate the findings using open-source models and datasets.