French artificial intelligence startup Mistral AI announced on Wednesday its full entry into artificial intelligence infrastructure, positioning itself as Europe's answer to the American cloud computing giants. At the same time, the company launched a new reasoning model that can rival OpenAI's most advanced systems.

The Paris-based company released Mistral Compute, an integrated AI infrastructure platform built in collaboration with NVIDIA. It aims to give European businesses and governments an alternative that reduces their reliance on American cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. The move marks a significant strategic shift for Mistral AI, from solely developing AI models to controlling the entire technology stack.

Arthur Mensch, CEO and co-founder of Mistral AI, said: "Entering the field of AI infrastructure marks a transformative step for Mistral AI, as it allows us to address a key vertical of the AI value chain. This shift brings a responsibility to ensure that our solutions not only drive innovation and AI adoption but also uphold Europe's technological sovereignty and contribute to its leadership in sustainability."

How Mistral Built Its Reasoning Model That Can Think in Any Language

Alongside the infrastructure announcement, Mistral released its Magistral series of reasoning models, AI systems capable of step-by-step logical reasoning, similar to OpenAI's o1 and China's DeepSeek R1. However, Guillaume Lample, Mistral's chief scientist, said the company's approach differs from its competitors' in key ways.

In an exclusive interview, Lample said: "We started everything from scratch, mainly because we wanted to build up our own expertise and keep flexibility in what we do. We actually managed to be very efficient with a stronger online reinforcement learning pipeline." Unlike competitors, who often hide their reasoning process, Mistral's models show users the complete chain of thought and, crucially, write it in the user's own language rather than defaulting to English. Lample explained: "We show the complete chain of thought in the user's own language so that they can actually read it and see whether it makes sense."

The company released two versions: Magistral Small, an open-source model with 24 billion parameters, and Magistral Medium, a more powerful proprietary system available through the Mistral API.

Unexpected "Superpowers" Emerge During Training

The models showed surprising capabilities during training. Most notably, although training focused solely on text-based math and coding problems, Magistral Medium retained its multimodal reasoning: it could still analyze images.

Lample said: "We discovered this partly by accident, and it is something we absolutely didn't expect. If you plug the initial vision encoder back in at the end of reinforcement learning training, you suddenly find that the model can reason about images."

The models also gained sophisticated function-calling capabilities, automatically running multi-step web searches and code execution to answer complex queries. Lample explained that the model searches the web much as a person would: it processes the results and, if needed, searches again. This behavior emerged without any dedicated training, which left the team "very surprised."
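Lample's description (search, read the results, search again, run code) matches the general shape of a tool-calling agent loop. The sketch below illustrates that loop under loud assumptions: `scripted_model`, `web_search`, `run_code`, and `FAKE_INDEX` are hypothetical stand-ins invented here, the "model" follows a fixed script instead of being a real language model, and nothing in it reflects Mistral's actual API or implementation.

```python
"""Toy multi-step tool-calling loop (hypothetical; not Mistral's API)."""
import re
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    name: str      # which tool the model wants to use
    argument: str  # the tool's single string argument


@dataclass
class Answer:
    text: str      # final answer returned to the user


Step = Union[ToolCall, Answer]

# Hypothetical stub "web index": the first, vague query misses; a refined query hits.
FAKE_INDEX = {
    "mistral compute chips": "Mistral Compute will run on 18,000 Grace Blackwell chips.",
}


def web_search(query: str) -> str:
    """Stub search tool: exact-match lookup in a tiny in-memory index."""
    return FAKE_INDEX.get(query, "no results")


def run_code(expr: str) -> str:
    """Stub code-execution tool: evaluates plain integer arithmetic only."""
    if not re.fullmatch(r"[0-9+\-*/ ()]+", expr):
        raise ValueError("unsupported expression")
    return str(eval(expr))


TOOLS: dict[str, Callable[[str], str]] = {"web_search": web_search, "run_code": run_code}


def scripted_model(observations: list[str]) -> Step:
    """Hypothetical stand-in for the model: picks the next step from tool outputs so far."""
    if not observations:
        return ToolCall("web_search", "mistral gpus")            # first, vague search
    last = observations[-1]
    if last == "no results":
        return ToolCall("web_search", "mistral compute chips")   # search again, refined
    match = re.search(r"([\d,]+) Grace Blackwell", last)
    if match:
        count = match.group(1).replace(",", "")
        return ToolCall("run_code", f"{count} // 1000")          # process the hit with code
    return Answer(f"Roughly {last} thousand Grace Blackwell chips.")


def agent_loop(max_steps: int = 6) -> tuple[str, list[str]]:
    """Alternate model steps and tool calls until the model answers or steps run out."""
    observations: list[str] = []
    for _ in range(max_steps):
        step = scripted_model(observations)
        if isinstance(step, Answer):
            return step.text, observations
        observations.append(TOOLS[step.name](step.argument))
    return "no answer", observations
```

Calling `agent_loop()` retries a search that came back empty, then uses the code tool to process the result before answering. Keeping tools in a name-to-function table means the model only has to emit a tool name and an argument; the loop handles execution and feeds results back.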

Engineering Breakthrough: Training Speed Far Exceeds Competitors

Mistral's technical team overcame major engineering challenges, creating what Lample called a breakthrough in training infrastructure. The company built an online reinforcement learning system that lets AI models keep improving as they generate responses, instead of relying on an existing, fixed training dataset.

The key innovation was synchronizing model updates in real time across hundreds of graphics processing units (GPUs). Lample explained: "What we did was find a way to move the model just through GPUs." This lets the system update model weights between different GPU clusters in seconds rather than the usual hours.

Lample noted: "No open-source infrastructure does this as effectively. There are many open-source attempts to do something similar, but they are extremely slow. Here, we paid close attention to efficiency." As a result, the training process turned out to be faster and cheaper than traditional pre-training; Lample said it can be completed in less than a week.
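The article does not describe Mistral's pipeline in code, so the following is only a toy illustration of the general online reinforcement learning pattern described above, under stated assumptions: the "model" is a three-way softmax policy over hypothetical answers to a single prompt, the update is plain REINFORCE with a mean-reward baseline (chosen here for brevity, not Mistral's algorithm), and `load_weights` stands in for the GPU-to-GPU weight transfer Lample mentions. The point is the loop shape: generate with the current weights, score, update, then push the new weights to every generator before the next batch.

```python
"""Toy online RL loop with a trainer-to-generator weight sync step (illustrative only)."""
import math
import random

ANSWERS = ["41", "42", "43"]   # hypothetical candidate responses to one math prompt
CORRECT = "42"                 # verifiable reward: exact match with a reference answer


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class GeneratorWorker:
    """Stands in for an inference GPU: samples responses from its local copy of the weights."""

    def __init__(self, weights: list[float], seed: int):
        self.weights = list(weights)
        self.rng = random.Random(seed)

    def load_weights(self, weights: list[float]) -> None:
        # In a real system this would be a GPU-to-GPU transfer; here it is a list copy.
        self.weights = list(weights)

    def generate(self) -> int:
        probs = softmax(self.weights)
        return self.rng.choices(range(len(ANSWERS)), weights=probs, k=1)[0]


def reward(index: int) -> float:
    return 1.0 if ANSWERS[index] == CORRECT else 0.0


def train(steps: int = 200, lr: float = 0.5, num_workers: int = 4) -> list[float]:
    weights = [0.0] * len(ANSWERS)  # trainer's copy of the policy logits
    workers = [GeneratorWorker(weights, seed=i) for i in range(num_workers)]
    for _ in range(steps):
        # 1. Generate fresh samples with the current policy (no fixed dataset).
        samples = [w.generate() for w in workers]
        rewards = [reward(s) for s in samples]
        baseline = sum(rewards) / len(rewards)
        # 2. REINFORCE update: gradient of log-softmax w.r.t. logits is one_hot(a) - probs.
        probs = softmax(weights)
        grad = [0.0] * len(weights)
        for a, r in zip(samples, rewards):
            advantage = r - baseline
            for j in range(len(weights)):
                grad[j] += advantage * ((1.0 if j == a else 0.0) - probs[j])
        weights = [w + lr * g / len(samples) for w, g in zip(weights, grad)]
        # 3. Sync: push the updated weights to every generator before the next round.
        for worker in workers:
            worker.load_weights(weights)
    return softmax(weights)
```

Calling `train()` returns the final answer probabilities; the probability of `"42"` rises toward 1 because every batch is sampled from freshly synced weights. In a real deployment the analogous sync step moves far larger weight tensors between machines, which is why its speed matters so much to the overall loop.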

NVIDIA Pledges 18,000 Chips for European AI Independence

The Mistral Compute platform will run on 18,000 of NVIDIA's latest Grace Blackwell chips, initially deployed in a data center in Essonne, France, with plans to expand throughout Europe. NVIDIA CEO Jensen Huang stated that this partnership is critical for European technological independence.

In a joint statement in Paris, Huang said: "Every country should build its own artificial intelligence, within its own borders, to serve its people. With Mistral AI, we are developing models and AI factories that serve as sovereign platforms for businesses across Europe to scale intelligence across industries." Huang predicted that Europe's AI computing capacity will grow tenfold over the next two years, with more than 20 "AI factories" planned across the continent.

The partnership extends beyond infrastructure: NVIDIA is also working with other European AI companies and with search company Perplexity to develop reasoning models in various European languages, for which training data is typically limited.

Solving AI's Environmental and Sovereignty Issues

Mistral Compute addresses two major issues in AI development: environmental impact and data sovereignty. The platform ensures that European clients can keep their information within the EU and under European jurisdiction.

The company collaborated with France's National Agency for Ecological Transition and leading climate consultancy Carbone4 to assess and minimize the carbon footprint of its AI models throughout their lifecycle. Mistral plans to power its data centers with decarbonized energy and stated, "By choosing Europe as our factory location, we benefit from a large amount of decarbonized energy."

Speed Advantage Gives Mistral's Reasoning Models Practical Edge

Early tests indicate that Mistral's reasoning models not only perform exceptionally well but also address a common problem with existing systems: speed. Current reasoning models from OpenAI and others can take minutes to respond to complex queries, which limits their practical use.

Lample noted: "One thing people generally dislike about these reasoning models is that, although they are smart, they sometimes take a lot of time. Here, you actually see the output in just a few seconds, sometimes in less than five. That changes the experience." Speed is crucial for enterprise adoption, since waiting several minutes for an AI response creates workflow bottlenecks.

What Mistral's Infrastructure Push Means for Global AI Competition

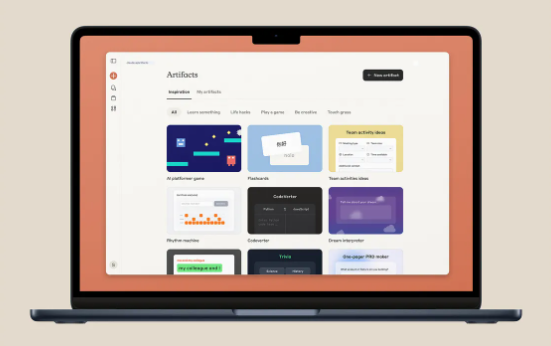

Mistral's entry into infrastructure puts it in direct competition with the tech giants that dominate the cloud computing market. The company offers a fully vertically integrated stack spanning hardware infrastructure, AI models, and software services. This includes Mistral AI Studio for developers, Le Chat for boosting workplace productivity, and Mistral Code for programming assistance.

Industry analysts view Mistral's strategy as part of a broader regional trend in AI development. Huang stated: "Europe urgently needs to expand its AI infrastructure to maintain global competitiveness." This aligns with concerns among European policymakers.

The announcement comes as European governments grow increasingly worried about their dependence on American tech companies for critical AI infrastructure. The EU has committed 20 billion euros to building AI "gigafactories" across the continent, and Mistral's partnership with NVIDIA could accelerate those plans.

Together, the infrastructure and model announcements signal that Mistral intends to become a comprehensive AI platform, not just a model provider. Backed by Microsoft and other investors, the company has raised more than $1 billion and will continue seeking additional funding to support its expanding business.

Lample sees even greater potential for reasoning models ahead. "When I look at our internal progress, I think the model's accuracy improves by about 5% each week on some benchmarks, and this has been going on for six weeks," he said. "So it is improving rapidly, and there are countless small ideas that can improve performance."

Whether Europe's effort to challenge American dominance in artificial intelligence succeeds will ultimately depend on whether customers value sovereignty and sustainability enough to consider switching suppliers. For now, they still have options.