In the field of artificial intelligence, reward models are a critical component for aligning large language models (LLMs) with human feedback, but existing reward models are prone to "reward hacking".

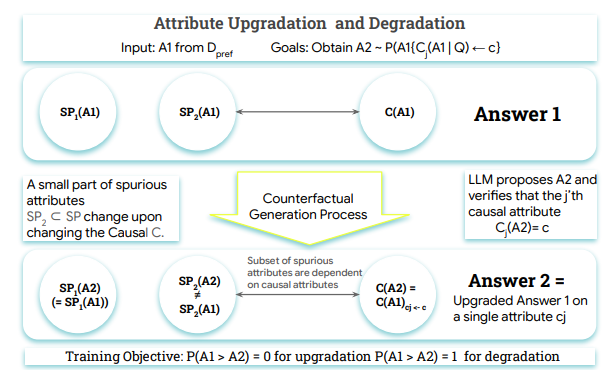

These models often latch onto superficial features, such as response length or formatting, instead of genuine quality attributes such as factual accuracy and relevance. The root cause is that standard training objectives cannot distinguish spurious correlations in the training data from the true causal drivers of quality. The result is brittle reward models (RMs) that, in turn, produce misaligned policies. Addressing this requires training RMs with an explicit causal understanding, so that they are sensitive to causal quality attributes and robust to a wide range of spurious cues.

Existing attempts to mitigate reward hacking in standard RLHF pipelines, which train reward models with Bradley-Terry or pairwise ranking objectives, include architectural modifications, policy-level adjustments, and data-centric methods built on ensembles or consistency checks. More recent causally inspired methods either apply MMD (maximum mean discrepancy) regularization against pre-specified spurious factors or estimate causal effects from corrected rewrites. However, these methods only address spurious factors specified in advance and miss unknown correlations. Meanwhile, existing augmentation strategies remain coarse, and evaluation-focused approaches do not give reward models a training mechanism that is robust to diverse spurious variations.
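
For reference, the standard Bradley-Terry objective mentioned above trains a scalar reward so that the preferred response scores higher than the rejected one; the loss is the negative log-sigmoid of the reward margin. The following is a minimal sketch in plain Python, assuming rewards have already been computed as floats; the function names are illustrative and not taken from the paper.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    # For negative z, use log(sigmoid(z)) = z - log(1 + exp(z)) to avoid overflow.
    return z - math.log1p(math.exp(z))


def bradley_terry_loss(r_chosen: float, r_rejected: float) -> float:
    """Negative log-likelihood that the chosen response beats the rejected one.

    Under the Bradley-Terry model, P(chosen > rejected) = sigmoid(r_chosen - r_rejected).
    """
    return -log_sigmoid(r_chosen - r_rejected)


# A correct margin gives a small loss; a reversed margin is penalized heavily.
print(round(bradley_terry_loss(2.0, 0.0), 4))  # 0.1269
print(round(bradley_terry_loss(0.0, 2.0), 4))  # 2.1269
```

The loss depends only on the margin, so nothing in it distinguishes a margin earned through factual accuracy from one earned through extra length or formatting; that gap is what causally motivated methods try to close.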

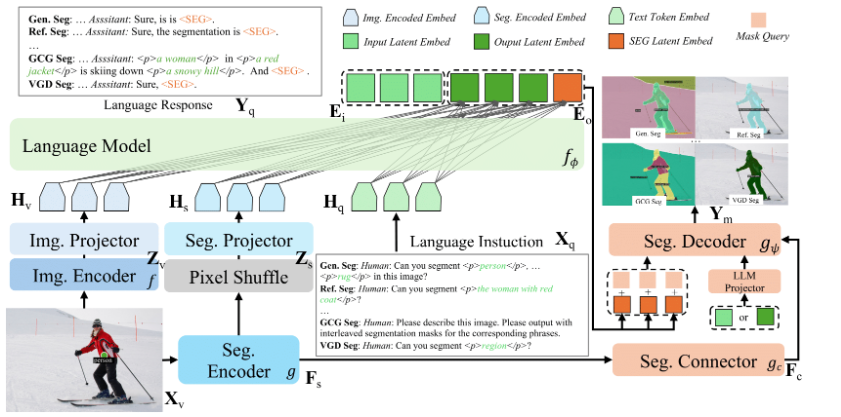

To address these challenges, researchers from Google DeepMind, McGill University, and Mila - Quebec AI Institute proposed Crome (Causal Robust Reward Modeling). Crome is built on an explicit causal model of answer generation. It trains RMs on preference datasets augmented with targeted, LLM-generated counterfactual examples, which teach the model to separate genuine quality drivers from surface cues. Crome creates two types of synthetic training pairs: causal augmentations, which differ along a specific causal attribute (such as factuality) and enforce sensitivity to genuine quality changes, and neutral augmentations, which are tie-labeled pairs that differ mainly in spurious attributes (such as style) and enforce invariance to them. Together, these pairs improve the model's robustness and its accuracy on reward benchmarks.
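
The two augmentation types can be sketched as a simple data-construction step. In this sketch the LLM rewriting calls are replaced by hypothetical stub functions (`degrade_factuality`, `restyle`) so that it runs standalone. In Crome the counterfactual rewrites are generated by an LLM, and the data layout, label encoding, and helper names here are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PreferencePair:
    prompt: str
    response_a: str
    response_b: str
    label: float  # 1.0: a preferred, 0.0: b preferred, 0.5: tie


def build_augmented_pairs(
    prompt: str,
    answer: str,
    degrade_causal: Callable[[str], str],
    rewrite_spurious: Callable[[str], str],
) -> List[PreferencePair]:
    """Create one causal and one neutral augmentation from a single answer."""
    pairs = []
    # Causal augmentation: worsen a causal attribute (here, factuality) while
    # leaving style alone, so the original answer should be preferred.
    pairs.append(PreferencePair(prompt, answer, degrade_causal(answer), label=1.0))
    # Neutral augmentation: change only a spurious attribute (here, formatting
    # and padding); quality is unchanged, so the pair is labeled a tie.
    pairs.append(PreferencePair(prompt, answer, rewrite_spurious(answer), label=0.5))
    return pairs


# Hypothetical stand-ins for LLM-generated rewrites.
def degrade_factuality(text: str) -> str:
    return text.replace("Paris", "Lyon")


def restyle(text: str) -> str:
    return "**Answer:** " + text + " Hope this helps!"


pairs = build_augmented_pairs(
    "What is the capital of France?",
    "The capital of France is Paris.",
    degrade_factuality,
    restyle,
)
for p in pairs:
    print(p.label, "|", p.response_b)
```

The tie label is the key design choice: it tells the reward model that a change in style alone should not move the reward, rather than merely leaving such pairs out of training.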

Crome works in two main stages: generating attribute-aware counterfactual data based on the causal model, and training the reward model with a specialized loss on the combined data. For evaluation, the researchers used several base LLMs, including Gemma-2-9B-IT and Qwen2.5-7B, and reported substantial improvements over standard baselines.
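
The article does not spell out Crome's exact loss, so the following is only a hedged sketch of one natural way to train on the combined data: a binary cross-entropy on the reward margin with soft targets. Original and causal pairs use target 1 or 0, as in Bradley-Terry, while neutral tie pairs use target 0.5, which is minimized when the two rewards are equal. The function names and the `neutral_weight` parameter are hypothetical, not values or APIs from the paper.

```python
import math
from typing import List, Tuple


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def pair_loss(r_a: float, r_b: float, target: float) -> float:
    """Cross-entropy between the target P(a > b) and sigmoid(r_a - r_b)."""
    margin = r_a - r_b
    return -(target * log_sigmoid(margin) + (1.0 - target) * log_sigmoid(-margin))


def combined_loss(
    preference_pairs: List[Tuple[float, float, float]],
    neutral_pairs: List[Tuple[float, float]],
    neutral_weight: float = 1.0,
) -> float:
    """Average loss over labeled (original + causal) pairs plus weighted tie pairs.

    preference_pairs: (r_a, r_b, target) with target 1.0 or 0.0.
    neutral_pairs: (r_a, r_b) that should receive equal reward (target 0.5).
    """
    n = len(preference_pairs) + len(neutral_pairs)
    if n == 0:
        raise ValueError("need at least one pair")
    pref = sum(pair_loss(a, b, t) for a, b, t in preference_pairs)
    tie = sum(pair_loss(a, b, 0.5) for a, b in neutral_pairs)
    return (pref + neutral_weight * tie) / n


# A tie pair with equal rewards costs log(2), the minimum for target 0.5;
# a tie pair with a large reward gap (e.g. rewarding extra length) costs more.
print(round(pair_loss(1.0, 1.0, 0.5), 4))  # 0.6931
print(round(pair_loss(3.0, 1.0, 0.5), 4))  # 1.1269
```

Using a single margin-based cross-entropy for both pair types keeps one scoring head and one objective; only the target changes between sensitivity (causal) and invariance (neutral) examples.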

Crome performs strongly across multiple benchmarks, with especially notable gains in safety and reasoning. It also performs well on WildGuardTest, lowering the attack success rate on harmful prompts while keeping the refusal rate on benign prompts at a similar level.
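
The two WildGuardTest quantities can be read as simple rates over judged outcomes. This is a minimal sketch, assuming each response has already been judged (by a classifier or a human) as harmful or not and as a refusal or not. The record format, function names, and toy data are hypothetical and are not WildGuardTest's official tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JudgedResponse:
    prompt_is_harmful: bool  # ground-truth label of the prompt
    response_refused: bool   # did the model decline to answer?
    response_harmful: bool   # did the response contain harmful content?


def attack_success_rate(results: List[JudgedResponse]) -> float:
    """Fraction of harmful prompts that elicited a harmful response (lower is better)."""
    harmful = [r for r in results if r.prompt_is_harmful]
    if not harmful:
        return 0.0
    return sum(r.response_harmful for r in harmful) / len(harmful)


def benign_refusal_rate(results: List[JudgedResponse]) -> float:
    """Fraction of benign prompts the model refused (over-refusal; lower is better)."""
    benign = [r for r in results if not r.prompt_is_harmful]
    if not benign:
        return 0.0
    return sum(r.response_refused for r in benign) / len(benign)


# Toy judged outcomes, for illustration only.
results = [
    JudgedResponse(prompt_is_harmful=True, response_refused=True, response_harmful=False),
    JudgedResponse(prompt_is_harmful=True, response_refused=False, response_harmful=True),
    JudgedResponse(prompt_is_harmful=False, response_refused=False, response_harmful=False),
    JudgedResponse(prompt_is_harmful=False, response_refused=True, response_harmful=False),
    JudgedResponse(prompt_is_harmful=False, response_refused=False, response_harmful=False),
]
print(attack_success_rate(results))            # 0.5
print(round(benign_refusal_rate(results), 4))  # 0.3333
```

A more robust reward model should push the first number down without pushing the second up, which is the trade-off described above.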

Looking ahead, the authors see causal data augmentation as a promising direction for synthetic data generation, opening new possibilities for pre-training base models.

Paper: https://arxiv.org/abs/2506.16507

Key Points:

🌟 The Crome framework, proposed by researchers from Google DeepMind, McGill University, and Mila - Quebec AI Institute, aims to make reward models more robust.

📈 Crome significantly improves reward model performance across multiple tasks through its causal and neutral augmentation strategies.

🔒 Crome performs well in safety tests, reducing the attack success rate and improving the model's reliability.