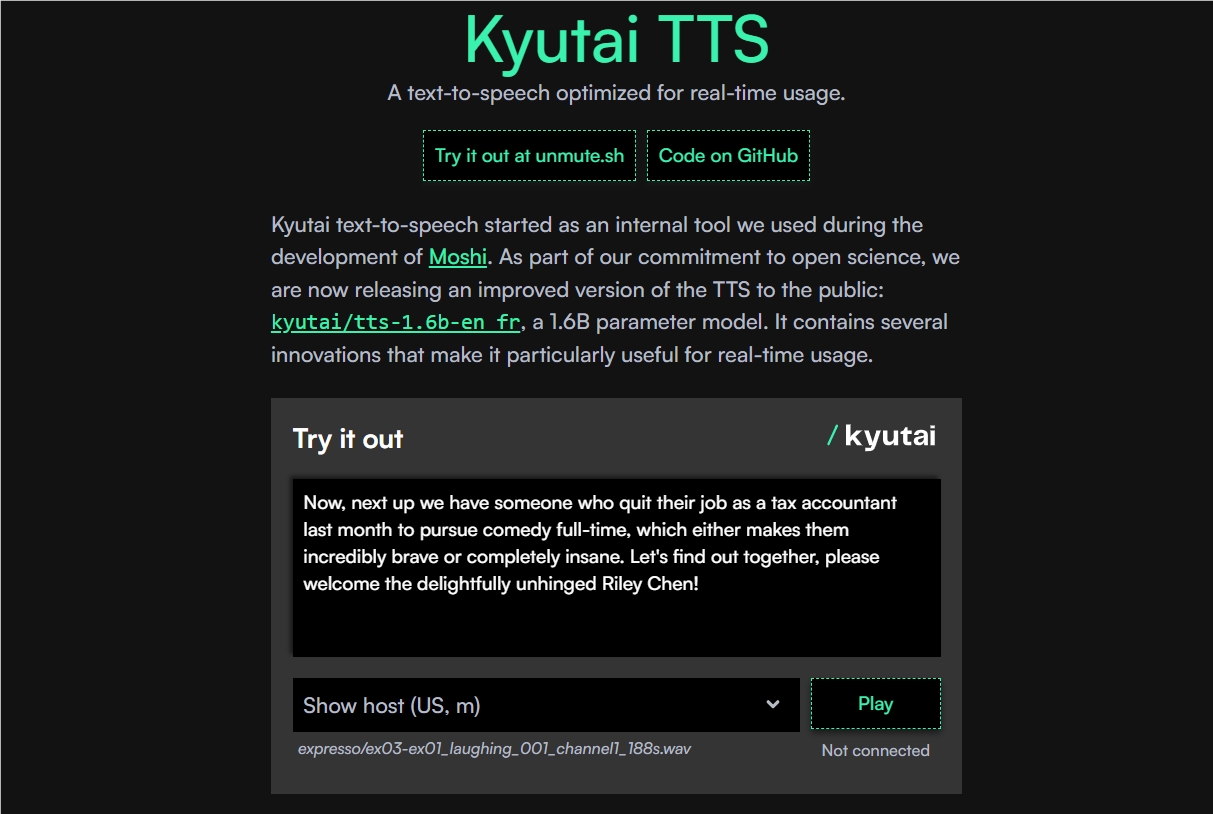

Recently, the French AI laboratory Kyutai announced the open-source release of its new text-to-speech model, Kyutai TTS, a high-performance, low-latency speech synthesis solution for developers and researchers worldwide. The release not only advances open-source AI technology but also opens up new possibilities for multilingual voice interaction applications. AIbase offers an exclusive analysis of the model's highlights and their potential impact.

Ultra-low latency, new real-time interaction experience

Kyutai TTS has drawn industry attention for its performance. The model supports streaming text input, so it can begin generating natural, fluent speech before the full text has arrived. On an L40S GPU, Kyutai TTS can serve up to 32 requests concurrently with a latency as low as 350 milliseconds, a solid foundation for real-time voice interaction. For applications such as virtual assistants, real-time subtitle generation, and online education platforms, this low latency can significantly improve the user experience.

High-precision speech output, details revealed

Kyutai TTS excels not only in speed but also in accuracy. The model achieves a word error rate (WER) of 2.82% for English and 3.29% for French, meaning the generated speech is highly intelligible. Its speaker similarity reaches 77.1% for English and 78.7% for French, so the output is not only natural and fluent but also closely reproduces the voice characteristics of the target speaker. In addition, Kyutai TTS outputs precise word-level timestamps, which is valuable for scenarios that require tight synchronization, such as subtitle generation or dubbing.
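WER, the metric behind the 2.82% and 3.29% figures, counts the word substitutions (S), deletions (D), and insertions (I) needed to turn a transcript into the reference text, divided by the number of reference words (N): WER = (S + D + I) / N. For TTS evaluation, the transcript is typically obtained by running a speech recognizer on the generated audio. The Python sketch below computes WER with a word-level edit distance. The function name `word_error_rate` is a hypothetical illustration, not Kyutai's evaluation code, and real benchmarks usually apply extra text normalization (punctuation, numbers) that is reduced here to simple lowercasing.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Hypothetical helper: WER = (S + D + I) / N via word-level edit distance."""
    # Minimal normalization (assumption): lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edits needed to turn the first i-1 reference words into hyp[:j].
    # Row 0: turning an empty reference into hyp[:j] takes j insertions.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        # Turning ref[:i] into an empty hypothesis takes i deletions.
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of ref[i-1]
                curr[j - 1] + 1,     # insertion of hyp[j-1]
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr

    # Total edits divided by the number of reference words.
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # One deleted word out of six reference words: WER = 1/6.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Under this definition, a WER of 2.82% corresponds to roughly 2.82 word errors per 100 words of reference text.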

Project page: https://kyutai.org/next/tts

Multilingual support, wide range of application scenarios

Kyutai TTS currently supports English and French and can generate speech for long texts such as full articles. This gives it broad potential in fields such as education, media production, and voice navigation. In education, for example, it can read text aloud for visually impaired users; in media, its low latency and high-fidelity speech can be used to produce podcast or audiobook content quickly. Kyutai plans to expand language support further through community contributions, broadening the model's global applicability.

Open source empowerment, community-driven innovation

As a fully open-source model, Kyutai TTS is released under the CC-BY-4.0 license, which allows developers to freely use, modify, and redistribute it, provided they give appropriate attribution. This open approach lowers the barrier to entry and gives the global AI community a valuable resource. Kyutai is also calling on community members to donate voice data to help the model gain more voice styles and languages, jointly advancing speech synthesis technology.

Future Outlook: A New Milestone in AI Voice Technology

The release of Kyutai TTS marks a new high point for open-source AI voice technology. Its streaming architecture, ultra-low latency, and high-fidelity speech output give developers powerful tools and should help spread and advance voice interaction technology. AIbase believes that as more developers and researchers join the Kyutai TTS ecosystem, the model could spark a new wave of AI voice applications around the world.