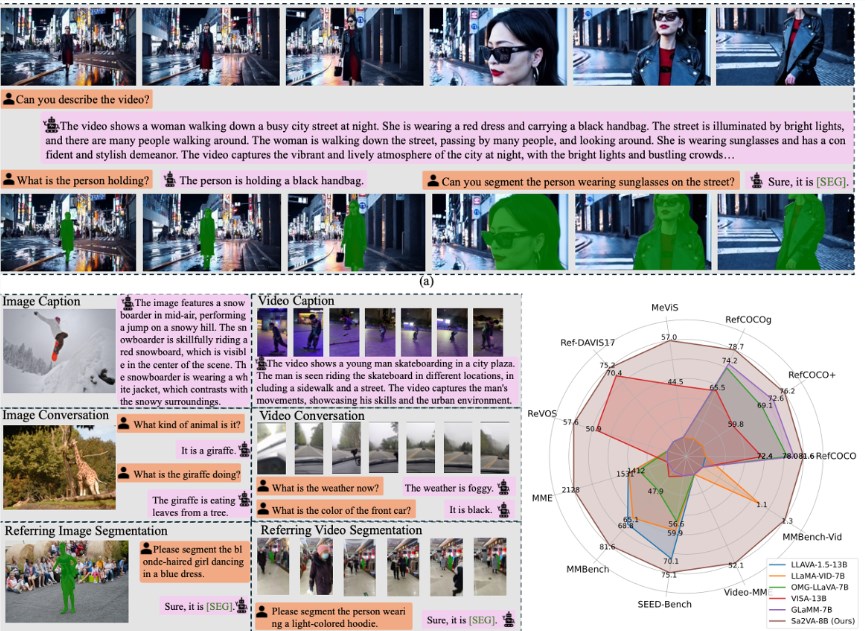

Recently, the Tongyi Lab of Alibaba and the School of Computer Science at Nankai University jointly released an innovative video large model compression method - LLaVA-Scissor. The emergence of this technology aims to address a series of challenges in video model processing, especially the issues of inference speed and scalability caused by a high number of tokens when processing video frames with traditional methods.

Video models need to encode each frame individually, which leads to a sharp increase in the number of tokens. Although traditional token compression methods such as FastV, VisionZip, and PLLaVA have achieved certain results in the image field, they expose problems such as insufficient semantic coverage and temporal redundancy in video understanding. To address this, LLaVA-Scissor adopts a graph theory-based algorithm - the SCC method, which can effectively identify different semantic regions in the token set.

The SCC method calculates the similarity between tokens, constructs a similarity graph, and identifies connected components in the graph. Each token in a connected component can be replaced by a representative token, thereby significantly reducing the number of tokens. To improve processing efficiency, LLaVA-Scissor adopts a two-step spatiotemporal compression strategy, performing spatial compression and temporal compression separately. In spatial compression, semantic regions are identified for each frame, while temporal compression removes redundant information across frames, ensuring that the final generated tokens can efficiently represent the entire video.

In experimental validation, LLaVA-Scissor has shown outstanding performance in multiple video understanding benchmark tests, especially showing significant advantages under low token retention rates. For example, in the video question-answering benchmark test, LLaVA-Scissor is comparable to the original model at a 50% token retention rate, and outperforms other methods at 35% and 10% retention rates. In long video understanding tests, the method also demonstrated good performance, achieving an accuracy of 57.94% on the EgoSchema dataset at a 35% token retention rate.

This innovative compression technology not only improves the efficiency of video processing but also opens up new directions for the development of future video understanding and processing. The release of LLaVA-Scissor is undoubtedly expected to have a positive impact in the field of video artificial intelligence.

Key Points:

🌟 LLaVA-Scissor is an innovative video large model compression technology developed jointly by Alibaba and Nankai University, aimed at solving the problem of a sharp increase in token numbers in traditional methods.

🔍 The SCC method calculates token similarity, builds a graph, and identifies connected components, effectively reducing the number of tokens while preserving key semantic information.

🏆 LLaVA-Scissor has shown excellent performance in multiple video understanding benchmark tests, especially demonstrating significant performance advantages at low token retention rates.