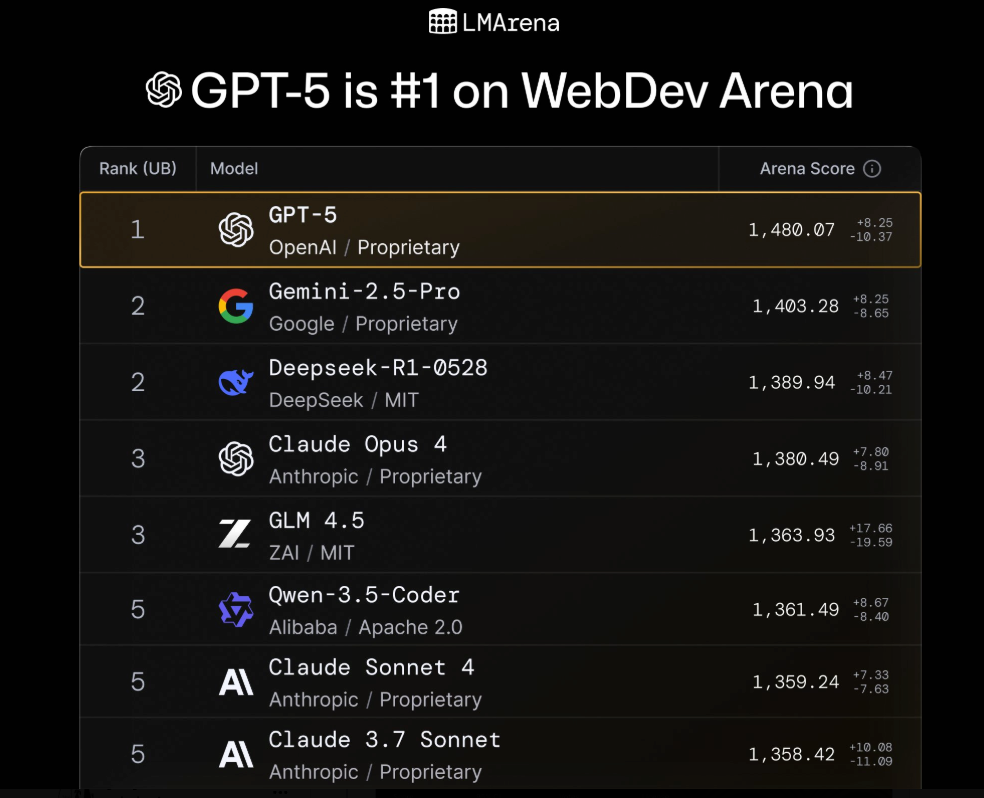

AIBase reports that OpenAI's GPT-5 has topped the leaderboard on LMArena, the widely followed AI model evaluation platform, with the highest Arena score. It surpassed strong competitors such as Google Gemini 2.5 Pro and Anthropic Claude Opus 4, taking the lead in the current race among large AI models.

Leading Across the Board: First Place in Six Core Areas

GPT-5's performance on LMArena is not a breakthrough in a single dimension but a lead across multiple key evaluation areas. According to the latest ranking data, GPT-5 ranks first in the following six core capability dimensions:

Handling Complex Prompts: It performs best in processing complex, ambiguous, or multi-layered user instructions, demonstrating strong understanding and reasoning capabilities

Programming Ability: It outperforms all competitors in code generation, debugging, and architectural design tasks

Mathematical Reasoning: It reaches a new level of performance in solving mathematical problems, logical deduction, and quantitative analysis

Creative Ability: It shows excellent imagination and originality in creative writing, content generation, and artistic creation

Long Query Processing: It maintains consistent high-quality output when handling long texts, complex conversations, and multi-turn interactions

Visual Tasks: It achieves breakthrough progress in image understanding, visual reasoning, and multimodal interaction

This broad lead indicates that GPT-5 does not merely excel at specific tasks; more importantly, its general capabilities have improved across the board.

The Groundwork Behind the "Summit" Code Name

Notably, GPT-5 was tested on the LMArena platform under the code name "Summit" before its official release. The code name aptly foreshadowed its eventual ranking: the top of the leaderboard.

During the testing phase, "Summit" already demonstrated superior capabilities in text processing, web development, and visual tasks, laying the foundation for its across-the-board lead after the official release. The move from a code name to an official name also reflects OpenAI's strict control over product quality and its confidence in the model's market performance.

Reshaping the Competitive Landscape

GPT-5's top ranking has had a significant impact on the competitive landscape of the AI industry. Competitors that previously ranked strongly in various evaluations include:

Google Gemini 2.5 Pro: Once led in multimodal tasks, but was surpassed by GPT-5 in overall scores

Anthropic Claude Opus 4: Long a strong competitor in safety and reasoning abilities, it now ranks in the second tier

Other mainstream models: AI models from companies such as Meta and Amazon have also seen their positions on the leaderboard affected by the rise of GPT-5