Xiaomi's large-model team has open-sourced its latest multimodal large model, Xiaomi MiMo-VL-7B-2508, released in both RL and SFT versions.

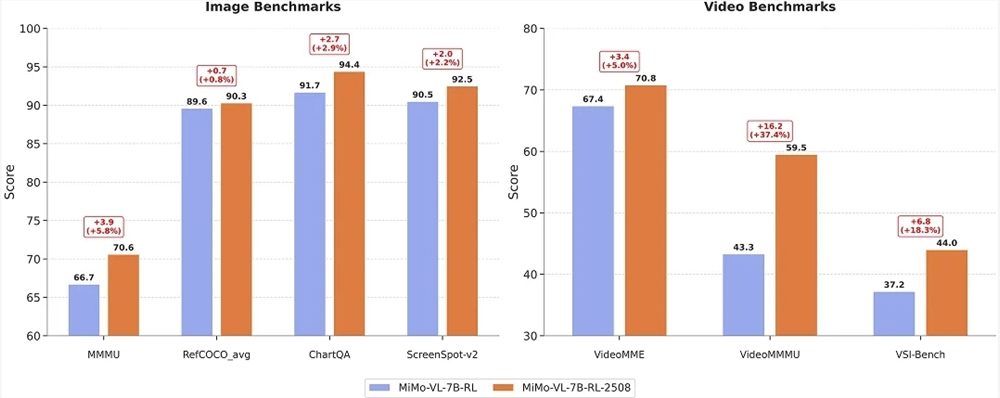

According to official data, the new model sets new records in four core capabilities: multidisciplinary reasoning, document understanding, GUI grounding, and video understanding. Its MMMU score exceeds 70 for the first time, ChartQA reaches 94.4, ScreenSpot-v2 reaches 92.5, and VideoMME improves to 70.8.

This iteration improves the stability of both the reinforcement learning (RL) and supervised fine-tuning (SFT) processes, raising the model's score on Xiaomi's internal VLM Arena from 1093.9 to 1131.2.

Notably, users can switch between "thinking" and "non-thinking" modes: adding the "/no_think" instruction to a question switches the model to non-thinking mode. Thinking mode displays the full reasoning chain and achieves a 100% mode-control success rate; non-thinking mode answers directly, responds faster, and achieves a 99.84% control success rate.
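The mode switch can be sketched as a small prompt helper. This is a hypothetical illustration, not Xiaomi's API: it assumes "/no_think" is appended to the end of the question text, that messages follow the Hugging Face-style multimodal chat format, and that thinking-mode output wraps the reasoning chain in `<think>...</think>` tags. The helper names `with_mode`, `build_messages`, and `split_reasoning` are invented for illustration.

```python
# Hypothetical sketch. Assumptions (not confirmed by the announcement):
# - "/no_think" goes at the end of the user's question text
# - messages use the Hugging Face-style multimodal chat format
# - thinking-mode output wraps reasoning in <think>...</think> tags

NO_THINK = "/no_think"


def with_mode(question: str, thinking: bool = True) -> str:
    """Return the question text, appending /no_think when thinking is disabled."""
    question = question.rstrip()
    return question if thinking else f"{question} {NO_THINK}"


def build_messages(image_path: str, question: str, thinking: bool = True) -> list:
    """Build a single-turn chat message containing one image and one question."""
    return [{
        "role": "user",
        "content": [
            {"type": "image", "image": image_path},
            {"type": "text", "text": with_mode(question, thinking)},
        ],
    }]


def split_reasoning(output: str) -> tuple[str, str]:
    """Split a response into (reasoning, answer), assuming <think>...</think> tags."""
    start, end = "<think>", "</think>"
    if start in output and end in output:
        head, _, rest = output.partition(start)
        reasoning, _, answer = rest.partition(end)
        return reasoning.strip(), (head + answer).strip()
    # No reasoning block found (e.g. non-thinking mode): whole output is the answer.
    return "", output.strip()


msgs = build_messages("chart.png", "What is the peak value?", thinking=False)
print(msgs[0]["content"][1]["text"])  # What is the peak value? /no_think
```

Keeping the mode flag in one helper means the same question can be sent in either mode without editing the prompt text by hand.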

MiMo-VL-7B-RL-2508

Recommended for most users and use cases.

Open-source repository: https://huggingface.co/XiaomiMiMo/MiMo-VL-7B-RL-2508

MiMo-VL-7B-SFT-2508

Intended as a base for users' own SFT and RL training, according to their needs. Compared with the previous SFT release, this model offers more stable RL training.

Open-source repository: https://huggingface.co/XiaomiMiMo/MiMo-VL-7B-SFT-2508