In robotics, getting artificial intelligence to truly "understand" the 3D world has long been a difficult problem. Most traditional vision-language-action (VLA) models are trained mainly on 2D image and text data, which makes it hard for them to understand 3D space in real environments. A research team from Shanghai Jiao Tong University and the University of Cambridge has recently proposed Evo-0, an enhanced vision-language-action model that injects 3D geometric priors in a lightweight way and significantly improves a robot's spatial understanding in complex tasks.

The key innovation of Evo-0 is its use of VGGT (Visual Geometry Grounded Transformer), a visual geometry foundation model, to extract 3D structural information from multi-view RGB images and combine it with an existing vision-language model. This approach requires no additional sensors or explicit depth input, yet significantly strengthens spatial perception. In RLBench simulation experiments on five tasks requiring fine-grained manipulation, Evo-0 achieved a success rate 15% higher than the baseline model pi0 and 31% higher than OpenVLA-OFT.

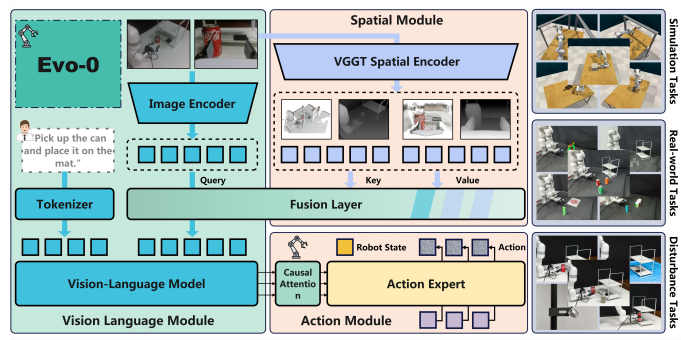

Specifically, Evo-0 uses VGGT as a spatial encoder and introduces 3D tokens extracted by VGGT, which carry geometric information such as depth context and spatial relationships. A cross-attention fusion module combines the 2D visual tokens with these 3D tokens, strengthening the model's understanding of spatial layouts and object relationships. The design improves flexibility and ease of deployment while keeping training efficient.
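To make the fusion step more concrete, here is a minimal single-head cross-attention sketch in NumPy. It assumes that the 2D visual tokens act as queries while the VGGT-derived 3D tokens supply keys and values, with a residual connection back onto the 2D tokens. The function name, token counts, dimensions, and random weights are hypothetical illustrations, not details taken from the Evo-0 paper.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax: subtract the row max before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention_fuse(tokens_2d, tokens_3d, w_q, w_k, w_v, w_o):
    """Hypothetical fusion: 2D visual tokens attend to 3D geometry tokens.

    tokens_2d: (N2, d)    2D visual tokens from the VLM's vision encoder (assumed)
    tokens_3d: (N3, d_3d) 3D tokens from a spatial encoder such as VGGT (assumed)
    w_q: (d, d_k), w_k: (d_3d, d_k), w_v: (d_3d, d_k), w_o: (d_k, d)
    Returns the fused tokens (N2, d) and the attention weights (N2, N3).
    """
    q = tokens_2d @ w_q                      # queries come from the 2D tokens
    k = tokens_3d @ w_k                      # keys come from the 3D tokens
    v = tokens_3d @ w_v                      # values come from the 3D tokens
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product scores, (N2, N3)
    attn = softmax(scores, axis=-1)          # each 2D token's weights over 3D tokens
    fused = tokens_2d + (attn @ v) @ w_o     # residual add keeps the 2D token shape
    return fused, attn


# Usage with made-up sizes: 16 visual tokens of width 64, 32 geometry tokens of width 128.
rng = np.random.default_rng(0)
tokens_2d = rng.normal(size=(16, 64))
tokens_3d = rng.normal(size=(32, 128))
d_k = 32
w_q = rng.normal(size=(64, d_k)) * 0.1
w_k = rng.normal(size=(128, d_k)) * 0.1
w_v = rng.normal(size=(128, d_k)) * 0.1
w_o = rng.normal(size=(d_k, 64)) * 0.1

fused, attn = cross_attention_fuse(tokens_2d, tokens_3d, w_q, w_k, w_v, w_o)
print(fused.shape)  # (16, 64)
```

Using the 2D tokens as queries keeps the output sequence the same length and width as the original visual tokens, so the rest of the vision-language model can consume the fused tokens unchanged. Whether Evo-0 assigns queries, keys, and values exactly this way is an assumption of this sketch.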

In real-world experiments, Evo-0 also handled complex spatial tasks well, including centering a target, inserting into a hole, and grasping densely packed objects, and outperformed the baseline model by an average of 28.88% in success rate. Its advantage is most pronounced in tasks that require understanding and controlling complex spatial relationships.

In summary, by integrating spatial information in a lightweight way, Evo-0 offers a new, practical path toward future general-purpose robot policies. The work has drawn wide attention in the academic community and opens up new possibilities for practical applications in robotics.