Zhipu has released and open-sourced GLM-4.5V, which it describes as the best-performing open-source visual reasoning model at the 100B-parameter scale worldwide. The company presents the release as another exploratory step on its path toward Artificial General Intelligence (AGI). The model is available on both the ModelScope community and Hugging Face. With 106B total parameters and 12B activated parameters, it is positioned as a new milestone in multimodal reasoning.

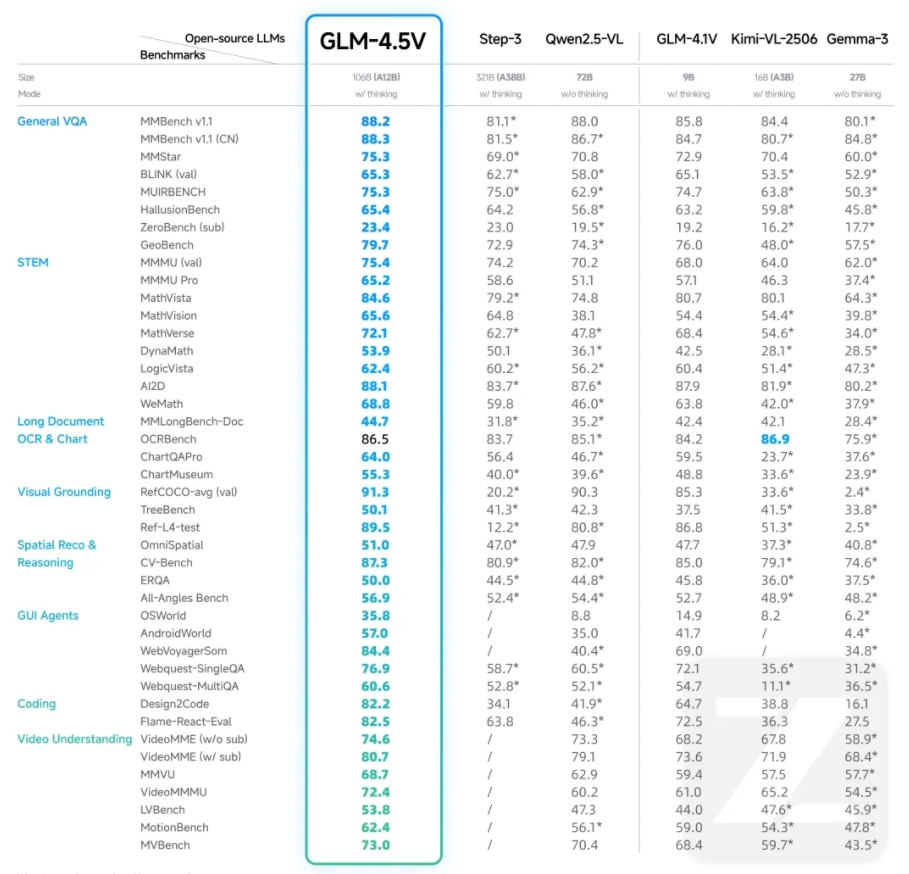

GLM-4.5V is built on GLM-4.5-Air, Zhipu's new-generation flagship text foundation model, and continues the technical approach of GLM-4.1V-Thinking. Across 41 public visual multimodal benchmarks, GLM-4.5V achieved state-of-the-art (SOTA) results among open-source models of comparable size, covering common tasks such as image, video, and document understanding as well as GUI agent operation. Beyond benchmark scores, the model also emphasizes performance and usability in real-world scenarios.

Through efficient hybrid training, GLM-4.5V can process many types of visual content and supports visual reasoning across a full range of scenarios: image reasoning, video understanding, GUI tasks, complex chart and long-document parsing, and grounding. A newly added "thinking mode" switch lets users choose between fast responses and deep reasoning, balancing efficiency and effectiveness.
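To make the "thinking mode" switch concrete, here is a minimal sketch of how a request body might toggle it. Everything in it is an assumption for illustration: the model identifier `glm-4.5v`, the OpenAI-style `messages` layout, and the `thinking` field are not confirmed by this article, so check the platform documentation before relying on them. The code only builds a dictionary and sends nothing over the network.

```python
import json
from typing import Any, Dict

# Assumed model identifier; the article does not state the exact API name.
MODEL_NAME = "glm-4.5v"


def build_request(prompt: str, image_url: str, deep_reasoning: bool) -> Dict[str, Any]:
    """Build a hypothetical chat request body with the thinking switch set.

    The field names below are illustrative assumptions, not a documented schema.
    """
    return {
        "model": MODEL_NAME,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": prompt},
                ],
            }
        ],
        # Hypothetical switch: "enabled" for deep reasoning, "disabled" for fast replies.
        "thinking": {"type": "enabled" if deep_reasoning else "disabled"},
    }


if __name__ == "__main__":
    body = build_request(
        "What does this chart show?",
        "https://example.com/chart.png",  # placeholder URL
        deep_reasoning=True,
    )
    print(json.dumps(body, indent=2))
```

Keeping the switch as a single boolean at the call site makes it easy to use fast mode for simple lookups and reserve deep reasoning for tasks such as chart or long-document analysis.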

To help developers try out GLM-4.5V's capabilities, Zhipu Qingyan has also open-sourced a desktop assistant application. The application captures screenshots and screen recordings in real time and relies on GLM-4.5V to handle visual reasoning tasks such as code assistance, video content analysis, game assistance, and document interpretation, acting as a companion that watches the screen with you for both work and entertainment.

The GLM-4.5V API is now available on BigModel.cn, Zhipu's open platform, with a free resource package of 20 million tokens for all new and existing users. The model maintains high accuracy while balancing inference speed and deployment cost, giving enterprises and developers a cost-effective multimodal AI solution. API pricing is as low as 2 yuan per million input tokens and 6 yuan per million output tokens, with a response speed of 60-80 tokens/s.
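As a rough illustration of what these prices mean per request, the sketch below estimates cost and generation time. It assumes the "as low as" rates quoted above (2 yuan per million input tokens, 6 yuan per million output tokens) apply to every token, and it uses 70 tokens/s, the midpoint of the quoted 60-80 range, as a default. The function names are hypothetical, and actual billing may differ.

```python
from decimal import Decimal

# Rates taken from the article's "as low as" pricing; real tiers may vary.
INPUT_YUAN_PER_MILLION = Decimal("2")
OUTPUT_YUAN_PER_MILLION = Decimal("6")
TOKENS_PER_MILLION = Decimal("1000000")


def estimate_cost_yuan(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost in yuan of one request at the quoted rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        Decimal(input_tokens) * INPUT_YUAN_PER_MILLION
        + Decimal(output_tokens) * OUTPUT_YUAN_PER_MILLION
    )
    return total / TOKENS_PER_MILLION


def estimate_generation_seconds(output_tokens: int, tokens_per_second: float = 70.0) -> float:
    """Estimate generation time; 70 tokens/s is the midpoint of the quoted range."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    print(estimate_cost_yuan(3000, 500))     # 0.009
    print(estimate_generation_seconds(700))  # 10.0
```

Under these assumptions, a request with 3,000 input tokens and 500 output tokens costs about 0.009 yuan, and the 20 million free tokens would cover roughly 5,700 requests of that size.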

In addition, GLM-4.5V demonstrates strong performance in visual grounding, front-end replication, image recognition and reasoning, in-depth interpretation of complex documents, and GUI agent tasks. For example, it can accurately identify and locate target objects, recreate web pages, infer background information from subtle clues in images, read and interpret long, complex documents spanning dozens of pages, and handle GUI tasks such as question answering and icon localization.

Architecturally, GLM-4.5V consists of a visual encoder, an MLP adapter, and a language decoder. It supports a 64K multimodal long context, accepts both image and video inputs, and uses 3D convolution to improve video processing efficiency. The model uses a bicubic interpolation mechanism, which improves its handling of high-resolution images and images with extreme aspect ratios. It also introduces 3D Rotary Position Embedding (3D-RoPE), which significantly strengthens its perception of and reasoning about 3D spatial relationships in multimodal information.
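The article does not describe how 3D-RoPE is implemented. The sketch below shows one common way to extend rotary position embeddings to three axes, assuming the feature vector is split into three equal parts that are rotated by the time, height, and width coordinates respectively. The functions `rope_1d`, `rope_3d`, and `dot` are hypothetical helpers written for this illustration and are not taken from the GLM-4.5V code.

```python
import math
from typing import List, Sequence


def rope_1d(vec: Sequence[float], pos: float, base: float = 10000.0) -> List[float]:
    """Rotate consecutive pairs of `vec` by angles proportional to `pos`."""
    if len(vec) % 2 != 0:
        raise ValueError("vector length must be even")
    half = len(vec) // 2
    out: List[float] = []
    for i in range(half):
        # Each pair gets its own frequency, as in standard rotary embeddings.
        theta = pos * base ** (-i / half)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out


def rope_3d(vec: Sequence[float], t: float, h: float, w: float) -> List[float]:
    """Assumed 3D variant: one third of the vector per axis (time, height, width)."""
    if len(vec) % 6 != 0:
        raise ValueError("vector length must be divisible by 6")
    third = len(vec) // 3
    return (
        rope_1d(vec[:third], t)
        + rope_1d(vec[third:2 * third], h)
        + rope_1d(vec[2 * third:], w)
    )


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain dot product, used to compare attention scores."""
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    q = [float(i + 1) for i in range(12)]
    k = [float(12 - i) for i in range(12)]
    # Both scores use the same relative offset (3, 4, 2), so they should match.
    print(dot(rope_3d(q, 1, 2, 3), rope_3d(k, 4, 6, 5)))
    print(dot(rope_3d(q, 0, 0, 0), rope_3d(k, 3, 4, 2)))
```

The property this construction provides is that the query-key score depends only on the relative offset along each axis, so the model sees how far apart two patches are in time and space rather than their absolute positions.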

GitHub: https://github.com/zai-org/GLM-V

Hugging Face: https://huggingface.co/collections/zai-org/glm-45v-68999032ddf8ecf7dcdbc102

ModelScope Community: https://modelscope.cn/collections/GLM-45V-8b471c8f97154e