Researchers at the AI company DeepSeek announced on Monday the release of a new experimental model, V3.2-exp, designed to significantly reduce the cost of long-context operations through a new "sparse attention" mechanism. The model was published simultaneously on Hugging Face and GitHub, together with a detailed academic paper.

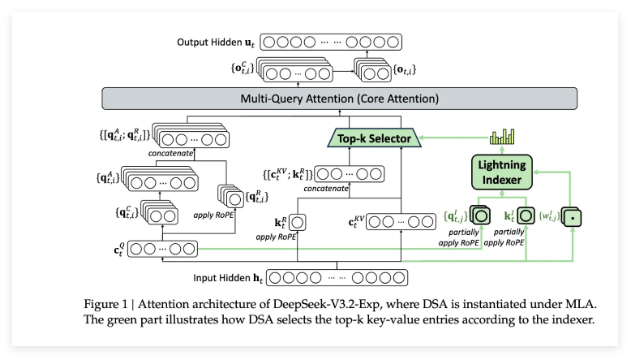

At the core of the model is DeepSeek's sparse attention mechanism, which has two parts. First, a module called the "Lightning Indexer" prioritizes specific excerpts from the context window. Second, a separate "fine-grained token selection system" picks key tokens from those prioritized excerpts and loads them into the model's limited attention window. Together, these components let the model operate over long stretches of context with a comparatively small server load.
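A minimal sketch of the two-stage idea described above, in plain Python. Everything here is an assumption for illustration: the indexer is a hypothetical dot product over a truncated key vector, "excerpts" are modeled as fixed-size contiguous blocks, and `block_size`, `top_blocks`, and `top_tokens` are made-up parameter names. It is not DeepSeek's implementation, which uses trained components and optimized kernels.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def index_scores(query_idx, keys_idx):
    """Hypothetical lightweight indexer: dot products in a small index space."""
    return [dot(query_idx, k) for k in keys_idx]


def select_tokens(scores, block_size, top_blocks, top_tokens):
    """Two-stage selection: rank contiguous excerpts, then tokens inside them."""
    n = len(scores)
    blocks = [range(s, min(s + block_size, n)) for s in range(0, n, block_size)]
    # Stage 1: keep the excerpts whose best token scores highest.
    best_blocks = sorted(blocks, key=lambda b: max(scores[i] for i in b),
                         reverse=True)[:top_blocks]
    # Stage 2: keep the highest-scoring individual tokens from those excerpts.
    candidates = [i for b in best_blocks for i in b]
    chosen = sorted(candidates, key=lambda i: scores[i], reverse=True)[:top_tokens]
    return sorted(chosen)


def sparse_attention(query, keys, values, chosen):
    """Ordinary scaled dot-product attention, restricted to the chosen tokens."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) * scale for i in chosen])
    return [sum(w * values[i][j] for w, i in zip(weights, chosen))
            for j in range(len(values[0]))]


# Toy usage with random data (all sizes are arbitrary).
random.seed(0)
n, d, d_idx = 64, 8, 2
keys = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
values = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
query = [random.gauss(0, 1) for _ in range(d)]

# Hypothetical index space: just the first d_idx coordinates of each vector.
scores = index_scores(query[:d_idx], [k[:d_idx] for k in keys])
chosen = select_tokens(scores, block_size=8, top_blocks=3, top_tokens=6)
out = sparse_attention(query, keys, values, chosen)
print(chosen, [round(x, 3) for x in out])
```

Only 6 of the 64 keys enter the softmax in this toy run. The point of the cheap first pass is that the expensive attention step then works on a small, fixed budget of tokens regardless of how long the context grows.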

In DeepSeek's preliminary testing, the new model showed significant advantages: the company reported that the cost of a simple API call in long-context operations could be cut by as much as half. More testing is needed to verify these claims, but because the model is open-weight and freely available on Hugging Face, third parties should be able to assess its real-world performance soon.
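To see why restricting attention to selected tokens matters most for long inputs, the rough cost model below counts multiply-adds in the attention-score step only. It is a back-of-the-envelope sketch under stated assumptions, not a reconstruction of DeepSeek's pricing: the indexer is assumed to score every causal query-key pair in a small dimension `index_dim`, the main attention is capped at `top_k` keys per query, and all parameter values are hypothetical. It ignores feed-forward layers, memory traffic, and pricing decisions, so the resulting ratio is not comparable to the reported API cost reduction.

```python
def dense_score_cost(seq_len: int, head_dim: int) -> int:
    """Multiply-adds for causal dense attention scores (query i sees i + 1 keys)."""
    return head_dim * seq_len * (seq_len + 1) // 2


def sparse_score_cost(seq_len: int, head_dim: int, index_dim: int, top_k: int) -> int:
    """Hypothetical two-stage cost: a cheap indexer over all causal pairs,
    then full-width attention over at most top_k selected keys per query."""
    indexer = index_dim * seq_len * (seq_len + 1) // 2
    main = head_dim * sum(min(i + 1, top_k) for i in range(seq_len))
    return indexer + main


# Illustrative, made-up parameters for a context of roughly 128K tokens.
seq_len, head_dim, index_dim, top_k = 128_000, 128, 16, 2048
dense = dense_score_cost(seq_len, head_dim)
sparse = sparse_score_cost(seq_len, head_dim, index_dim, top_k)
ratio = sparse / dense
print(f"sparse/dense score cost: {ratio:.3f}")
```

With these made-up numbers the ratio comes out to about 0.157. The indexer term still grows quadratically with context length, so in this model the savings depend on the indexer being much cheaper per query-key pair than full attention.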

The new model is one of a series of recent efforts to tackle AI inference costs, meaning the server costs of running an already-trained model, as distinct from the cost of training it. Whereas the R1 model focused on reducing training costs, this model targets the operational efficiency of the underlying Transformer architecture, which could make AI applications cheaper to deploy widely.

DeepSeek has drawn considerable attention during this year's AI boom. Its earlier R1 model was noted for its low-cost, reinforcement-learning-based training method. The sparse attention approach may not generate as much excitement as R1, but it could offer useful lessons to AI providers worldwide as they work to bring down the operating costs of their services.