Renowned European AI startup Multiverse Computing has launched two ultra-small AI models named after animal brain sizes: the "fly brain" SuperFly and the "chicken brain" ChickBrain. The company, which claims to have the smallest high-performance AI models in the world, wants to bring artificial intelligence to every Internet of Things (IoT) device.

Multiverse Computing is based in San Sebastián, Spain, and has around 100 employees. Its founders are Román Orús, a professor and one of Europe's leading figures in quantum computing and physics; quantum computing expert Samuel Mugel; and Enrique Lizaso Olmos, former Deputy CEO of Unnim Bank. The company closed a funding round of 189 million euros (approximately 215 million US dollars) in June and has raised approximately 250 million US dollars since its founding in 2019.

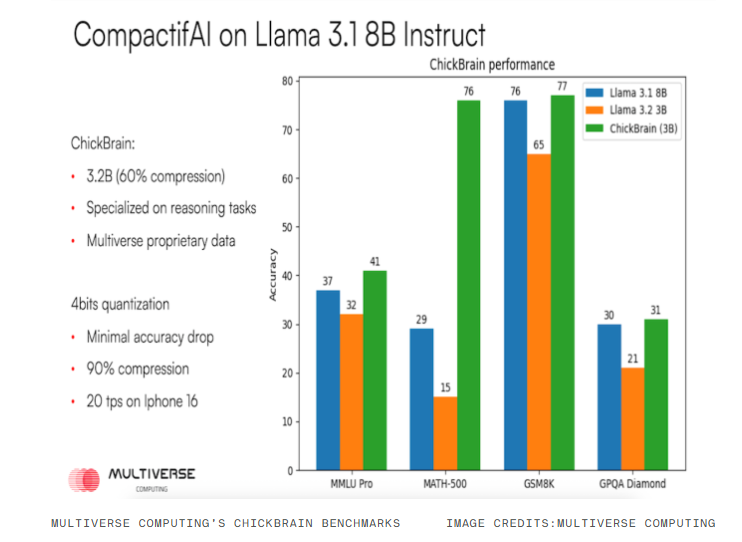

The company's core technology is a model compression technique called "CompactifAI." According to Orús, it is a compression algorithm based on principles from quantum physics that can significantly reduce the size of AI models without sacrificing performance. "Our compression technology is different from traditional computer science or machine learning compression methods because we come from the field of quantum physics," he explained. "It is a more refined and detailed compression algorithm."

SuperFly is the smallest model in the company's "Model Zoo" series. It is a compressed version of Hugging Face's open-source SmolLM2-135M: the original has 135 million parameters, while SuperFly has 94 million, a size Orús jokingly compared to that of a fly's brain.

SuperFly is designed for resource-constrained devices and can run with minimal processing power. It can be embedded in household appliances so that users can control them by voice, for example by telling a washing machine to "start quick wash" or by asking troubleshooting questions. In a live demonstration, the model ran a voice interface with the help of a simple Arduino processor.

The more powerful ChickBrain model has 3.2 billion parameters and is compressed from Meta's Llama 3.1 8B model, which gives it reasoning capabilities. Despite its larger parameter count, it is still small enough to run offline on a MacBook.
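To put the reported parameter counts in perspective, the following minimal sketch computes how much each model shrank and roughly how much memory its weights would occupy. The parameter counts come from the article, with 8 billion taken as the nominal size of Llama 3.1 8B. The storage precisions (2, 1, and 0.5 bytes per parameter) are assumptions for illustration, since the article does not say how the models are stored, and the estimate covers weights only, not activations or runtime overhead. The function names are hypothetical helpers, not part of any Multiverse tooling.

```python
# Parameter counts as reported in the article (8B is the nominal Llama 3.1 8B size).
MODELS = {
    "SuperFly": {"original": 135_000_000, "compressed": 94_000_000},
    "ChickBrain": {"original": 8_000_000_000, "compressed": 3_200_000_000},
}

# Assumed storage precisions in bytes per parameter; not stated in the article.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def reduction_percent(original: int, compressed: int) -> float:
    """Share of parameters removed by compression, in percent."""
    return 100.0 * (original - compressed) / original


def weight_footprint_gb(params: int, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, counts in MODELS.items():
        cut = reduction_percent(counts["original"], counts["compressed"])
        print(f"{name}: {cut:.1f}% fewer parameters")
        for precision, nbytes in BYTES_PER_PARAM.items():
            size = weight_footprint_gb(counts["compressed"], nbytes)
            print(f"  {precision}: ~{size:.2f} GB of weights")
```

By this arithmetic, SuperFly has about 30% fewer parameters than its source model and ChickBrain about 60% fewer. Under the assumed 16-bit precision, ChickBrain's weights would take roughly 6.4 GB, which is consistent with the claim that it fits on a laptop.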

Surprisingly, ChickBrain performs slightly better than the original model on several standard benchmarks, including the language benchmark MMLU-Pro, the math benchmarks Math 500 and GSM8K, and the general knowledge benchmark GPQA Diamond. The company presents this as evidence that its compression technology not only reduces model size but also maintains, and in some cases improves, performance.

Multiverse is already in discussions with major device manufacturers. "We are in talks with companies like Apple, Samsung, Sony, and HP," Orús said. "HP also participated in the previous round of investment." That round was led by the well-known European venture capital firm Bullhound Capital, with HP Technology Ventures, Toshiba, and other institutions participating.

In addition to selling models directly to device manufacturers, Multiverse offers its compressed models to developers through an API hosted on AWS, usually at a lower cost per token than competitors. The company has also provided compression services for other machine learning technologies, including image recognition, to clients such as BASF, Ally, Moody's, and Bosch.

The launch of these ultra-small models reflects an important trend in applied artificial intelligence: the shift from large cloud-based models to small models running on edge devices. With AI embedded directly in IoT devices, users get faster response times, better privacy protection, and intelligent features that work offline, without a network connection.