On Tuesday, Meta announced that it is rolling out AI-powered voice translation to Facebook and Instagram users worldwide, in markets where Meta AI is available. The feature lets creators translate the audio of their videos into other languages, extending the reach of their content to audiences who do not speak the original language.

The feature is now available in every market where Meta AI is offered, a significant step for Meta in multilingual content creation. Creators can distribute their content across languages without paying for translation or handling any of the technical work themselves.

Meta first previewed the feature at its Connect developer conference last year, when the company said it would pilot automatic translation of creators' voices in short videos on Facebook and Instagram. After nearly a year of testing and refinement, Meta considers the feature ready for global release.

On the technical side, Meta's translation system reproduces the creator's own voice. It analyzes and preserves the creator's original tone and speech characteristics so that the translated audio sounds natural and authentic, avoiding the mechanical quality common in traditional machine translation. This voice cloning means the translated content keeps the creator's personal characteristics and style of expression.

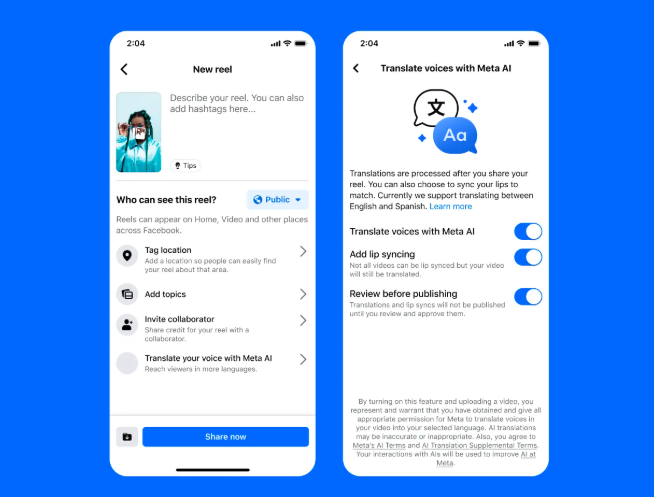

Creators can also choose to enable lip-syncing. This option automatically aligns the translated audio with the creator's mouth movements, so the translated video looks more natural and feels more authentic to viewers.

At launch, the feature supports translation in both directions between English and Spanish, and Meta says it will gradually add more languages. Given its global user base, future versions are expected to cover more major languages.

Access requirements differ by platform. On Facebook, creators need at least 1,000 followers to use the feature. On Instagram, it is open to all public accounts in regions where Meta AI is available. The two sets of requirements reflect the different creator ecosystems on each platform.
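The eligibility rules above can be summarized as a short check. This is only an illustrative sketch, not Meta's implementation: the `Creator` type, its field names, and the `can_use_voice_translation` function are hypothetical, and applying the Meta AI region requirement to both platforms is an assumption based on the stated availability of the feature.

```python
from dataclasses import dataclass

# Follower threshold for Facebook creators, as stated in the article.
FACEBOOK_MIN_FOLLOWERS = 1_000


@dataclass
class Creator:
    """Hypothetical account record; field names are illustrative only."""
    platform: str            # "facebook" or "instagram"
    followers: int
    is_public: bool
    in_meta_ai_region: bool  # True if Meta AI is available in the creator's market


def can_use_voice_translation(creator: Creator) -> bool:
    """Return True if the creator meets the access rules described in the article."""
    # Assumption: the Meta AI availability requirement applies on both platforms.
    if not creator.in_meta_ai_region:
        return False
    if creator.platform == "facebook":
        return creator.followers >= FACEBOOK_MIN_FOLLOWERS
    if creator.platform == "instagram":
        return creator.is_public
    # Any other platform is outside the scope of the feature.
    return False
```

For example, a Facebook creator with 999 followers would not qualify under this sketch, while a public Instagram account with the same follower count would, provided Meta AI is available in its region.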

The workflow is simple. Before posting a short video, creators select the "Use Meta AI to Translate Your Voice" option and then choose whether to enable translation and lip-syncing. Clicking "Share Now" then generates the translated version automatically. No technical setup is required.

Creators keep control over their content. They can preview the translation and lip-sync results before publishing and can turn off either option at any time. Rejecting a translation does not affect the publication or distribution of the original video.

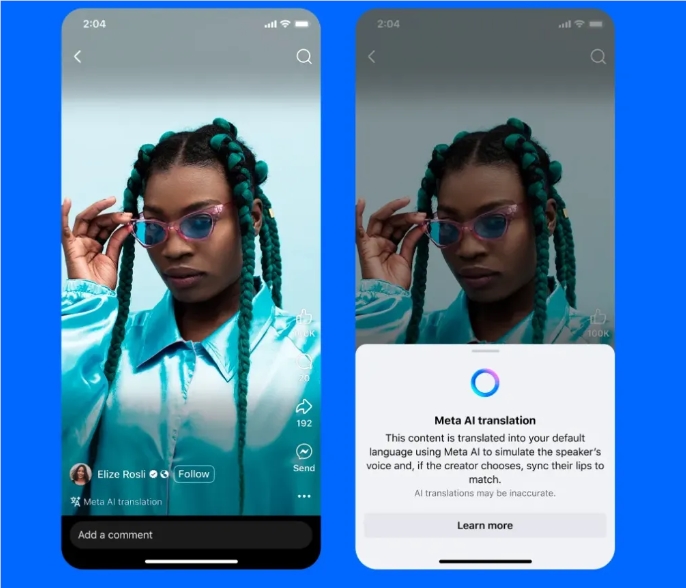

The viewer experience has been considered as well. Translated videos carry a clear "Translated by Meta AI" label at the bottom of the video, so the source of the audio is transparent. Viewers who do not want translations in a particular language can disable them in the settings menu.

Creators also get new analytics. A newly added metric breaks down views by language, showing how translated content performs with each language audience. Creators can use this data to shape their content strategy and grow an international audience.

To improve translation quality, Meta recommends that creators face the camera directly, speak clearly, avoid covering their mouths, and minimize background noise or music. The system currently supports up to two speakers, who should avoid talking over each other so the translation stays accurate.

In addition to automatic AI translation, Facebook creators can upload voiceovers manually. Through the "Subtitles and Translation" feature in Meta Business Suite, creators can upload up to 20 custom voiceover tracks for a single short video, extending their reach beyond English and Spanish speakers. These tracks can be added before or after posting, which gives creators more flexibility.

Adam Mosseri, head of Instagram, explained the value of this feature by saying: "We believe there are many excellent creators who have potential audiences with different language backgrounds. If we can help creators connect with audiences who speak other languages, breaking through cultural and language barriers, it will help them expand their follower base and gain greater value from the Instagram platform."

The launch comes as Meta restructures its AI division. According to multiple reports, Meta is reorganizing its AI team into four core areas: research, superintelligence, product development, and infrastructure. The voice translation release shows Meta's progress in turning its AI work into shipped products.