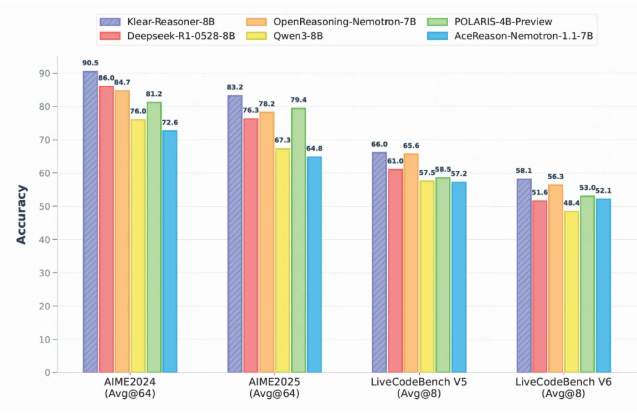

In the race among large language models, mathematical and code reasoning have become key measures of capability. Kuaishou's recently released Klear-Reasoner, built on Qwen3-8B-Base, performs strongly across several widely used benchmarks. On AIME 2024 its accuracy exceeds 90%, making it a leader among models of its size.

Klear-Reasoner's success stems from its use of GPPO (Gradient-Preserving Clipping Policy Optimization), an algorithm that keeps training stable while significantly improving the model's ability to explore. Traditional clipping limits how far each update can move the model, which keeps training stable, but it also discards important information: tokens whose probability ratio falls outside the clipping range contribute no gradient at all, and the model becomes conservative. GPPO instead lets every token's gradient take part in backpropagation in a "gentle", bounded way, preserving room for exploration and helping the model correct its errors faster.
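To make the contrast concrete, here is a minimal sketch in plain Python of the per-token gradient that each scheme sends back to a token's log-probability. The standard PPO clipped surrogate is well established; the gradient-preserving variant is our own assumption of how "letting clipped gradients through gently" can be realized: the clipped value is written as `bound * r / stop_grad(r) * A`, so the forward value is unchanged but the gradient becomes `bound * A` instead of zero. The function names and the `eps`, `eps_low`, `eps_high` values are hypothetical and not taken from the Klear code.

```python
import math


def ppo_clip_grad_coef(logp_new, logp_old, advantage, eps=0.2):
    """d/d(logp_new) of the standard PPO clipped surrogate for one token.

    Surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A), with r = exp(logp_new - logp_old).
    Since dr/d(logp_new) = r, the unclipped branch gives r * A; the clipped branch
    is a constant, so its gradient is 0 and the token's signal is discarded.
    """
    r = math.exp(logp_new - logp_old)
    clipped = (advantage > 0 and r > 1 + eps) or (advantage < 0 and r < 1 - eps)
    return 0.0 if clipped else r * advantage


def gradient_preserving_grad_coef(logp_new, logp_old, advantage,
                                  eps_low=0.2, eps_high=0.2):
    """Hypothetical gradient-preserving clip (an assumption, not the official GPPO code).

    For clipped tokens the loss value is still bound * A, but written as
    bound * r / stop_grad(r) * A, whose gradient w.r.t. logp_new is bound * A:
    nonzero, yet capped by the clip bound.
    """
    r = math.exp(logp_new - logp_old)
    if advantage > 0 and r > 1 + eps_high:
        return (1 + eps_high) * advantage
    if advantage < 0 and r < 1 - eps_low:
        return (1 - eps_low) * advantage
    return r * advantage


tokens = [
    # (logp_new, logp_old, advantage)
    (-1.0, -1.0, 0.5),   # ratio 1.0: inside the clip range
    (-0.5, -1.0, 1.0),   # ratio ~1.65 with positive advantage: clipped above
    (-2.0, -1.0, -1.0),  # ratio ~0.37 with negative advantage: clipped below
]
for lp_new, lp_old, adv in tokens:
    print(round(ppo_clip_grad_coef(lp_new, lp_old, adv), 3),
          round(gradient_preserving_grad_coef(lp_new, lp_old, adv), 3))
# 0.5 0.5
# 0.0 1.2
# 0.0 -0.8
```

In an autograd framework the same effect would come from a stop-gradient (detach) inside the loss; the scalar version simply makes the difference visible: clipped tokens receive zero gradient under PPO but a bounded, nonzero one under this sketch.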

Across benchmarks, Klear-Reasoner outperforms other open-source models of the same size. It scores 90.5% on AIME 2024 and 83.2% on AIME 2025. The Klear team has also published a detailed account of its training process, covering key practices such as prioritizing data quality, retaining incorrect responses on difficult samples, and using soft rewards to improve learning efficiency.
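The data practice described here (drop incorrect responses, but keep them on difficult samples) can be sketched as a simple filter. Everything in it is hypothetical: the `Sample` fields, the use of a sampled pass rate as the difficulty signal, and the `hard_threshold=0.3` cutoff are illustrative assumptions, not the team's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    prompt: str
    response: str
    is_correct: bool   # assumed: final answer already checked against a reference
    pass_rate: float   # hypothetical difficulty proxy: share of sampled answers that were correct


def filter_sft_samples(samples, hard_threshold=0.3):
    """Keep every correct response; keep incorrect ones only for hard prompts.

    hard_threshold is a hypothetical cutoff: prompts with pass_rate below it count as hard.
    """
    return [s for s in samples if s.is_correct or s.pass_rate < hard_threshold]


data = [
    Sample("easy-1", "...", True, 0.9),
    Sample("easy-2", "...", False, 0.9),  # wrong answer on an easy prompt: dropped
    Sample("hard-1", "...", False, 0.1),  # wrong answer on a hard prompt: retained
]
print([s.prompt for s in filter_sft_samples(data)])  # ['easy-1', 'hard-1']
```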

Notably, the Klear team's experiments showed that the quality of data sources often matters more than their number. In the SFT phase, filtering out incorrect data and concentrating on high-quality samples improves training efficiency. The team also reports that in the reinforcement learning phase a soft reward works better than a hard reward and makes learning more stable.
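The article does not spell out the reward formulas. One common way to make a reward "soft", assumed here for a code task checked by unit tests, is to give partial credit for the fraction of test cases passed instead of an all-or-nothing score. The sketch below compares the two; the function names are hypothetical.

```python
def hard_reward(passed: int, total: int) -> float:
    """Binary reward: 1.0 only if every test case passes."""
    return 1.0 if total > 0 and passed == total else 0.0


def soft_reward(passed: int, total: int) -> float:
    """Graded reward (assumed definition): fraction of test cases passed."""
    return passed / total if total > 0 else 0.0


for passed in (0, 3, 5):
    print(passed, hard_reward(passed, 5), soft_reward(passed, 5))
# 0 0.0 0.0
# 3 0.0 0.6
# 5 1.0 1.0
```

Under the hard reward, a solution that passes 3 of 5 tests looks identical to one that passes none, so the policy gets no signal to improve; the soft reward tells them apart, which is one plausible reason for the stability gain the team reports.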

The release of Klear-Reasoner not only demonstrates Kuaishou's technological progress in the AI field, but also provides a reproducible path for the training of reasoning models. This achievement offers valuable experience and insights for future research and development.

Hugging Face: https://huggingface.co/Suu/Klear-Reasoner-8B

GitHub: https://github.com/suu990901/KlearReasoner/tree/main

Key points:

🌟 Klear-Reasoner exceeds 90% accuracy on mathematical reasoning (AIME 2024), making it a leader among 8B models.

🧠 The GPPO algorithm improves the model's exploration by addressing a hidden weakness of traditional clipping, which discards useful gradient information.

📈 By focusing on data quality and training strategy, the Klear team's work offers an effective training recipe for reasoning models.