As artificial intelligence technology advances, building large language model (LLM) agents that can complete complex tasks independently has become an active research topic.

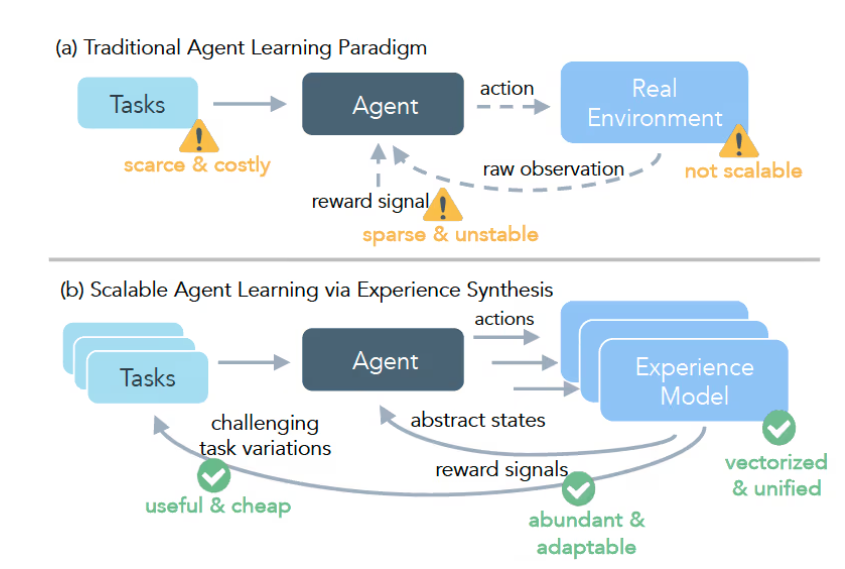

For these agents to learn as humans do, through exploration and interaction with the environment, researchers need a powerful, unified reinforcement learning (RL) framework. However, current research lacks an effective method for training agents from scratch in diverse real-world environments without relying on supervised fine-tuning (SFT).

To address this issue, the Seed research team at ByteDance introduced a new framework called AgentGym-RL, which focuses on training LLM agents through reinforcement learning to enable multi-turn interactive decision-making. The framework features a modular and decoupled architecture, offering high flexibility and scalability. AgentGym-RL covers multiple real-world scenarios and supports mainstream reinforcement learning algorithms, helping agents significantly improve their decision-making capabilities.
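To make "multi-turn interactive decision-making" with a decoupled environment concrete, here is a minimal sketch of a rollout loop. It is not AgentGym-RL's actual API: the `Environment` protocol, the `rollout` function, and the toy `CountingEnv` are all hypothetical names chosen for illustration, and the policy is a plain function standing in for an LLM.

```python
from typing import Callable, List, Protocol, Tuple


class Environment(Protocol):
    """Hypothetical environment interface, kept separate from the agent."""

    def reset(self) -> str: ...

    def step(self, action: str) -> Tuple[str, float, bool]: ...


def rollout(
    env: Environment,
    policy: Callable[[List[str]], str],
    max_turns: int,
) -> Tuple[List[str], float]:
    """Run one multi-turn episode and return (history, total reward)."""
    history = [env.reset()]          # first observation
    total_reward = 0.0
    for _ in range(max_turns):
        action = policy(history)     # the agent decides from the full history
        observation, reward, done = env.step(action)
        history += [action, observation]
        total_reward += reward
        if done:
            break
    return history, total_reward


class CountingEnv:
    """Toy environment (assumption): succeeds after three 'go' actions."""

    def __init__(self) -> None:
        self.count = 0

    def reset(self) -> str:
        self.count = 0
        return "start"

    def step(self, action: str) -> Tuple[str, float, bool]:
        if action == "go":
            self.count += 1
        done = self.count >= 3
        return f"count={self.count}", (1.0 if done else 0.0), done
```

Because the loop only depends on `reset` and `step`, a different scenario can be swapped in without changing the agent or the training code, which is the general idea behind a decoupled design.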

To further improve training, the research team also proposed a training method called ScalingInter-RL. The method adjusts the number of interactions allowed per episode in stages: early on, a small number of interactions keeps agents focused on mastering basic skills, and the limit is then raised gradually to encourage more diverse problem-solving strategies. Balancing exploration and exploitation in this way helps agents learn stably and keep making sound decisions on complex tasks.
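The staged adjustment can be pictured as a simple lookup from training step to interaction limit. The sketch below is only an illustration of that idea: the `STAGES` values and the `max_turns` function are assumptions made up for this example, not the schedule or code used by the research team.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical schedule: (training step where the stage begins, max interactions).
# These numbers are illustrative only and do not come from the research team.
STAGES: List[Tuple[int, int]] = [(0, 5), (100, 10), (200, 15)]


def max_turns(step: int, stages: List[Tuple[int, int]] = STAGES) -> int:
    """Return the interaction limit in effect at a given training step."""
    starts = [start for start, _ in stages]
    # Index of the last stage whose start is <= step (clamped to the first stage).
    index = max(bisect_right(starts, step) - 1, 0)
    return stages[index][1]
```

A training loop would call `max_turns(step)` before each batch of rollouts and pass the result to the environment loop as the per-episode limit, so that early training uses short episodes and later training allows longer ones.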

In the experiments, the researchers used Qwen2.5-3B and Qwen2.5-7B as base models to evaluate AgentGym-RL and ScalingInter-RL in five different scenarios. The results showed that agents trained with AgentGym-RL outperformed multiple commercial models across 27 tasks. The research team plans to open-source the entire AgentGym-RL framework, including code and datasets, to support more researchers in developing intelligent agents.

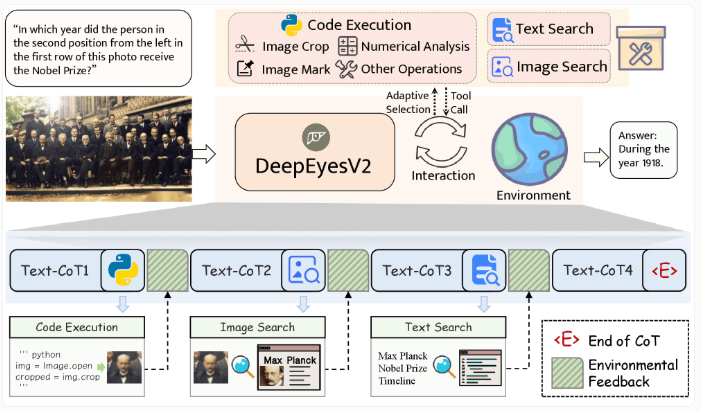

The AgentGym-RL framework covers a range of scenarios, including web navigation, deep search, digital games, embodied tasks, and scientific experiments. To complete complex tasks in these scenarios, agents need strong decision-making skills and the ability to adapt.

Project: https://agentgym-rl.github.io/

Key Points:

🌐 The AgentGym-RL framework offers a new approach to training large language model agents through reinforcement learning, enhancing their decision-making capabilities on complex tasks.

🔄 The ScalingInter-RL training method balances exploration and exploitation during training by adjusting the number of interactions in stages.

🏆 Experimental results show that the AgentGym-RL framework significantly improves agent performance, surpassing multiple commercial models and reaching capabilities comparable to top proprietary large models.