The open-source community recently gained LLaVA-OneVision-1.5, a new multimodal model and a notable step forward for openly reproducible vision-language training. The LLaVA (Large Language and Vision Assistant) series has been in development for two years, evolving from simple image-text alignment models into a comprehensive framework that handles multiple input types, including images and videos.

The core aim of LLaVA-OneVision-1.5 is to provide an open, efficient, and reproducible training framework that lets users build high-quality vision-language models. Training is divided into three stages. In the first stage, language-image alignment pre-training, the model learns to map visual features into the word-embedding space of the language model.
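
To make the alignment idea concrete, here is a minimal sketch in plain Python. It assumes a single linear layer as the projector and toy dimensions; the function names (`make_linear`, `project`, `build_input_sequence`) and sizes are hypothetical and are not taken from the LLaVA-OneVision-1.5 codebase, whose actual projector may differ.

```python
import random


def make_linear(in_dim, out_dim, seed=0):
    """Return a randomly initialised weight matrix (out_dim x in_dim) and a zero bias."""
    rng = random.Random(seed)
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def project(patch_features, weight, bias):
    """Map each visual patch feature into the language model's embedding space."""
    return [
        [sum(w * x for w, x in zip(row, feat)) + b for row, b in zip(weight, bias)]
        for feat in patch_features
    ]


def build_input_sequence(visual_tokens, text_embeddings):
    """Place the projected visual tokens in front of the text token embeddings."""
    return visual_tokens + text_embeddings


# Toy sizes (assumptions for illustration only).
VISION_DIM, TEXT_DIM = 4, 6
weight, bias = make_linear(VISION_DIM, TEXT_DIM)

# Three image patches from a hypothetical vision encoder.
patches = [[0.5, -1.0, 0.25, 2.0], [1.0, 0.0, -0.5, 0.3], [0.0, 0.0, 0.0, 0.0]]
# Two text tokens already embedded by the language model.
text = [[0.0] * TEXT_DIM for _ in range(2)]

visual_tokens = project(patches, weight, bias)
sequence = build_input_sequence(visual_tokens, text)
print(len(sequence), len(sequence[0]))
```

After projection, visual tokens and text tokens share one dimensionality, so the language model can consume them as a single sequence. In an alignment stage, the projector weights are what training adjusts.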

The second stage, "high-quality knowledge learning," trains the model on 85 million samples, injecting a large amount of visual and knowledge information and significantly strengthening its capabilities. The third stage, visual instruction fine-tuning, uses a carefully designed dataset so that the model can follow a wide range of complex visual instructions.

For efficiency, the team adopted an offline parallel data packing method, which groups multiple short samples into one training sequence ahead of time so that less computation is wasted on padding. On the 85 million samples, this packing achieved a compression ratio of up to 11x, and training could be completed in just 3.7 days. LLaVA-OneVision-1.5 also uses RICE-ViT as its visual encoder. RICE-ViT is region-aware, which makes it especially well suited to processing text in documents.
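
The packing idea can be illustrated with a short sketch. It uses greedy first-fit-decreasing bin packing, which is a common generic technique and not necessarily the algorithm the team used; the function names and the sample lengths are hypothetical. The compression ratio is assumed here to mean the number of original samples divided by the number of packed sequences.

```python
def pack_samples(lengths, max_len):
    """Pack sample lengths into sequences of capacity max_len (first-fit decreasing).

    Returns a list of bins, each a list of sample indices.
    """
    bins = []  # sample indices per packed sequence
    free = []  # remaining token capacity per packed sequence
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"sample {i} has {n} tokens, more than max_len={max_len}")
        for b, room in enumerate(free):
            if n <= room:
                # Sample fits into an existing packed sequence.
                bins[b].append(i)
                free[b] -= n
                break
        else:
            # No existing sequence has room, so open a new one.
            bins.append([i])
            free.append(max_len - n)
    return bins


def compression_ratio(lengths, bins):
    """Original sample count divided by packed sequence count."""
    return len(lengths) / len(bins)


# Hypothetical token counts for ten samples.
lengths = [300, 120, 700, 64, 512, 900, 48, 256, 180, 16]
bins = pack_samples(lengths, max_len=1024)
ratio = compression_ratio(lengths, bins)
print(len(bins), ratio)
```

Because the packing depends only on sample lengths, it can run once before training (offline) and be split across workers by sharding the dataset. A real pipeline would also need attention masks that keep packed samples from attending to each other, which this sketch omits.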

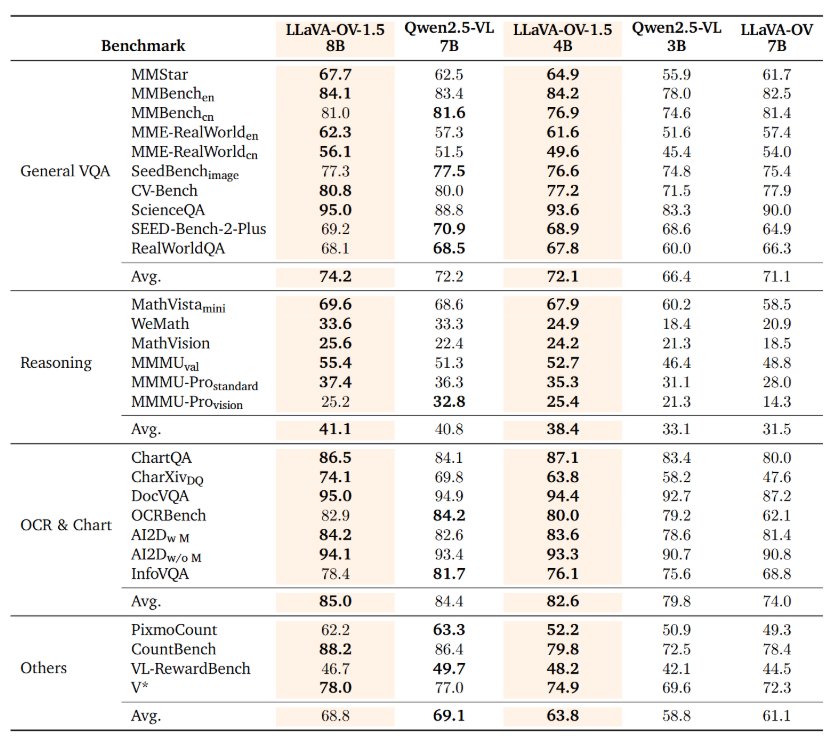

Data is the foundation of model capability. The pre-training dataset of LLaVA-OneVision-1.5 is diverse and wide-ranging, and it is built with a "concept-balanced" sampling strategy so that rare concepts are not drowned out by common ones, which supports balanced performance across tasks. The model performs strongly on benchmarks: according to the team's report, the 8-billion-parameter version outperforms Qwen2.5-VL-7B on 18 of the 27 benchmarks evaluated.
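
One simple way to picture concept-balanced sampling is inverse-frequency weighting, sketched below. This is an illustrative assumption, not the team's published procedure: the concept tags, the weighting formula, and the function names are all hypothetical.

```python
import random
from collections import Counter


def concept_balanced_weights(sample_concepts):
    """Give each sample a probability that shrinks as its concepts get more common."""
    # Count how many samples mention each concept.
    freq = Counter(c for concepts in sample_concepts for c in set(concepts))
    weights = []
    for concepts in sample_concepts:
        unique = set(concepts)
        if unique:
            # Average inverse frequency over the sample's concepts.
            weights.append(sum(1.0 / freq[c] for c in unique) / len(unique))
        else:
            weights.append(0.0)
    total = sum(weights)
    if total == 0:
        raise ValueError("no sample carries any concept tag")
    return [w / total for w in weights]


def sample_indices(weights, k, seed=0):
    """Draw k sample indices with replacement according to the weights."""
    rng = random.Random(seed)
    return rng.choices(range(len(weights)), weights=weights, k=k)


# Hypothetical concept tags: "cat" is common, "chart" and "receipt" are rare.
sample_concepts = [["cat"], ["cat"], ["cat"], ["cat"], ["chart"], ["receipt", "cat"]]
weights = concept_balanced_weights(sample_concepts)
drawn = sample_indices(weights, k=1000)
print([round(w, 3) for w in weights])
```

In this toy setup the single "chart" sample receives a larger sampling weight than any individual "cat" sample, so rare concepts appear more often in the training mix than their raw frequency would allow.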

Project:

https://github.com/EvolvingLMMs-Lab/LLaVA-OneVision-1.5

https://huggingface.co/lmms-lab/LLaVA-OneVision-1.5-8B-Instruct

Key Points:

🌟 LLaVA-OneVision-1.5 is the latest open-source multimodal model, capable of handling multiple inputs such as images and videos.

📈 The training process is divided into three stages, aiming to efficiently enhance the model's visual and language comprehension abilities.

🏆 LLaVA-OneVision-1.5 performs strongly in benchmark tests, with the 8B version reported to surpass Qwen2.5-VL-7B on 18 of 27 benchmarks.