【AIbase Report】Amazon announced on Wednesday that it is developing AI-powered smart glasses for its delivery drivers, aiming to improve delivery efficiency and safety by "freeing up hands". The glasses help drivers complete tasks such as package scanning, route navigation, and delivery confirmation without repeatedly checking their phones.

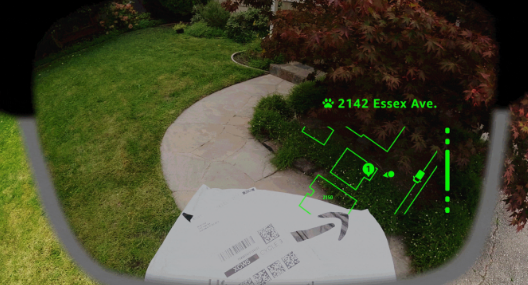

According to Amazon, the smart glasses combine AI sensing, computer vision, and cameras, and can display real-time information about road hazards, delivery tasks, and the surrounding environment. When a driver arrives at a delivery location, the glasses activate automatically, helping the driver quickly locate the right packages in the vehicle and navigate to the correct address. In multi-unit apartment complexes or complex commercial buildings, the glasses can also provide accurate indoor guidance.

The glasses pair with a controller worn on the delivery vest, which houses operation buttons, replaceable batteries, and an emergency assistance button. The glasses also support prescription lenses and automatic dimming, so they adjust to changing light conditions.

Amazon is currently field-testing the glasses in North America and plans to expand the rollout gradually after refining the technology. Future versions will also add a real-time defect detection feature that proactively alerts drivers when they deliver a package by mistake or encounter potential hazards (such as pets or low-light environments).

Notably, on the same day, Amazon also unveiled two other new technologies: "Blue Jay", a robotic arm that can work alongside warehouse staff, and Eluna, an AI tool that provides insights into warehouse operations. Together they further demonstrate the tech giant's broad push into smart logistics.