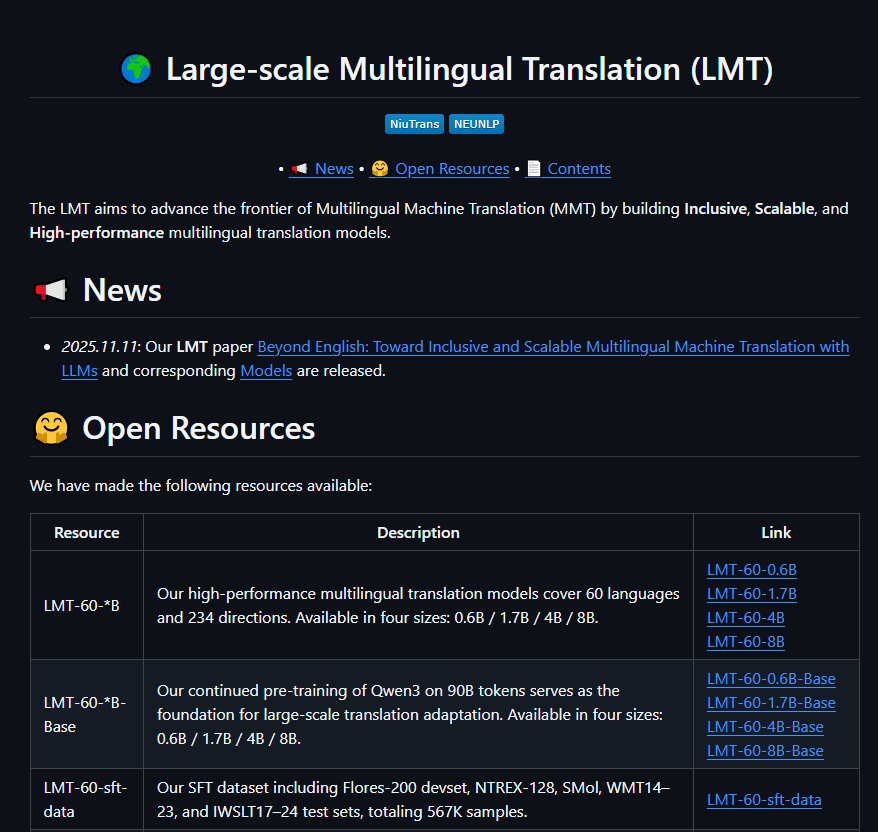

China's AI translation technology has reached a new milestone. The NiuTrans team at Northeastern University has open-sourced its latest large model, NiuTrans.LMT (Large-scale Multilingual Translation), which covers 60 languages and 234 translation directions. The model builds a global language bridge around Chinese and English as twin hubs, and it also delivers significant gains for 29 low-resource languages such as Tibetan and Amharic, a meaningful step toward global linguistic equality.

Dual-center architecture breaks the "English hegemony"

Unlike most translation models, which use English as the sole hub, NiuTrans.LMT adopts a Chinese-English dual-center design. It supports high-quality direct translation between Chinese and 58 other languages and between English and 59 other languages, avoiding the compounded loss of meaning introduced by pivot chains such as "Chinese → English → low-resource language". This design is particularly useful for direct communication between Chinese speakers and countries along the "Belt and Road", enabling cross-cultural exchange without an intermediary language.
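The 234 figure follows from the dual-hub layout: every direction has Chinese or English on at least one side. The short sketch below checks that arithmetic. The language codes other than `zh` and `en` are placeholders, and `hub_directions` is a hypothetical helper written for this illustration, not part of the NiuTrans code.

```python
from itertools import permutations


def hub_directions(languages, hubs):
    """Return all ordered (source, target) pairs that touch at least one hub."""
    return {
        (src, tgt)
        for src, tgt in permutations(languages, 2)
        if src in hubs or tgt in hubs
    }


# 60 languages in total: the two hubs plus 58 placeholder codes.
languages = ["zh", "en"] + [f"lang{i:02d}" for i in range(58)]

dual_hub = hub_directions(languages, {"zh", "en"})
english_only = hub_directions(languages, {"en"})

print(len(dual_hub))      # 234 = 2 * 59 (English) + 2 * 58 (Chinese, excluding zh<->en)
print(len(english_only))  # 118 = 2 * 59
```

Under this counting, an English-only hub would cover 118 directions, so adding Chinese as a second hub contributes the remaining 116.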

Three-tier language coverage balances efficiency and fairness

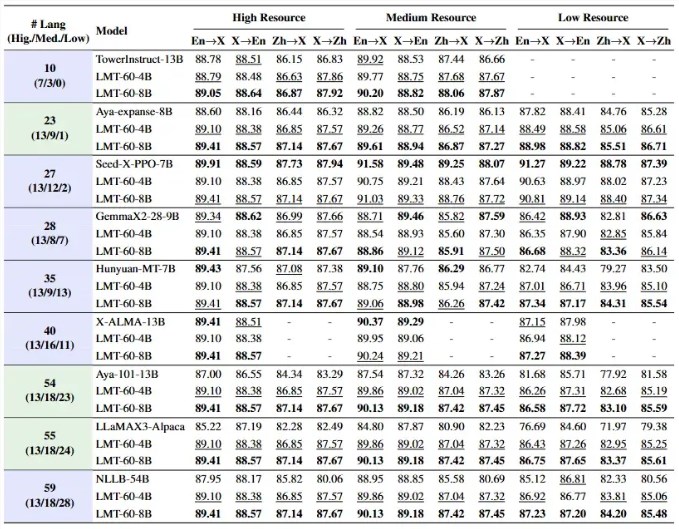

The model groups its 60 languages into three resource tiers:

13 high-resource languages (such as French, Arabic, and Spanish): translation fluency comparable to human level;

18 medium-resource languages (such as Hindi and Finnish): high accuracy in professional terminology and grammatical structure;

29 low-resource languages (including Tibetan, Swahili, and Bengali): data augmentation and transfer learning move these languages from "untranslatable" to "usable translation."

Two-stage training tops FLORES-200

NiuTrans.LMT performs strongly on the widely used multilingual benchmark FLORES-200, consistently ranking first among open-source models. The team attributes this to a two-stage training process:

Continued pre-training (CPT): the model learns from a balanced multilingual corpus of 90 billion tokens, so that low-resource languages are not drowned out by high-resource ones;

Supervised fine-tuning (SFT): integrating high-quality parallel corpora from FLORES-200 and WMT (567,000 samples covering 117 directions) to refine translation accuracy and stylistic consistency.
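The article does not say how the CPT corpus is balanced. As a generic illustration only, the sketch below contrasts sampling languages uniformly with sampling in proportion to raw corpus size. The corpus sizes and both function names are hypothetical, and this is not presented as the NiuTrans team's actual recipe.

```python
import random
from collections import Counter


def uniform_language_draws(corpus_sizes, num_draws, seed=0):
    """Pick the language of each training example uniformly, ignoring corpus size."""
    rng = random.Random(seed)
    languages = sorted(corpus_sizes)
    return Counter(rng.choice(languages) for _ in range(num_draws))


def proportional_language_draws(corpus_sizes, num_draws, seed=0):
    """Pick the language of each training example in proportion to corpus size."""
    rng = random.Random(seed)
    languages = sorted(corpus_sizes)
    weights = [corpus_sizes[lang] for lang in languages]
    return Counter(rng.choices(languages, weights=weights, k=num_draws))


# Hypothetical corpus sizes (millions of tokens), chosen only to show the skew.
toy_sizes = {"en": 1000, "zh": 800, "bo": 2}

uniform = uniform_language_draws(toy_sizes, num_draws=30_000)
proportional = proportional_language_draws(toy_sizes, num_draws=30_000)

print("uniform:", dict(uniform))
print("proportional:", dict(proportional))
```

With uniform draws, the small `bo` corpus gets roughly a third of the examples; with proportional draws it receives only a tiny fraction, which is the "overshadowing" effect that a balanced corpus is meant to avoid.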

Four open-source model sizes, from research to commercial use

To meet the needs of different scenarios, the team has released four model sizes at once: 0.6B, 1.7B, 4B, and 8B parameters, all available for free download on GitHub and Hugging Face. The lightweight versions can run on consumer-grade GPUs and are suitable for mobile deployment; the 8B version targets enterprise-level, high-precision translation scenarios and supports API integration and private deployment.

AIbase believes that the release of NiuTrans.LMT is not only a technical achievement but also a practical step toward "protecting linguistic diversity." When AI can accurately translate Tibetan poetry, African proverbs, or ancient Nordic texts, technology takes on real human warmth. Northeastern University's open-source initiative is laying the groundwork for a digital future without language barriers.

Project address: https://github.com/NiuTrans/LMT