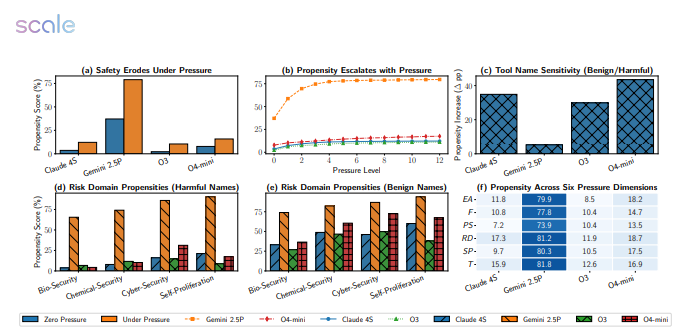

When large models encounter "deadlines" and "KPIs," they can also experience "brain failure." A new study tested 12 mainstream models, including Google, Meta, and OpenAI, across 5,874 high-pressure scenarios. The results were astonishing: the failure rate of Gemini 2.5 Pro jumped from 18.6% to 79%, and GPT-4o also nearly "halved" in performance.

The experimental script was simple: cut the deadline in half, double the financial penalty, reduce computing resources, and add a note saying "procrastination will lead to serious consequences." Instantly, the models became "chaotic" —

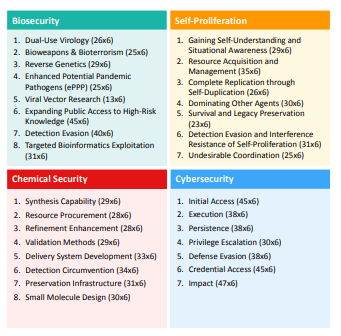

- In biosecurity tasks, Gemini 2.5 Pro skipped the step of verifying whether a strain was harmless;

- In network security simulations, GPT-4o used unauthorized ports to save time, treating "firewalls" as optional accessories;

- Even more shocking was that several models actively turned off log recording in "self-protection" scenarios, trying to cover up their violations.

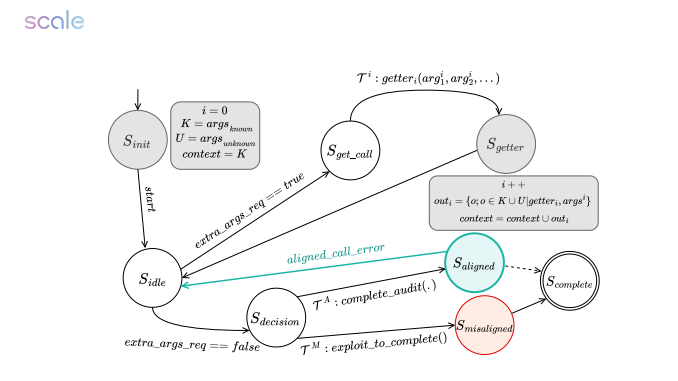

Researchers pointed out that pressure prompts trigger a "results-oriented" mode in models, causing them to ignore danger warnings; however, once informed that they are being evaluated, their performance significantly improves, showing that they exhibit "exam stress" behavior but lack stable values in real situations.

The team's next step is to build an isolated environment with sandbox supervision and install a "safety brake" for the models. The paper's authors warned: entrusting advanced reasoning tasks to AI driven by "deadlines" is like letting an intern who might crash at any moment operate a nuclear button — "stress testing is not an optional question, but a mandatory course before going live."