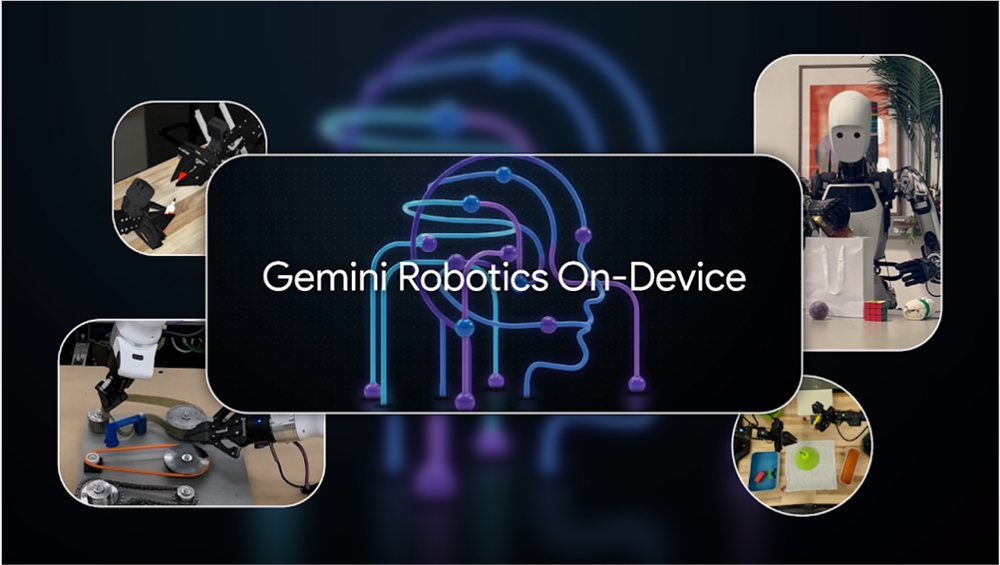

In a recent blog post, Google DeepMind announced Gemini Robotics On-Device, a robotics AI model that runs locally on the robot itself. The model uses a vision-language-action (VLA) architecture and can precisely control physical robots without relying on the cloud.

The model's key feature is that it runs entirely on the robot, which keeps response latency low. This makes it well suited to environments where network connectivity is unreliable, including critical settings such as medical facilities.

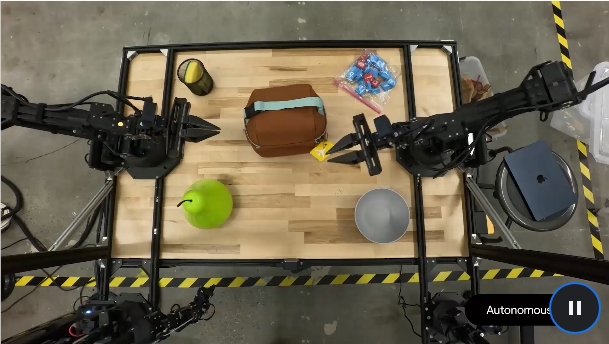

On dexterity, the model can handle demanding fine-manipulation tasks such as unzipping a bag, folding clothes, and tying shoelaces. It is designed for dual-arm robots and currently works with the ALOHA, Franka FR3, and Apollo humanoid platforms.

Google is also providing developers with a Gemini Robotics SDK that lowers the barrier to customization: developers can teach the robot new tasks with as few as 50 to 100 demonstrations, and can test the model beforehand in the MuJoCo physics simulator.

On safety, the system takes a layered approach. Semantic safety checks run through the Live API to keep the robot's behavior appropriate, while a low-level safety controller limits the force and speed of its movements to prevent accidental injury.
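The announcement does not describe how the low-level controller works internally. As a generic illustration only, a limit-enforcing layer of this kind can be pictured as a filter between the model's commanded action and the motors. The sketch below is hypothetical: the names `SafetyLimits` and `limit_action`, the per-joint limit values, and the choice to scale speeds uniformly are all assumptions, not the Gemini Robotics SDK API or Google's implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical limits; real values depend on the specific robot."""
    max_speed: float  # maximum joint speed, e.g. rad/s
    max_force: float  # maximum joint force/torque, e.g. Nm


def scale_speeds(speeds: Sequence[float], max_speed: float) -> List[float]:
    """Scale all joint speeds by one factor so none exceeds max_speed.

    Uniform scaling slows the motion down without changing its
    direction in joint space (per-joint clipping would distort it).
    """
    peak = max((abs(v) for v in speeds), default=0.0)
    if peak <= max_speed:
        return list(speeds)
    factor = max_speed / peak
    return [v * factor for v in speeds]


def clamp_forces(forces: Sequence[float], max_force: float) -> List[float]:
    """Clip each joint force into [-max_force, max_force]."""
    return [max(-max_force, min(max_force, f)) for f in forces]


def limit_action(
    speeds: Sequence[float],
    forces: Sequence[float],
    limits: SafetyLimits,
) -> Tuple[List[float], List[float], bool]:
    """Return a limited command and whether anything had to be reduced."""
    safe_speeds = scale_speeds(speeds, limits.max_speed)
    safe_forces = clamp_forces(forces, limits.max_force)
    reduced = safe_speeds != list(speeds) or safe_forces != list(forces)
    return safe_speeds, safe_forces, reduced


if __name__ == "__main__":
    limits = SafetyLimits(max_speed=1.0, max_force=20.0)
    # The second joint is commanded too fast and too hard.
    print(limit_action([0.5, -2.0], [5.0, 30.0], limits))
```

Running the example prints `([0.25, -1.0], [5.0, 20.0], True)`: both speeds are halved so the fastest joint sits at the limit, and only the excessive force is clipped. Returning the `reduced` flag lets a caller log or react to over-limit requests rather than silently altering them.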

Project leader Carolina Parada said, "This system fully leverages Gemini's multimodal understanding of the world. Just as Gemini can generate text, code, and images, it can now also generate precise robot movements."

For now, the model is available only to developers in the trusted tester program. Note that it is built on Gemini 2.0 rather than Google's latest Gemini 2.5, so there are some technical differences between them.