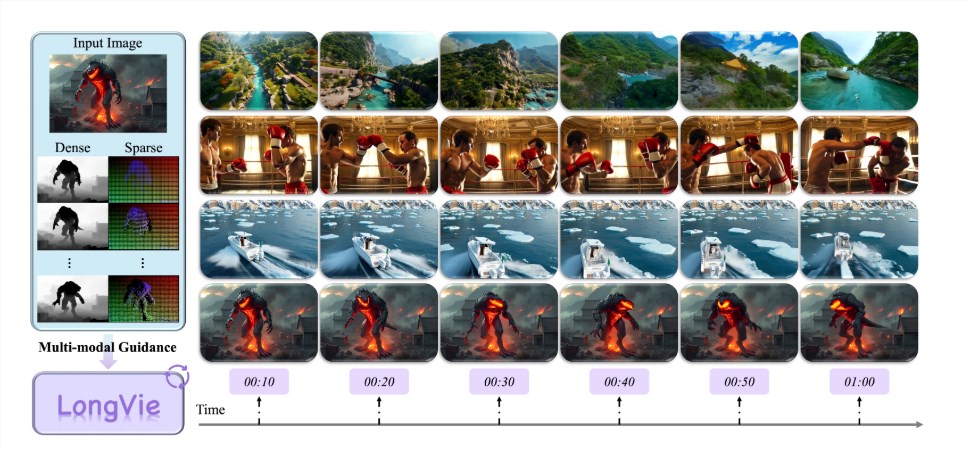

Over the past two years, video generation technology has made remarkable progress, especially in short-video creation. However, generating high-quality, stylistically consistent videos longer than one minute remains a significant challenge. To address this, the Shanghai Artificial Intelligence Laboratory, together with Nanjing University, Fudan University, Nanyang Technological University's S-Lab, and NVIDIA, has introduced LongVie, a framework that systematically tackles the core problems in this area.

LongVie aims to make long-video generation more controllable and consistent. The team found that existing video generation models run into two main problems when producing long videos: temporal inconsistency and visual degradation. The former shows up as mismatched details and content across the video, while the latter is a gradual loss of color fidelity and sharpness as the video grows longer.

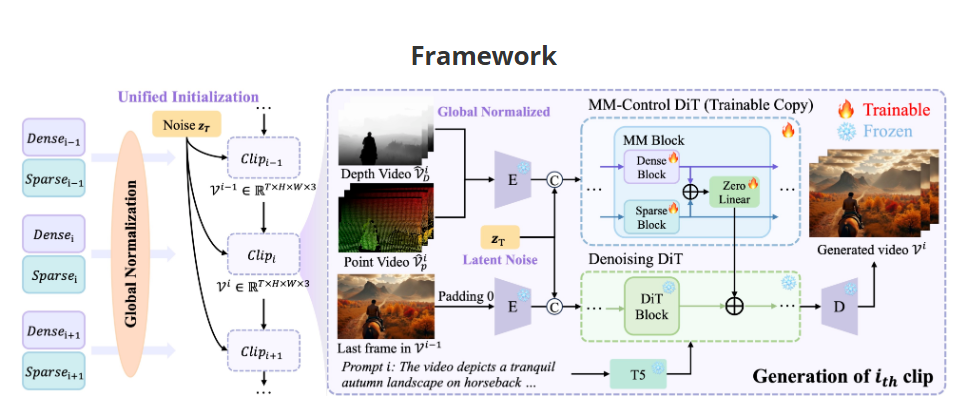

To address temporal inconsistency, LongVie works on two fronts: control signals and initial noise. First, the team proposed "global normalization of control signals": instead of normalizing the control signals within each segment independently, LongVie normalizes them across the entire video, so that a given value means the same thing in every segment and transitions between segments stay coherent. Second, they introduced "unified noise initialization," in which all segments start from the same initial noise, reducing visual drift between segments at its source.
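To make the two strategies concrete, here is a minimal Python sketch. It assumes each segment's control signal is a flat list of depth values and that normalization is plain min-max scaling; LongVie's actual normalization scheme and noise tensors are not described here and are likely more involved. All function names are hypothetical, and the "noise" is a flat list of Gaussian samples standing in for a diffusion model's initial latent.

```python
import random
from typing import List

# Hypothetical representation: one segment's control signal (e.g. depth), flattened.
Segment = List[float]


def normalize_per_segment(segments: List[Segment]) -> List[Segment]:
    """Baseline: min-max scale each segment using only its own value range."""
    out = []
    for seg in segments:
        lo, hi = min(seg), max(seg)
        span = (hi - lo) or 1.0  # guard against constant segments
        out.append([(v - lo) / span for v in seg])
    return out


def normalize_globally(segments: List[Segment]) -> List[Segment]:
    """Global normalization (assumed min-max): one range shared by the whole video."""
    all_values = [v for seg in segments for v in seg]
    lo, hi = min(all_values), max(all_values)
    span = (hi - lo) or 1.0
    return [[(v - lo) / span for v in seg] for seg in segments]


def independent_initial_noise(num_segments: int, size: int, seed: int = 0) -> List[List[float]]:
    """Baseline: every segment draws its own Gaussian starting noise."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(num_segments)]


def shared_initial_noise(num_segments: int, size: int, seed: int = 0) -> List[List[float]]:
    """Unified noise initialization: sample one noise vector and copy it to every segment."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in range(size)]
    return [list(noise) for _ in range(num_segments)]


if __name__ == "__main__":
    segments = [[1.0, 3.0, 5.0], [5.0, 7.5, 10.0]]
    print(normalize_per_segment(segments))  # depth 5.0 -> 1.0 in segment 0, 0.0 in segment 1
    print(normalize_globally(segments))     # depth 5.0 -> the same value (4/9) in both segments
```

With these two segments, a depth of 5.0 becomes 1.0 in the first segment but 0.0 in the second under per-segment scaling, whereas global scaling maps it to about 0.444 in both. That cross-segment agreement is what the global strategy is meant to provide; sharing one noise sample plays the analogous role for the starting point of generation.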

To counter visual degradation, LongVie uses multimodal, fine-grained control. A single control modality often cannot constrain generation reliably, so LongVie combines dense control signals (such as depth maps) with sparse ones (such as keypoints) and adds a degradation-aware training strategy, which helps the model preserve image quality and detail over long videos.
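The combination of dense and sparse controls can be illustrated with a hypothetical Python sketch. The article does not say how LongVie fuses the two modalities or what its degradation-aware training does internally, so the element-wise weighted sum, the probability `p_degrade`, and the random down-weighting of the dense branch are illustrative assumptions, not the paper's method. The sketch shows one plausible reading: randomly weakening the dense signal during training keeps the model from relying on it alone, so the sparse keypoints still carry useful constraints.

```python
import random
from typing import List


def fuse_controls(dense: List[float], sparse: List[float],
                  dense_weight: float = 1.0, sparse_weight: float = 1.0) -> List[float]:
    """Element-wise weighted sum of a dense control feature (e.g. from a depth map)
    and a sparse one (e.g. keypoints rasterized into a mostly-zero map)."""
    if len(dense) != len(sparse):
        raise ValueError("dense and sparse features must have the same length")
    return [dense_weight * d + sparse_weight * s for d, s in zip(dense, sparse)]


def sample_dense_weight(rng: random.Random, p_degrade: float = 0.5,
                        min_scale: float = 0.0, max_scale: float = 1.0) -> float:
    """Hypothetical degradation-style augmentation: with probability p_degrade,
    weaken the dense branch by drawing its weight from [min_scale, max_scale];
    otherwise keep it at full strength (1.0)."""
    if rng.random() < p_degrade:
        return rng.uniform(min_scale, max_scale)
    return 1.0


def training_fusion(dense: List[float], sparse: List[float], rng: random.Random,
                    p_degrade: float = 0.5) -> List[float]:
    """Training-time fusion: the dense weight is randomly degraded, the sparse weight is not."""
    return fuse_controls(dense, sparse, dense_weight=sample_dense_weight(rng, p_degrade))


def inference_fusion(dense: List[float], sparse: List[float]) -> List[float]:
    """Inference-time fusion: both modalities at full strength."""
    return fuse_controls(dense, sparse)
```

In this sketch only training applies the random down-weighting; at inference both branches contribute at full strength.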

The team also released LongVGenBench, which it describes as the first benchmark dataset designed specifically for controllable long-video generation. It contains 100 high-resolution videos, each longer than one minute, and is intended to support research and evaluation in this area. On both quantitative metrics and user studies, LongVie outperformed existing methods across multiple evaluations, was preferred by users, and achieved state-of-the-art (SOTA) results.

With the release of LongVie, long-video generation is poised to enter a new stage, giving creators more freedom to realize their ideas.

Project URL: https://vchitect.github.io/LongVie-project/