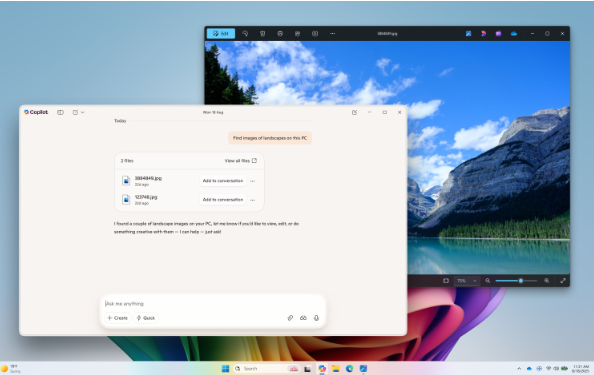

Liquid AI has officially launched LFM2-VL, a new series of vision-language foundation models optimized for low-latency, device-aware deployment. The release includes two efficient variants, LFM2-VL-450M and LFM2-VL-1.6B, and is aimed at running multimodal AI on smartphones, laptops, wearables, and embedded systems without sacrificing speed or accuracy.

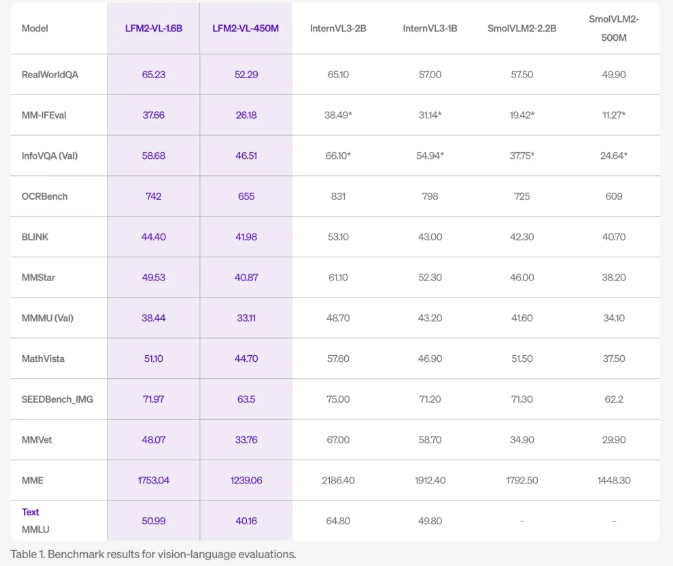

According to Liquid AI, LFM2-VL models deliver up to twice the GPU inference speed of existing vision-language models while maintaining competitive benchmark performance on tasks such as image description, visual question answering, and multimodal reasoning. The 450M-parameter version targets resource-constrained environments; the 1.6B-parameter version offers stronger capabilities yet remains lightweight enough for a single GPU or a high-end mobile device.

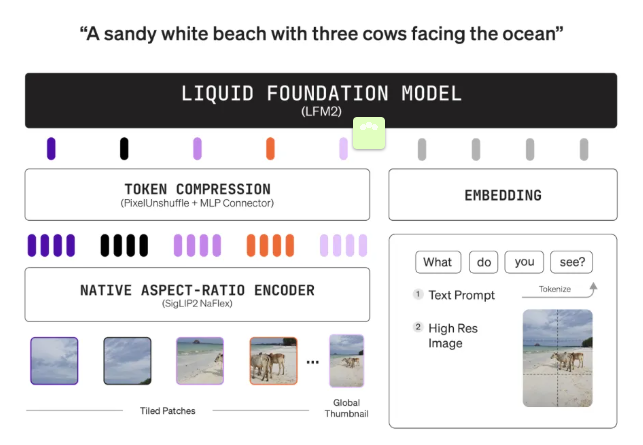

LFM2-VL adopts a modular architecture that combines a language model backbone (LFM2-1.2B or LFM2-350M), a SigLIP2 NaFlex vision encoder (400M or 86M parameters), and a multimodal projector. It uses a "pixel unshuffle" technique to reduce the number of image tokens, which speeds up processing. The models process images at their native resolution, up to 512×512 pixels, avoiding the distortion caused by rescaling. Larger images are split into non-overlapping 512×512 patches, preserving detail and aspect ratio. The 1.6B version also encodes a downscaled thumbnail of the entire image to provide global context.
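The tiling and token-reduction steps described above can be sketched in a few lines of plain Python. This is an illustrative sketch, not Liquid AI's implementation: the 512-pixel tile size comes from the article, while the encoder patch size of 16, the unshuffle factor of 2, and the rounding-up of border tiles are assumptions made for this example. The function names are hypothetical.

```python
import math

TILE = 512      # tile side in pixels, as stated in the article
PATCH = 16      # ASSUMPTION: vision-encoder patch size (not given in the article)
UNSHUFFLE = 2   # ASSUMPTION: pixel-unshuffle factor (not given in the article)


def plan_tiles(width, height, tile=TILE):
    """Return (columns, rows) of non-overlapping tiles covering the image.

    ASSUMPTION: partial tiles at the border are rounded up; the article
    does not say how they are handled.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return math.ceil(width / tile), math.ceil(height / tile)


def pixel_unshuffle(grid, factor=UNSHUFFLE):
    """Fold each factor x factor block of patch embeddings into one vector.

    `grid` is a list of rows and each cell is a list of floats. The output
    has factor**2 times fewer cells, each factor**2 times longer, so the
    token count drops without discarding any values.
    """
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid sides must be divisible by factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            merged = []
            for dr in range(factor):
                for dc in range(factor):
                    merged.extend(grid[r + dr][c + dc])
            out_row.append(merged)
        out.append(out_row)
    return out


def estimate_image_tokens(width, height):
    """Rough image-token count under the assumptions above.

    The thumbnail used by the 1.6B model is not counted because the
    article does not give its size.
    """
    cols, rows = plan_tiles(width, height)
    if cols * rows == 1:
        # Small image: encoded at native resolution, no tiling needed.
        side_w = math.ceil(math.ceil(width / PATCH) / UNSHUFFLE)
        side_h = math.ceil(math.ceil(height / PATCH) / UNSHUFFLE)
        return side_w * side_h
    per_tile = (TILE // PATCH // UNSHUFFLE) ** 2
    return cols * rows * per_tile
```

Under these assumptions, a full 512×512 tile yields 32×32 patches, which pixel unshuffle folds into 16×16 = 256 tokens; a 1024×1024 image needs four such tiles.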

LFM2-VL lets users tune the trade-off between speed and quality at inference time to match device capabilities and application needs. Training proceeds in stages: pre-training, joint training that fuses vision and language capabilities, and finally fine-tuning focused on image understanding, using approximately 100 billion multimodal tokens.

In public benchmarks, LFM2-VL performs comparably to models such as InternVL3 and SmolVLM2 while using less memory and running faster, which makes it well suited to edge and mobile applications. Both models are open-weight and available for download on Hugging Face for research and commercial use; large enterprises must contact Liquid AI to obtain a commercial license. The models integrate with Hugging Face Transformers and support quantization to further improve efficiency on edge hardware.
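As a rough illustration of the Transformers integration, the sketch below loads a checkpoint and asks a question about a local image. It is a minimal example under stated assumptions, not an official recipe: the repository ID `LiquidAI/LFM2-VL-450M` is inferred from the model name and the collection link, the helper names `build_conversation` and `describe_image` are hypothetical, and a recent `transformers` release plus `torch` and `Pillow` are assumed to be installed. Check the model card for the supported versions and recommended generation settings.

```python
def build_conversation(image, question):
    """Build a single-turn chat message list with one image and one text part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image},
                {"type": "text", "text": question},
            ],
        }
    ]


def describe_image(image_path, question="What is in this image?",
                   model_id="LiquidAI/LFM2-VL-450M", max_new_tokens=64):
    """Run one image + question through the model and return the decoded text.

    ASSUMPTION: `model_id` is inferred from the article, not confirmed by it.
    """
    # Imported lazily so build_conversation works without these packages.
    from PIL import Image
    from transformers import AutoModelForImageTextToText, AutoProcessor

    processor = AutoProcessor.from_pretrained(model_id)
    model = AutoModelForImageTextToText.from_pretrained(model_id)

    image = Image.open(image_path).convert("RGB")
    inputs = processor.apply_chat_template(
        build_conversation(image, question),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The decoded string contains the prompt followed by the model's answer.
    return processor.batch_decode(output_ids, skip_special_tokens=True)[0]
```

A call such as `describe_image("photo.jpg")` (with a hypothetical local file) downloads the weights on first use and runs on CPU by default; moving the model and inputs to a GPU, or loading a quantized variant, is left out to keep the sketch short.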

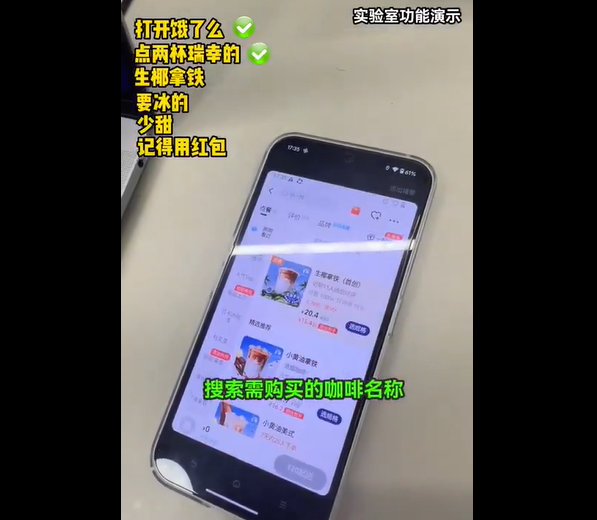

LFM2-VL aims to help developers and enterprises deploy multimodal AI on-device quickly, accurately, and efficiently, reducing reliance on the cloud and enabling new applications in robotics, the Internet of Things, smart cameras, and mobile assistants.

Hugging Face: https://huggingface.co/collections/LiquidAI/lfm2-vl-68963bbc84a610f7638d5ffa

Key Points:

🌟 LFM2-VL models deliver up to twice the GPU inference speed of existing vision-language models and target a wide range of devices.

🖼️ Images are processed at native resolution up to 512×512 pixels; larger images are split into non-overlapping patches to preserve detail.

🚀 Both models are open-weight and available on Hugging Face for research and commercial use.