On August 20, 2025, ByteDance's Seed team released Seed-OSS, a series of open-source large language models. The series is designed for internationalization (i18n) use cases and emphasizes long-context understanding, strong reasoning, and flexible, developer-friendly features.

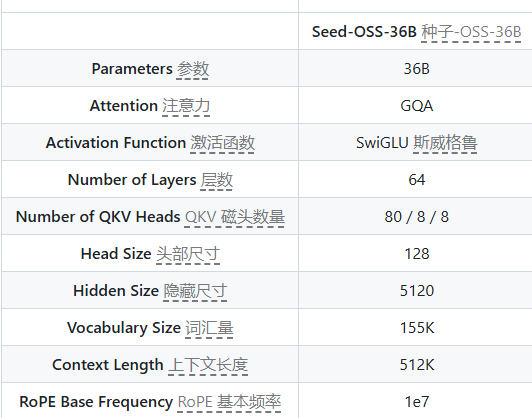

The Seed-OSS models are built on a widely used causal language model architecture that combines rotary position embeddings (RoPE), grouped-query attention (GQA), RMSNorm, and the SwiGLU activation function. The newly released Seed-OSS-36B has 36 billion parameters and supports a context length of 512K tokens. Although it was trained on only 12 trillion tokens, it performs well on several widely used benchmarks.
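To see why grouped-query attention matters at a 512K context, the rough sketch below estimates the size of the key/value cache for a single sequence. The layer count, head counts, head dimension, and 2-byte precision are illustrative assumptions, not figures from this article, so check the model's published configuration before relying on them.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


GIB = 1024 ** 3

# All values below are assumptions chosen for illustration.
SEQ_LEN = 512 * 1024   # "512K" read as 524,288 tokens
N_LAYERS = 64
N_QUERY_HEADS = 80     # what the cache would need with one KV head per query head
N_KV_HEADS = 8         # grouped-query attention shares each KV head across queries
HEAD_DIM = 128

gqa_gib = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_KV_HEADS, HEAD_DIM) / GIB
mha_gib = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_QUERY_HEADS, HEAD_DIM) / GIB

print(f"GQA cache: {gqa_gib:.0f} GiB, full multi-head cache: {mha_gib:.0f} GiB")
```

Under these assumed values, the cache shrinks in proportion to the ratio of query heads to KV heads, which is what makes very long contexts more practical to serve.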

The series includes two base versions: Seed-OSS-36B-Base, which was trained with synthetic instruction data, and Seed-OSS-36B-Base-woSyn, which was trained without it. This gives developers a high-performance base model and gives researchers a variant whose results are not influenced by synthetic instruction data.

A key feature of the model is flexible control over the "thinking budget": users can adjust the length of the reasoning process to suit the task, which improves inference efficiency in practical applications. Seed-OSS is also specifically optimized for reasoning tasks, so its reasoning ability is strengthened while good general capabilities are maintained.
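As a minimal sketch of how a thinking budget might be set through Hugging Face `transformers`, the helper below forwards a `thinking_budget` value to the chat template. The `thinking_budget` keyword and the checkpoint name `ByteDance-Seed/Seed-OSS-36B-Instruct` are assumptions not stated in this article; confirm both against the project's quick start guide.

```python
from typing import Any, Dict, List


def build_inputs(tokenizer: Any, messages: List[Dict[str, str]], thinking_budget: int) -> Any:
    """Tokenize a chat while passing a reasoning-length budget to the template.

    Assumption: the model's chat template reads a `thinking_budget` variable.
    Extra keyword arguments to `apply_chat_template` are made available to
    the template when it is rendered.
    """
    return tokenizer.apply_chat_template(
        messages,
        tokenize=True,
        add_generation_prompt=True,
        return_tensors="pt",
        thinking_budget=thinking_budget,
    )


def run_demo() -> None:
    """Load the model and generate once; this downloads a very large checkpoint."""
    # Imported here so the helper above can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = "ByteDance-Seed/Seed-OSS-36B-Instruct"  # assumed checkpoint name
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")

    messages = [{"role": "user", "content": "How many primes are below 50?"}]
    inputs = build_inputs(tokenizer, messages, thinking_budget=512)
    outputs = model.generate(inputs.to(model.device), max_new_tokens=2048)
    print(tokenizer.decode(outputs[0]))
```

Calling `run_demo()` requires enough accelerator memory to hold the model; a smaller budget should shorten the reasoning portion of the output, while a larger one leaves more room for harder problems.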

At launch, the Seed team emphasized that Seed-OSS is suitable not only for academic research but also for a wide range of development work, including agentic tasks such as tool use and problem solving. According to the team's training and evaluation results, Seed-OSS achieves leading performance among open-source models on tasks such as knowledge question answering, mathematical reasoning, and coding.

For developers who want to try the models, the Seed team provides a detailed quick start guide. After installing the required dependencies with pip, users can download and run Seed-OSS. The team also supports multiple quantization methods that reduce memory usage and improve runtime efficiency.
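The effect of quantization on memory can be approximated with simple arithmetic. The sketch below counts weight storage only, ignoring activations, the KV cache, and any per-block quantization overhead; the bit widths are illustrative choices rather than a list of the methods the team supports.

```python
N_PARAMS = 36_000_000_000  # 36 billion parameters, as stated in the article


def weight_memory_gb(n_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Illustrative precisions: 16-bit weights versus two common quantized widths.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: about {weight_memory_gb(N_PARAMS, bits):.0f} GB of weights")
```

By this estimate the weights need roughly 72 GB at 16 bits, 36 GB at 8 bits, and 18 GB at 4 bits, which is the difference between needing several accelerators and fitting on one.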

In conclusion, the release of Seed-OSS is a significant contribution to the open-source community. The ByteDance Seed team hopes this series of high-performance language models will promote innovation in artificial intelligence and give developers and researchers a broader set of tools and resources.

Repository: https://github.com/ByteDance-Seed/seed-oss