According to media reports, Apple's research team has released an adapted version of the SlowFast-LLaVA model that performs strongly on long-video analysis tasks, in some cases outperforming models with more parameters. The release offers an efficient new approach to analyzing long video content.

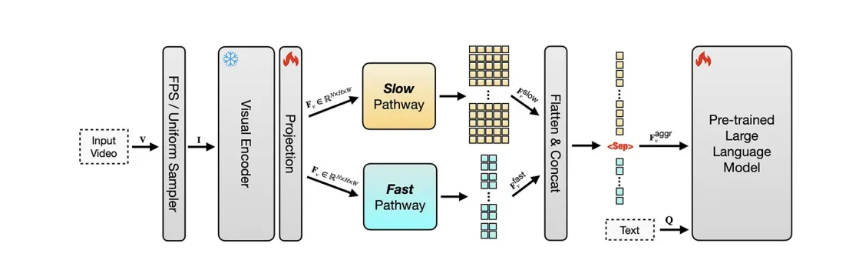

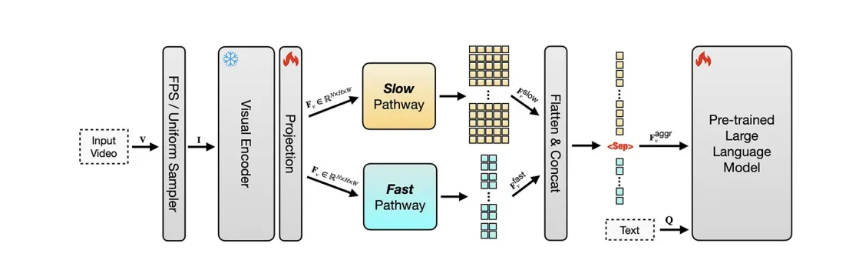

The model's core advantage is its dual-stream architecture. Processing a video frame by frame produces redundant information and can overflow the model's context window, and the dual-stream design addresses both problems. The slow stream samples frames at a low rate to capture static details and background information. The fast stream samples at a high rate to track rapid motion. Splitting the work this way makes video processing considerably more efficient.
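The toy sketch below illustrates why splitting the work saves context. It is not Apple's implementation. The sampling interval `slow_every`, the pooling strides, the 8×8 token grid per frame, and the function names are hypothetical values chosen for illustration. Each "token" is a single number rather than a feature vector. The slow stream keeps full spatial detail on a few frames. The fast stream keeps every frame but pools each one down to a handful of coarse tokens.

```python
from typing import List, Tuple

Grid = List[List[float]]  # one frame's H x W grid of (scalar) visual tokens


def avg_pool(grid: Grid, stride: int) -> Grid:
    """Average-pool a 2D grid with non-overlapping stride x stride windows.

    Trailing rows/columns that do not fill a whole window are dropped.
    """
    h, w = len(grid), len(grid[0])
    pooled = []
    for i in range(0, h - stride + 1, stride):
        row = []
        for j in range(0, w - stride + 1, stride):
            window = [grid[i + di][j + dj] for di in range(stride) for dj in range(stride)]
            row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


def slowfast_tokens(frames: List[Grid], slow_every: int = 4,
                    slow_stride: int = 1, fast_stride: int = 4) -> Tuple[List[float], List[float]]:
    """Hypothetical two-stream tokenizer returning (slow_tokens, fast_tokens)."""
    # Slow stream: low frame rate (every `slow_every`-th frame), fine spatial detail.
    slow = [tok for f in frames[::slow_every] for row in avg_pool(f, slow_stride) for tok in row]
    # Fast stream: every frame, but aggressively pooled to a few tokens each.
    fast = [tok for f in frames for row in avg_pool(f, fast_stride) for tok in row]
    return slow, fast


if __name__ == "__main__":
    # 32 frames, each an 8x8 grid of token values.
    frames = [[[float(i * 8 + j) for j in range(8)] for i in range(8)] for _ in range(32)]
    slow, fast = slowfast_tokens(frames)
    full = sum(len(f) * len(f[0]) for f in frames)
    print(len(slow), len(fast), len(slow) + len(fast), full)  # 512 128 640 2048
```

With these illustrative settings, the two streams together emit 640 tokens for 32 frames. Keeping every token of every frame would emit 2,048. The real model's frame rates and pooling choices may differ.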

SlowFast-LLaVA also performed strongly on long-video benchmarks, and its 1-billion-, 3-billion-, and 7-billion-parameter versions all achieved strong results. For example, the 1-billion-parameter model scored 56.6 on LongVideoBench in the General VideoQA category. The 7-billion-parameter version scored 71.5 in the Long-Form Video Understanding category. Beyond video, the model also performs well on image understanding tasks such as knowledge reasoning and OCR.

Despite these results, the model has limitations. Most notably, it accepts at most 128 input frames, so it may miss key information in longer videos. Apple's team says it will continue to explore memory-optimization techniques to improve performance.
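The report does not say how frames are selected when a video is longer than 128 frames. Assume uniform sampling, a common choice but an assumption here. The arithmetic below then shows why the cap can miss short events. The helper names `uniform_sample_indices` and `seconds_between_samples` are hypothetical, and so are the 30 fps frame rate and one-hour duration used in the example.

```python
from typing import List


def uniform_sample_indices(num_frames: int, max_frames: int = 128) -> List[int]:
    """Pick at most `max_frames` evenly spaced frame indices (assumed strategy)."""
    if num_frames <= max_frames:
        return list(range(num_frames))
    step = num_frames / max_frames
    # Take the frame at the centre of each of `max_frames` equal-length segments.
    return [int(step * k + step / 2) for k in range(max_frames)]


def seconds_between_samples(duration_s: float, fps: float, max_frames: int = 128) -> float:
    """Gap in seconds between consecutive sampled frames under uniform sampling."""
    total_frames = int(duration_s * fps)
    return duration_s / min(total_frames, max_frames)


if __name__ == "__main__":
    # A one-hour video at 30 fps has 108,000 frames.
    print(len(uniform_sample_indices(108_000)))  # 128
    print(seconds_between_samples(3600, 30))     # 28.125
```

Under these assumptions, the model sees roughly one frame every 28 seconds. An event shorter than that gap can fall entirely between two sampled frames.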

SlowFast-LLaVA was trained on publicly available datasets and has been open-sourced, giving the AI community new ideas and an efficient tool for long-video understanding.