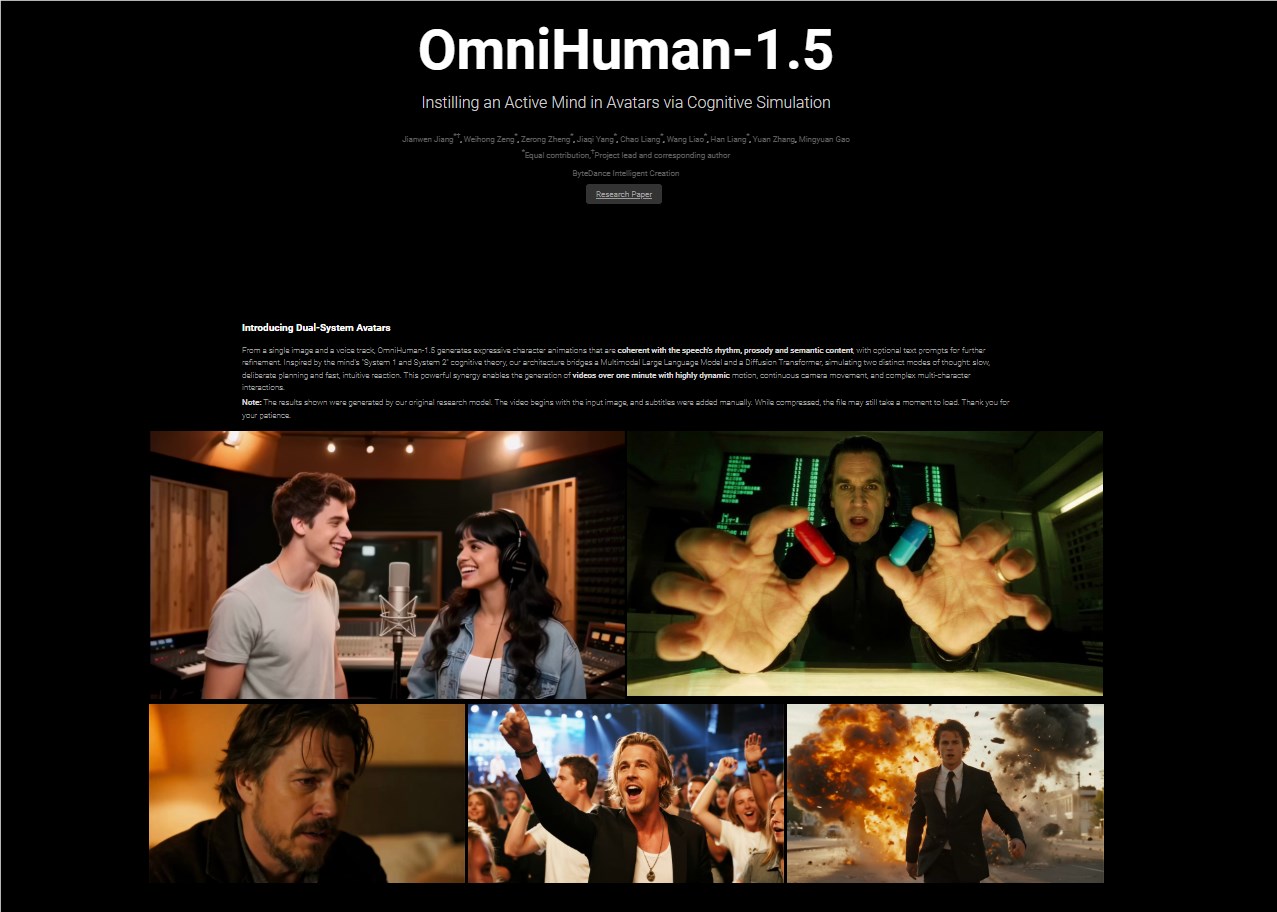

The ByteDance Digital Human team recently released OmniHuman-1.5, the much-anticipated successor to OmniHuman-1. The new multimodal digital-human model has drawn renewed attention to AI video generation. OmniHuman-1.5 produces highly realistic videos from a single image and an audio clip. It makes movements more coordinated and expressive and adds several new features. These open up new possibilities in film production, virtual streamers, education and training, and advertising and marketing.

Project page: https://omnihuman-lab.github.io/v1_5/

Technical upgrades: Significant improvements in realism and generalization

OmniHuman-1.5 builds on the core approach of its predecessor: it generates lifelike character videos from a single image and an audio track. Compared with the previous generation, it is markedly better in both realism and generalization. The ByteDance team attributes this to an optimized training strategy that mixes multimodal motion conditions. As a result, the generated videos look more natural in body movement, lip synchronization, and emotional expression. Whether the input is a real person or an animated character, OmniHuman-1.5 produces motion that matches the audio content, with high visual quality.

Breakthrough features: Two-person scenes and long video generation

One highlight of OmniHuman-1.5 is support for two-person audio driving. Most existing AI video generation tools are limited to a single speaker. OmniHuman-1.5, by contrast, generates video from two-person audio input for the first time. It captures the interactions and expressions between the characters, which makes it suitable for multi-character performances. The model can also generate videos longer than one minute. It uses inter-frame connection strategies to keep long videos continuous and each character's identity consistent. This meets more demanding needs such as speeches and music videos.

Emotional perception and text prompts: A smarter creative experience

OmniHuman-1.5 goes beyond mechanical motion generation: it perceives the emotion in the audio and expresses it in the video. For example, it automatically adjusts the character's facial expressions and body movements to match the tone and mood of the audio, making the result more expressive. A new text-prompt feature lets users further customize the video with text descriptions, such as a scene style or specific actions, giving creators more flexibility.

Support for multiple styles: From real people to stylized characters

Beyond real people, OmniHuman-1.5 also handles stylized characters well, such as anime characters and 3D cartoon figures. Motion stays natural and consistent across different art styles, and lip movements and gestures remain closely synchronized with the audio. This makes the model well suited to gaming, virtual reality (VR), and augmented reality (AR), where it can deliver a more immersive experience.

Wide application: Empowering content creation across multiple industries

OmniHuman-1.5 has promising applications across several industries. In film production, it can support character animation and visual effects by quickly generating virtual-actor footage synchronized with audio. For virtual streamers and entertainment, creators can use it to produce lively on-screen characters that make live sessions more interactive. In education and training, it can generate teaching videos with expressive body language, making content more engaging and easier to follow. In advertising and marketing, custom virtual characters can support brand promotion and may help improve conversion rates.

Technical prospects and challenges

Despite these advances, OmniHuman-1.5 still faces several challenges. The link between audio and body movement is partly random, so some generated motions can look unnatural, and interactions with objects are not yet fully realistic. Its high computational requirements may also limit its use on ordinary consumer devices. The ByteDance team says it plans to improve performance and user experience through finer-grained motion control, stronger modeling of physical constraints, and model compression.