On August 28, Tencent Hunyuan announced the open-source release of HunyuanVideo-Foley, an end-to-end model for generating video sound effects. Given a video and a text description, the model produces film-grade audio that accurately matches the visuals. This removes a limitation of AI-generated video, which could be "seen" but not "heard," and aims to make silent AI videos a thing of the past.

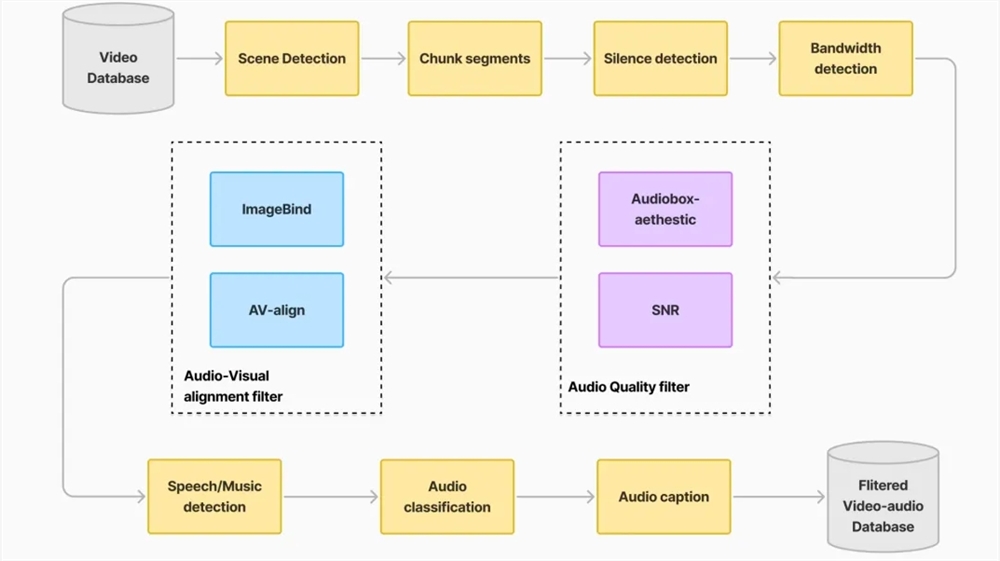

HunyuanVideo-Foley addresses three major pain points of existing audio generation technology. First, it improves generalization by training on a large-scale, high-quality TV2A (text-video-audio) dataset, so the model adapts to many kinds of video, including people, animals, natural landscapes, and cartoons, and generates audio that matches what is on screen. Second, it adopts a dual-stream multimodal diffusion transformer (MMDiT) architecture that balances text and video semantics. This lets it generate richly layered composite sound effects and avoids audio drifting out of sync with the scene because the model relies too heavily on the text prompt. Third, it introduces a representation alignment (REPA) loss function that improves the quality and stability of the generated audio, with the goal of professional-grade fidelity.
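To make the idea of a representation alignment loss more concrete, here is a minimal sketch of how such a term is commonly written: the negative mean cosine similarity between the generator's intermediate features and features from a frozen pretrained encoder. This is an illustration under stated assumptions, not the project's implementation. The function names, the plain-list feature format, and the omission of the learned projection layer are all simplifications introduced here.

```python
import math


def cosine_similarity(u, v, eps=1e-8):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def repa_loss(hidden_states, target_features):
    """Hypothetical REPA-style loss.

    hidden_states:   per-frame features from the diffusion model
                     (assumed already projected to the target dimension).
    target_features: per-frame features from a frozen pretrained encoder.

    Returns the negative mean cosine similarity, so minimizing the loss
    pulls the two sets of representations toward each other.
    """
    if len(hidden_states) != len(target_features):
        raise ValueError("both inputs must have the same number of frames")
    sims = [cosine_similarity(h, t) for h, t in zip(hidden_states, target_features)]
    return -sum(sims) / len(sims)


# Perfectly aligned directions give a loss close to -1.
aligned = repa_loss([[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 3.0]])
# Orthogonal directions give a loss close to 0.
orthogonal = repa_loss([[1.0, 0.0]], [[0.0, 1.0]])
```

In practice a term like this would be added to the main diffusion objective with a weighting coefficient. The technical report is the place to check for the actual formulation.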

On multiple evaluation benchmarks, HunyuanVideo-Foley leads across the board: the audio quality metric PQ rises from 6.17 to 6.59, the visual-semantic alignment metric IB rises from 0.27 to 0.35, and the temporal alignment metric DeSync falls from 0.80 to 0.74 (lower is better), each a new state-of-the-art (SOTA) result. In subjective evaluations, the model's mean opinion scores exceeded 4.1 out of 5 on all three dimensions (audio quality, semantic alignment, and temporal alignment), indicating output close to professional standards.
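For a sense of scale, the reported benchmark changes can be expressed as relative percentages. The short calculation below uses only the figures quoted above. The helper name `relative_change` is introduced here for illustration, and DeSync is treated as a lower-is-better metric, as its reported drop implies.

```python
def relative_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Benchmark figures as quoted in the announcement: (previous best, new value).
reported = {
    "PQ": (6.17, 6.59),      # audio quality, higher is better
    "IB": (0.27, 0.35),      # visual-semantic alignment, higher is better
    "DeSync": (0.80, 0.74),  # temporal misalignment, lower is better
}

changes = {name: relative_change(old, new) for name, (old, new) in reported.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

This works out to roughly +6.8% for PQ, +29.6% for IB, and -7.5% for DeSync, so the largest relative gain is in visual-semantic alignment.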

The open sourcing of HunyuanVideo-Foley provides the industry with a reusable technical paradigm, accelerating the application of multimodal AI in the content creation field. Short video creators can instantly generate contextual sound effects, film teams can quickly complete ambient sound design, and game developers can efficiently build immersive auditory experiences.

Users can now download the model from GitHub and Hugging Face, or try it directly on the Hunyuan official website.

Try it online: https://hunyuan.tencent.com/video/zh?tabIndex=0

Project website: https://szczesnys.github.io/hunyuanvideo-foley/

Code: https://github.com/Tencent-Hunyuan/HunyuanVideo-Foley

Technical report: https://arxiv.org/abs/2508.16930

Hugging Face: https://huggingface.co/tencent/HunyuanVideo-Foley
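As one possible way to fetch the weights from the Hugging Face repository listed above, the sketch below uses `snapshot_download` from the `huggingface_hub` package. It assumes that package is installed and that the repository ID matches the URL above. The wrapper function `download_model` and the default target directory are hypothetical choices made for this example.

```python
REPO_ID = "tencent/HunyuanVideo-Foley"  # taken from the Hugging Face URL above


def download_model(local_dir="./HunyuanVideo-Foley"):
    """Download a snapshot of the model repository and return its local path.

    Assumes `huggingface_hub` is installed (pip install huggingface_hub).
    The import is done lazily so this module loads without the package.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


if __name__ == "__main__":
    path = download_model()
    print(f"Model files downloaded to: {path}")
```

For inference scripts and environment setup, the GitHub repository's README is the authoritative reference.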