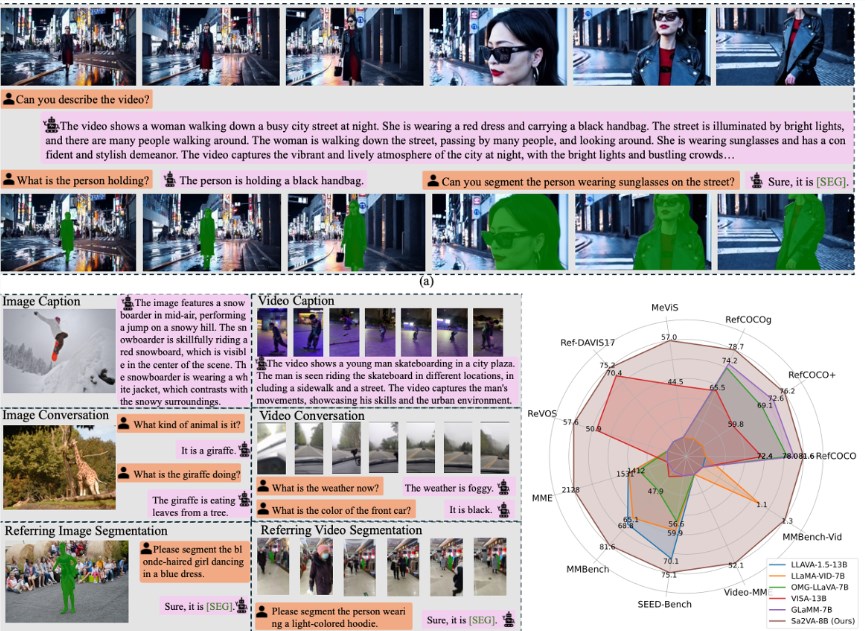

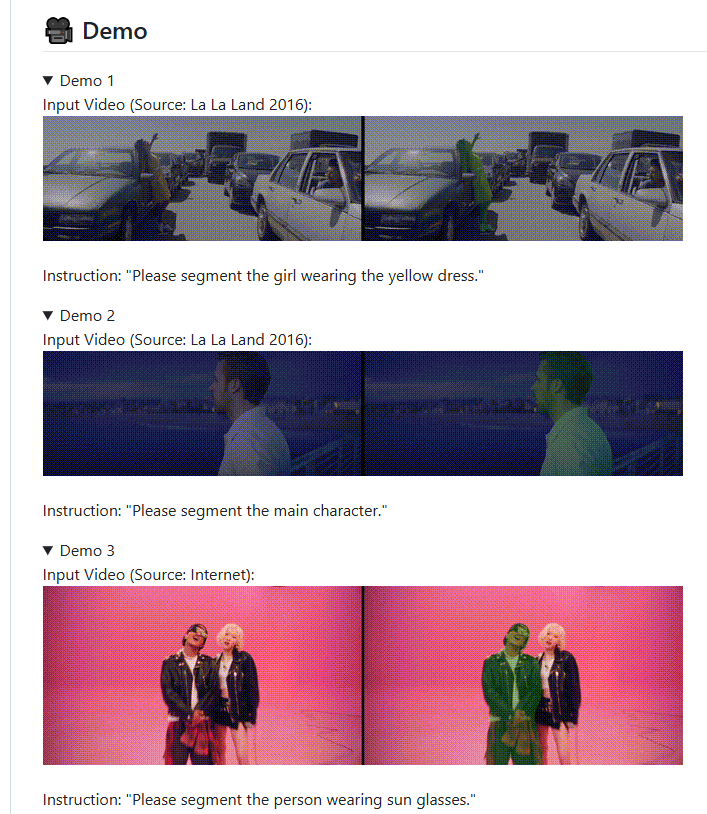

ByteDance, working with research teams from several universities, has combined the open-source vision-language model LLaVA with the segmentation model SAM-2 to create a new model called Sa2VA. The model not only understands video content but can also track and segment characters and objects in a video according to user instructions.

LLaVA, an open-source vision-language model, is good at describing and understanding a video at a high level, but it struggles with instructions that require fine-grained detail. SAM-2 is a strong segmentation model that can identify and segment objects in images and videos, but it has no language comprehension. Sa2VA addresses both gaps by connecting the two models through a simple and efficient "code" system.

The architecture of Sa2VA can be thought of as a dual-core processor: one core handles language understanding and dialogue, while the other handles video segmentation and tracking. When a user enters an instruction, Sa2VA generates special instruction tokens that are passed to SAM-2, which uses them to produce the segmentation. This design lets each module play to its strengths and learn from the other's feedback, which improves overall performance.
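
The flow described above can be sketched roughly as follows. This is a toy illustration only, not the Sa2VA implementation: the token name `[SEG]`, the functions `toy_language_core`, `extract_seg_prompts`, and `toy_segmentation_core`, and the threshold rule are all assumptions made for the example. The two "cores" are simple stand-ins that show how a token emitted by the language side could carry a prompt to the segmentation side.

```python
from dataclasses import dataclass

# Assumed name for the special instruction token; hypothetical for this sketch.
SEG_TOKEN = "[SEG]"


@dataclass
class LanguageOutput:
    tokens: list[str]                 # generated text tokens
    hidden_states: list[list[float]]  # one vector per generated token


def toy_language_core(instruction: str) -> LanguageOutput:
    """Stand-in for the language core: replies in text and ends with one instruction token."""
    tokens = ["Sure", ",", "it", "is", SEG_TOKEN]
    # Fake hidden states: a deterministic 2-d vector per token position.
    hidden = [[float(i), float(len(instruction) % 7)] for i in range(len(tokens))]
    return LanguageOutput(tokens, hidden)


def extract_seg_prompts(out: LanguageOutput) -> list[list[float]]:
    """Collect the hidden state at every position where the instruction token appears."""
    return [h for t, h in zip(out.tokens, out.hidden_states) if t == SEG_TOKEN]


def toy_segmentation_core(frames, prompt):
    """Stand-in for the segmentation core: one binary mask per frame.

    The prompt vector sets a brightness threshold (a made-up rule for the demo).
    """
    threshold = prompt[0] * 10
    return [[[1 if px > threshold else 0 for px in row] for row in frame]
            for frame in frames]


# Two tiny 2x2 grayscale "frames" standing in for a video clip.
frames = [
    [[10, 50], [60, 20]],
    [[45, 5], [0, 90]],
]

out = toy_language_core("segment the bright object")
prompts = extract_seg_prompts(out)
masks = toy_segmentation_core(frames, prompts[0])
print(masks)
```

The point of the sketch is the hand-off: the language side never produces pixels, and the segmentation side never reads text. The only thing that crosses the boundary is the vector attached to the instruction token.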

The research team also designed a multi-task joint training curriculum for Sa2VA to strengthen its image and video understanding. On several public benchmarks, Sa2VA performed strongly, especially on referring video segmentation tasks. It segments accurately in complex real-world scenes and can also track target objects in videos in real time, showing strong handling of dynamic content.

In addition, ByteDance has released multiple versions of Sa2VA along with training tools, encouraging developers to use them for research and applications. The release gives researchers and developers in the AI field a rich set of resources and supports the development of multimodal AI technology.

Project:

https://lxtgh.github.io/project/sa2va/

https://github.com/bytedance/Sa2VA

Key Points:

- 🎥 Sa2VA is a new model from ByteDance that combines the strengths of LLaVA and SAM-2 to both understand and segment video content.

- 🔗 The model connects language understanding with segmentation through a "code" system, which improves its ability to follow user instructions.

- 🌍 Sa2VA's open resources give developers a rich set of tools, supporting research and applications in multimodal AI technology.