Microsoft's security research team has disclosed a serious privacy vulnerability called "Whisper Leak." It is a side-channel attack on modern AI chat services that could let an attacker who can observe a user's network traffic infer what that user is discussing with an AI.

The key point is that the attack does not need to break encryption protocols such as TLS. Instead, it analyzes the metadata of encrypted network traffic, such as packet sizes, transmission timing, and sequence patterns, to infer the topic of a user's conversation with an AI. Because AI services usually stream responses token by token to keep the experience smooth, each response leaves a distinctive "fingerprint" at the network level, which is what makes the attack feasible.

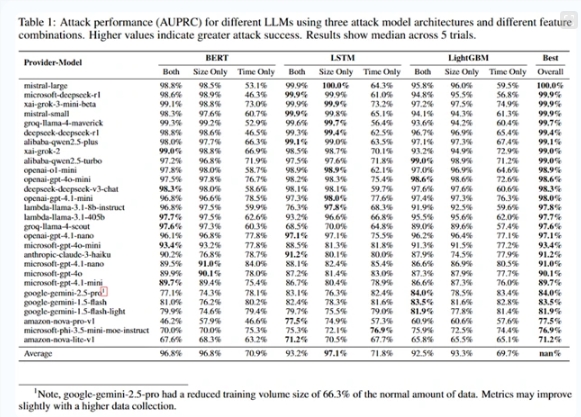
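To make the "fingerprint" idea concrete, here is a minimal sketch of why streamed tokens can leak their lengths even when encrypted. It assumes, purely for illustration, that each token travels in its own encrypted record and that encryption adds a fixed number of bytes. `RECORD_OVERHEAD` and both function names are hypothetical, and real traffic also carries framing that this toy ignores.

```python
# Hypothetical constant: bytes added to every record by encryption (header + auth tag).
# The real value depends on the TLS version and cipher suite; 22 is only a placeholder.
RECORD_OVERHEAD = 22


def observed_sizes(tokens):
    """Sizes an on-path observer would see if each token is sent in its own record."""
    return [len(t.encode("utf-8")) + RECORD_OVERHEAD for t in tokens]


def recovered_token_lengths(sizes):
    """Subtracting the constant overhead recovers each token's byte length."""
    return [s - RECORD_OVERHEAD for s in sizes]


tokens = ["Money", " laundering", " is", " illegal", "."]
sizes = observed_sizes(tokens)
print(sizes)                           # what the observer sees on the wire
print(recovered_token_lengths(sizes))  # token lengths, without decrypting anything
```

The observer never reads the text, but the sequence of lengths (plus the gaps between records) is already a usable signal.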

To demonstrate the attack, the researchers collected a large number of encrypted packet traces from AI responses and trained machine learning models on them. Different conversation topics produced systematic differences in the metadata patterns. For example, responses to questions on sensitive topics such as "money laundering" showed a distinct packet rhythm and size profile compared with ordinary everyday conversations. In controlled experiments, the classifier identified specific sensitive topics with over 98% accuracy, which suggests the technique could be used for high-precision monitoring in the real world.
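The classification step described above can be sketched with a toy nearest-centroid classifier. Everything here is an assumption for illustration: the traces are synthetic, the two features (mean packet size and mean inter-packet gap) are chosen by hand, and the model is far simpler than whatever the researchers actually trained. The accuracy it prints says nothing about the reported 98% figure.

```python
import random
import statistics


def features(trace):
    """trace: list of (size_bytes, gap_seconds) pairs -> (mean size, mean gap)."""
    sizes = [s for s, _ in trace]
    gaps = [g for _, g in trace]
    return (statistics.mean(sizes), statistics.mean(gaps))


def synthetic_trace(rng, mean_size, mean_gap, n=40):
    """Made-up trace generator; real traces would come from packet captures."""
    return [(rng.gauss(mean_size, 4.0), abs(rng.gauss(mean_gap, 0.005)))
            for _ in range(n)]


def centroid(vectors):
    """Component-wise mean of a list of feature vectors."""
    return tuple(statistics.mean(col) for col in zip(*vectors))


def train(labelled):
    """labelled: dict label -> list of traces. Returns dict label -> centroid."""
    return {label: centroid([features(t) for t in traces])
            for label, traces in labelled.items()}


def predict(model, trace, scale=(1.0, 1000.0)):
    """Return the label whose centroid is nearest; `scale` weights gaps (seconds) up."""
    f = features(trace)

    def dist(c):
        return sum(((a - b) * w) ** 2 for a, b, w in zip(f, c, scale))

    return min(model, key=lambda label: dist(model[label]))


rng = random.Random(0)
# Synthetic assumption: the two classes differ in mean packet size and pacing.
params = {"sensitive": (34.0, 0.030), "other": (28.0, 0.020)}
train_set = {k: [synthetic_trace(rng, *p) for _ in range(50)]
             for k, p in params.items()}
model = train(train_set)
test_set = [(k, synthetic_trace(rng, *p)) for k, p in params.items() for _ in range(50)]
accuracy = sum(predict(model, t) == k for k, t in test_set) / len(test_set)
print(f"accuracy on synthetic data: {accuracy:.2f}")
```

The point of the sketch is only that labels can be predicted from sizes and timings alone; the plaintext never enters the pipeline.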

This vulnerability poses a systemic risk to a wide range of AI chat services. Anyone positioned to observe a user's network traffic, such as an internet service provider (ISP) or a malicious actor on public Wi-Fi, could use "Whisper Leak" to identify and flag sensitive conversations. That is a serious threat to journalists, activists, and ordinary users seeking legal or medical advice. Although the conversation content itself stays encrypted, the topic of the conversation may leak, exposing the user to subsequent scrutiny or other risks.

After Microsoft reported the issue through responsible disclosure, several major AI providers moved quickly to respond. Current mitigations mainly include: breaking the link between packet size and content length through random padding or content obfuscation; batching tokens to reduce timing precision; and actively injecting dummy packets to disrupt traffic patterns. These measures improve security, but they also increase latency and bandwidth consumption, forcing service providers to trade off user experience against privacy protection.
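Two of the mitigations above, random padding and token batching, can be sketched as follows. This is an illustrative sketch, not any provider's actual implementation: the function names, the 2-byte length prefix (which limits a chunk to 65,535 bytes), and the default parameters are all assumptions. Dummy-packet injection is not shown.

```python
import secrets


def pad_chunk(payload: bytes, max_pad: int = 32) -> bytes:
    """Prefix the payload with its length, then append 0..max_pad random bytes.

    The padding length is drawn fresh for every chunk, so the size seen on the
    wire is no longer a fixed function of the content length.
    """
    pad_len = secrets.randbelow(max_pad + 1)
    return len(payload).to_bytes(2, "big") + payload + secrets.token_bytes(pad_len)


def unpad_chunk(padded: bytes) -> bytes:
    """Read the 2-byte length prefix and return only the real payload."""
    n = int.from_bytes(padded[:2], "big")
    return padded[2:2 + n]


def batch_tokens(tokens, batch_size=4):
    """Join tokens into groups so several are sent per network write."""
    return ["".join(tokens[i:i + batch_size])
            for i in range(0, len(tokens), batch_size)]


chunk = pad_chunk("hello".encode("utf-8"))
print(len(chunk), unpad_chunk(chunk))
print(batch_tokens(["Money", " laundering", " is", " illegal", "."], batch_size=2))
```

The cost is visible even in this toy: padding sends extra bytes on every chunk, and batching delays the first token of each group until the group is full.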

For ordinary users, when handling highly sensitive information, prioritizing non-streaming response modes and avoiding queries on untrusted networks are currently effective protective measures.

Key Points:

🌐 Researchers disclosed "Whisper Leak," a new privacy vulnerability that infers the topics of AI chat conversations by analyzing network traffic metadata; the encrypted content itself is not read.

🔍 The attack does not need to break encryption protocols and identified sensitive topics with over 98% accuracy in controlled experiments, so an attacker observing the traffic could potentially flag sensitive conversations.

🛡️ Several AI providers have deployed mitigations, but users should still take care with sensitive information on untrusted networks.