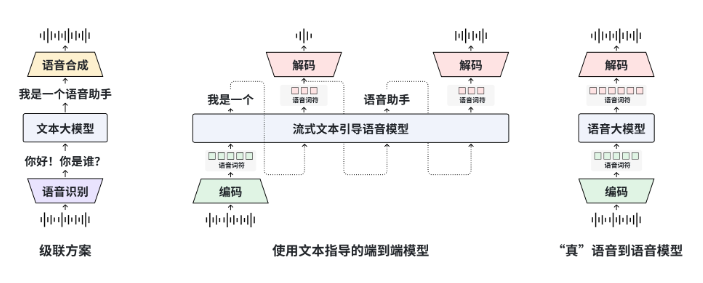

The MOSS team at Fudan University has released MOSS-Speech, billed as the first end-to-end speech-to-speech dialogue system. A demo is available on Hugging Face, and the model weights and code have been open-sourced. MOSS-Speech adopts a "layer splitting" architecture: it freezes the parameters of the original MOSS text large language model and adds three new layers for speech understanding, semantic alignment, and neural vocoding. This lets the model handle spoken question answering, emotion imitation, and laughter generation in a single pass, without the conventional three-stage ASR→LLM→TTS pipeline.
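The "freeze the text model, train only the new layers" idea can be sketched in PyTorch as below. This is a toy illustration under stated assumptions, not the MOSS-Speech implementation: the class name `LayerSplitSpeechModel`, the layer sizes, the use of plain linear layers, and the order in which the new layers wrap the backbone are all hypothetical.

```python
import torch
from torch import nn


class LayerSplitSpeechModel(nn.Module):
    """Toy model: a frozen text backbone plus three new trainable layers."""

    def __init__(self, dim: int = 32, n_units: int = 100):
        super().__init__()
        # Stand-in for the pretrained text model (hypothetical, tiny).
        self.text_backbone = nn.Sequential(
            nn.Linear(dim, dim), nn.GELU(), nn.Linear(dim, dim)
        )
        # Freeze the backbone so training cannot change its weights.
        for p in self.text_backbone.parameters():
            p.requires_grad = False
        # New trainable layers (names mirror the article, shapes are assumed).
        self.speech_understanding = nn.Linear(dim, dim)
        self.semantic_alignment = nn.Linear(dim, dim)
        self.vocoder_head = nn.Linear(dim, n_units)

    def forward(self, speech_features: torch.Tensor) -> torch.Tensor:
        h = self.speech_understanding(speech_features)
        h = self.text_backbone(h)
        h = self.semantic_alignment(h)
        return self.vocoder_head(h)


model = LayerSplitSpeechModel()
frozen = [n for n, p in model.named_parameters() if not p.requires_grad]
trainable = [n for n, p in model.named_parameters() if p.requires_grad]

# Only the new layers are handed to the optimizer.
optimizer = torch.optim.AdamW(
    (p for p in model.parameters() if p.requires_grad), lr=1e-4
)

# Batch of 2 utterances, 10 frames each, 32 features per frame.
out = model(torch.randn(2, 10, 32))
```

Because the backbone's parameters have `requires_grad = False`, gradient updates touch only the added layers, which is how a design like this could preserve the text model's existing abilities while learning speech input and output.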

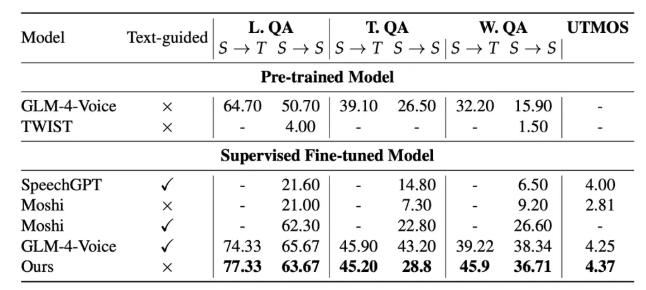

Evaluation results show that MOSS-Speech achieves a word error rate (WER) of 4.1% on the ZeroSpeech 2025 textless speech task and an emotion recognition accuracy of 91.2%, outperforming SpeechGPT and Google's AudioLM on both metrics. In Chinese spoken-language tests, its subjective mean opinion score (MOS) reached 4.6, close to the 4.8 scored by human recordings. The project provides a 48 kHz super-sampling version and a 16 kHz lightweight version; the latter can run real-time inference on a single RTX 4090 with latency under 300 ms and is described as suitable for mobile deployment.
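For readers unfamiliar with the metric, WER is the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the system output, divided by the number of reference words. A minimal sketch follows; the function name `word_error_rate` and the whitespace tokenization are illustrative assumptions, and the benchmark's own scoring script may normalize text differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One dropped word out of six reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
```

Here `wer` is 1/6, or roughly 16.7%; a reported WER of 4.1% corresponds to about four word errors per hundred reference words.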

The team said it will soon open-source MOSS-Speech-Ctrl, a "speech control" version that supports dynamic adjustment of speaking rate, voice, and emotional intensity through voice commands; release is expected in Q1 2026. MOSS-Speech is also available for commercial licensing, and developers can obtain the training and fine-tuning scripts from GitHub to perform private voice cloning and character voice conversion locally.