NVIDIA recently released Nemotron-Nano-9B-v2, a small language model that performs strongly on multiple benchmarks and lets users turn its reasoning on or off. At 9 billion parameters, it is reduced from its predecessor's 12 billion so that it can be deployed on a single NVIDIA A10 GPU.

Oleksii Kuchiaev, NVIDIA's Director of AI Model Post-Training, stated that the model is specifically optimized for the A10 GPU and runs up to 6 times faster than similarly sized Transformer models, making it suitable for a range of application scenarios. Nemotron-Nano-9B-v2 supports English, German, Spanish, French, Italian, and Japanese, with extended support for Korean, Portuguese, Russian, and Chinese, and it is suited to instruction following and code generation tasks.

The model is based on the Nemotron-H series, which combines Mamba and Transformer architectures to reduce memory and compute requirements when processing long sequences. Unlike traditional Transformer models, Nemotron-H uses selective state space models (SSMs), which handle longer sequences efficiently while maintaining accuracy.
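To make the memory argument concrete, here is a rough back-of-the-envelope sketch. It compares how a Transformer key/value cache grows with sequence length against a fixed-size SSM state. The function names and every dimension used below are hypothetical, chosen only for illustration; they are not the actual Nemotron-H configuration.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate attention KV-cache size for one sequence.

    The leading factor of 2 accounts for storing both keys and values.
    The size grows linearly with seq_len.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def ssm_state_bytes(n_layers: int, d_inner: int, d_state: int,
                    bytes_per_value: int = 2) -> int:
    """Approximate recurrent-state size for SSM layers.

    The state has a fixed shape per layer, so it does not depend on
    how many tokens have been processed.
    """
    return n_layers * d_inner * d_state * bytes_per_value


if __name__ == "__main__":
    # Hypothetical dimensions, NOT taken from the model card.
    for seq_len in (1_024, 16_384, 131_072):
        kv = kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128)
        ssm = ssm_state_bytes(n_layers=32, d_inner=4096, d_state=128)
        print(f"seq_len={seq_len:>7}  kv_cache={kv / 2**20:8.1f} MiB  "
              f"ssm_state={ssm / 2**20:8.1f} MiB")
```

Under these assumed dimensions, the attention cache scales with the number of tokens while the SSM state stays constant. That is the general reason a hybrid design can fit larger batches or longer contexts into the same GPU memory.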

Nemotron-Nano-9B-v2 generates a trace of its reasoning process by default. Users can toggle this behavior with simple control instructions such as /think or /no_think. The model also introduces a runtime "thinking budget" that lets developers cap the number of tokens spent on reasoning, trading off accuracy against response speed.
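As a minimal sketch of how these controls might be used from client code, the helpers below build a chat-style message list carrying the control instruction and cap a reasoning trace at a token budget. Placing the instruction in the system message is an assumption here, and `build_messages` and `apply_thinking_budget` are hypothetical helper names, not part of any NVIDIA API. The budget helper only illustrates the idea of a cap; it is not how the model enforces the budget internally.

```python
from typing import Dict, List


def build_messages(user_prompt: str, reasoning: bool = True) -> List[Dict[str, str]]:
    """Build a chat message list with a reasoning control instruction.

    Assumption: the control instruction is passed as the system message.
    """
    control = "/think" if reasoning else "/no_think"
    return [
        {"role": "system", "content": control},
        {"role": "user", "content": user_prompt},
    ]


def apply_thinking_budget(reasoning_tokens: List[str], budget: int) -> List[str]:
    """Keep at most `budget` reasoning tokens (hypothetical client-side cap)."""
    if budget < 0:
        raise ValueError("budget must be non-negative")
    return reasoning_tokens[:budget]


if __name__ == "__main__":
    # Reasoning disabled: the system message carries /no_think.
    print(build_messages("Summarize this report.", reasoning=False))
    # A tiny stand-in for a reasoning trace, capped at three tokens.
    print(apply_thinking_budget(["First", "compare", "the", "options"], budget=3))
```

A smaller budget favors response speed and a larger one favors accuracy, which is the trade-off the thinking budget is meant to expose.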

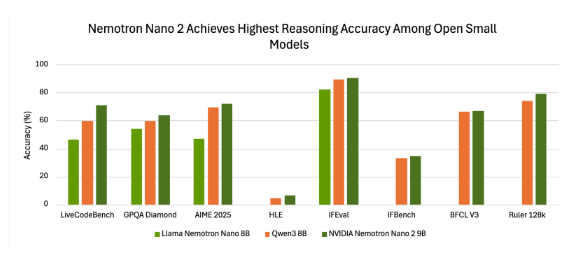

In benchmark tests, Nemotron-Nano-9B-v2 showed competitive accuracy. Evaluated with the NeMo-Skills suite in "reasoning enabled" mode, it performed well on multiple tests and compared favorably with other small open-source models.

NVIDIA released Nemotron-Nano-9B-v2 under an open model license that permits commercial use and lets developers freely create and distribute derivative models. Notably, NVIDIA does not claim ownership of the outputs generated by the model, leaving users in full control of how they use them.

The release aims to give developers a tool for balancing reasoning capability and deployment efficiency in small-scale environments, and it reflects NVIDIA's ongoing effort to make language models more efficient and their reasoning more controllable.

Hugging Face: https://huggingface.co/nvidia/NVIDIA-Nemotron-Nano-9B-v2

Key Points:

🌟 NVIDIA has launched a new small language model, Nemotron-Nano-9B-v2, which lets users turn its reasoning on or off.

⚙️ The model is built on a hybrid Mamba-Transformer architecture that processes long sequences efficiently, and it supports multilingual tasks.

📊 Nemotron-Nano-9B-v2 is released under an open model license, allowing developers to use it for commercial purposes and create and distribute derivative models.