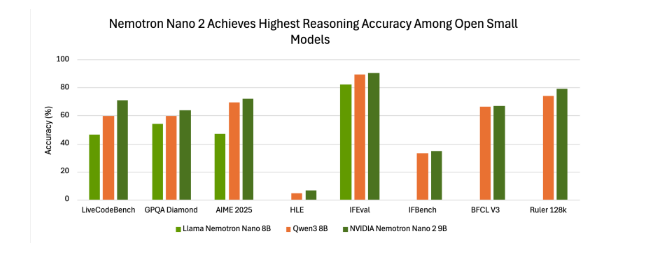

Small models are drawing a lot of attention, and NVIDIA is keeping pace. After MIT and Google released small AI models that can run on smartwatches and smartphones, NVIDIA has launched its latest small language model (SLM), Nemotron-Nano-9B-V2. The model performs well across multiple benchmarks and, on some of them, achieves the highest score among models in its class.

Designed for Efficiency and Reasoning

Nemotron-Nano-9B-V2 has 9 billion parameters. That is larger than micro models with only millions of parameters, but significantly smaller than the previous 12-billion-parameter version, and the model is specifically optimized to run on a single NVIDIA A10 GPU. Oleksii Kuchiaev, Director of AI Model Post-Training at NVIDIA, explained that the size reduction was made to fit the A10, a popular GPU for deployment. Nemotron-Nano-9B-V2 is also a hybrid model, which lets it process larger batches and run up to six times faster than Transformer models of the same scale.
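
As a rough illustration of why parameter count matters for a single-GPU target, the sketch below estimates the memory taken by the weights alone. It assumes 16-bit weights (2 bytes per parameter) and 24 GB of memory on the A10; neither figure comes from NVIDIA's announcement, and activations and caches are ignored.

```python
A10_MEMORY_GB = 24  # assumption: A10 memory capacity, not stated in the article


def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone, in decimal GB (16-bit weights by default)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("9B", 9e9), ("12B", 12e9)]:
    used = weight_memory_gb(params)
    free = A10_MEMORY_GB - used
    print(f"{name}: {used:.0f} GB of weights, {free:.0f} GB left for everything else")
```

Under these assumptions the 9B weights take about 18 GB and the 12B weights about 24 GB, which would leave the larger model no headroom on a 24 GB card.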

The model supports up to nine languages, including Chinese, English, German, French, Japanese, and Korean, and excels at instruction following and code generation. Both the model and its pre-training dataset are available on Hugging Face and in NVIDIA's model catalog.

Combining Transformer and Mamba Architectures

Nemotron-Nano-9B-V2 is based on the Nemotron-H series, which combines the Mamba and Transformer architectures. Traditional Transformer models are powerful, but their attention layers consume a lot of memory and compute on long sequences because the cost grows quadratically with sequence length. The Mamba architecture introduces selective state space models (SSMs), which process long sequences with linear complexity and therefore cost less in memory and compute. By replacing most attention layers with linear state space layers, the Nemotron-H series achieves two to three times higher throughput on long contexts while maintaining high accuracy.
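
To make the linear-versus-quadratic contrast concrete, here is a minimal sketch; it is not NVIDIA's implementation. `ssm_scan` is a scalar state space recurrence with fixed, illustrative coefficients `a`, `b`, and `c` (Mamba's selective SSMs make these depend on the input), and `causal_attention_pairs` counts the query-key pairs that causal self-attention has to score.

```python
def ssm_scan(xs, a=0.9, b=1.0, c=1.0):
    """Scalar linear state space recurrence: one constant-cost update per token.

    h_t = a * h_{t-1} + b * x_t,  y_t = c * h_t
    The coefficients are fixed here for simplicity; a selective SSM would
    compute them from each input.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x  # the state carries all past context in fixed size
        ys.append(c * h)
    return ys


def causal_attention_pairs(n):
    """Number of (query, key) pairs scored by causal attention over n tokens."""
    return n * (n + 1) // 2


for n in (1_000, 10_000, 100_000):
    print(f"{n} tokens: {n} SSM updates vs {causal_attention_pairs(n)} attention pairs")
```

A tenfold longer sequence means ten times as many recurrence updates but roughly a hundred times as many attention pairs, which is the gap that hybrid designs try to exploit.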

Unique Reasoning Control Features

A major innovation of this model is its built-in "reasoning" feature, which lets the model check its own work before it gives the final answer. Users can enable or disable this feature with simple control tokens such as /think or /no_think. The model also supports runtime "thinking budget" management, which lets developers cap the number of tokens spent on internal reasoning and so trade accuracy against latency. This is particularly important for applications such as customer support or autonomous agents that need fast response times.
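
The two controls can be sketched as follows. This is a hypothetical illustration rather than NVIDIA's API: placing the control token in the system message, the `</think>` end marker, and the helper names `build_messages` and `cap_reasoning` are all assumptions made for the example.

```python
def build_messages(user_prompt, reasoning=True):
    """Build a chat-style message list with a reasoning control token.

    Assumption: the control token is sent as the system message.
    """
    control = "/think" if reasoning else "/no_think"
    return [
        {"role": "system", "content": control},
        {"role": "user", "content": user_prompt},
    ]


def cap_reasoning(reasoning_tokens, budget, end_marker="</think>"):
    """Limit a reasoning trace to `budget` tokens and make sure it is closed.

    Assumption: the trace ends with `end_marker`. If the model finished
    within the budget, the trace is cut at its own end marker; otherwise it
    is truncated and the marker is appended so the answer can follow.
    """
    capped = list(reasoning_tokens[:budget])
    if end_marker in capped:
        return capped[: capped.index(end_marker) + 1]
    return capped + [end_marker]


messages = build_messages("Summarize this ticket.", reasoning=False)
trace = cap_reasoning(["step1", "step2", "step3", "step4"], budget=2)
print(messages[0]["content"], trace)
```

A latency-sensitive service might disable reasoning or use a small budget for routine requests and raise the budget only for harder ones.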

Permissive Open Licensing, Targeted at Enterprise Applications

NVIDIA released Nemotron-Nano-9B-V2 under its Open Model License Agreement, which is enterprise-friendly and highly permissive. NVIDIA states explicitly that enterprises can use the model commercially without paying any fees or royalties.