Google DeepMind has conducted its first study of the most common malicious uses of AI, finding that AI-generated "deepfakes" impersonating politicians and celebrities are more prevalent than attempts to use AI for cyberattacks. The research, a collaboration between DeepMind, Google's AI division, and Jigsaw, a Google research unit, aims to quantify the risks of generative AI tools, which the world's largest tech companies have rushed to market in pursuit of substantial profits.

Motivations of Malicious Actors Involving Technology

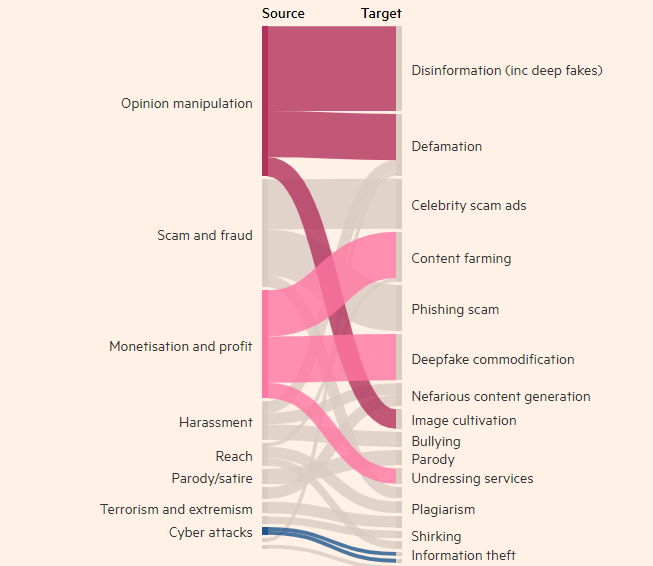

The study found that creating realistic but fake images, videos, and audio of real people is the most frequent abuse of generative AI tools, nearly twice as common as the next most frequent method: using text-based tools such as chatbots to fabricate information. The most common goal of abusing generative AI is to influence public opinion, which accounts for 27% of the cases studied and raises concerns about how deepfakes could affect the many elections being held around the world this year.

In recent months, deepfakes of UK Prime Minister Rishi Sunak and other world leaders have appeared on TikTok, X, and Instagram. UK voters will cast their ballots in the general election next week. Despite social media platforms' efforts to label or remove such content, people may not recognize it as fake, and its spread could sway voters' decisions. DeepMind researchers analyzed around 200 cases of abuse drawn from the social media platforms X and Reddit, as well as from online blogs and media reports of misuse.

The study found that the second largest motivation for abusing generative AI products (such as OpenAI's ChatGPT and Google's Gemini) is profit, whether by selling services that create deepfakes or by using generative AI to produce large volumes of content, such as fake news articles. Most abuses rely on readily available tools that "require minimal technical expertise," which means a wider range of malicious actors can exploit generative AI.

DeepMind's research will inform how it improves the evaluations it uses to test its models for safety, and the company hopes the findings will also shape how competitors and other stakeholders think about how "harms manifest."

Key Points:

- 👉 DeepMind's research identifies deepfakes as the leading form of AI misuse.

- 👉 The most common purpose of abusing generative AI tools is to influence public opinion, accounting for 27% of the cases studied.

- 👉 The second largest motivation for abusing generative AI is profit, primarily through selling deepfake services and producing fake news.