On June 6th, ByteDance's Seed team officially released SeedEdit 3.0, its new image editing model. This version makes significant progress in preserving the subject of an image, handling background details, and following instructions, which greatly improves the usability and efficiency of image editing.

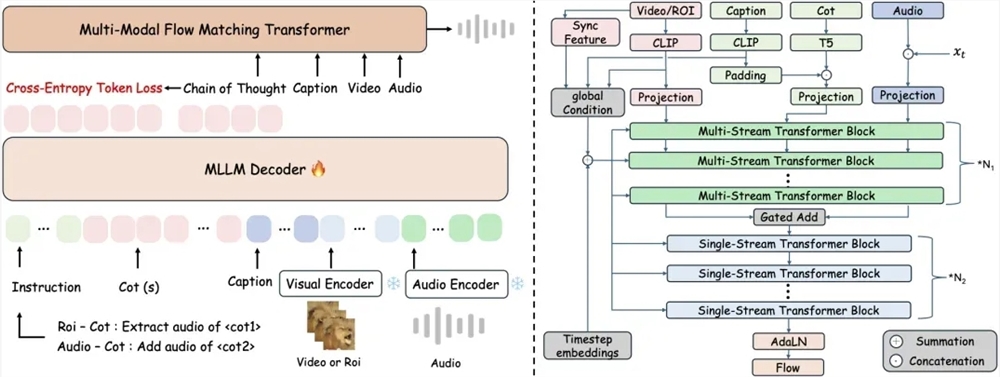

SeedEdit 3.0 is built on Seedream 3.0, ByteDance's text-to-image model. By introducing diverse data fusion methods and dedicated reward models, it addresses weaknesses of earlier image editing models in preserving the subject and background and in following instructions. The model can take in and generate images at 4K resolution. It performs well both at fine-grained processing of the edited regions and at high-fidelity preservation of the unedited regions. SeedEdit 3.0 is particularly strong in complex scenarios such as portrait editing, background replacement, and changes of perspective and lighting.

For example, when asked to remove unwanted pedestrians from an image, SeedEdit 3.0 accurately identifies and removes the irrelevant people and also removes their shadows, showing how carefully it handles detail. When converting 2D illustrations into images of real-life models, it retains details such as clothing, accessories, and handbags, producing images with a street-style fashion feel. SeedEdit 3.0 can also handle complex lighting changes, preserving details from nearby houses to ripples on distant water while adjusting pixel-level rendering to match the new lighting.

To achieve these capabilities, the Seed team developed an efficient data fusion strategy and built several specialized reward models. By training these reward models jointly with the diffusion model, the team specifically improved editing quality on key tasks such as face alignment and text rendering. SeedEdit 3.0 has also been optimized for fast inference, allowing it to complete an edit within 10 seconds.

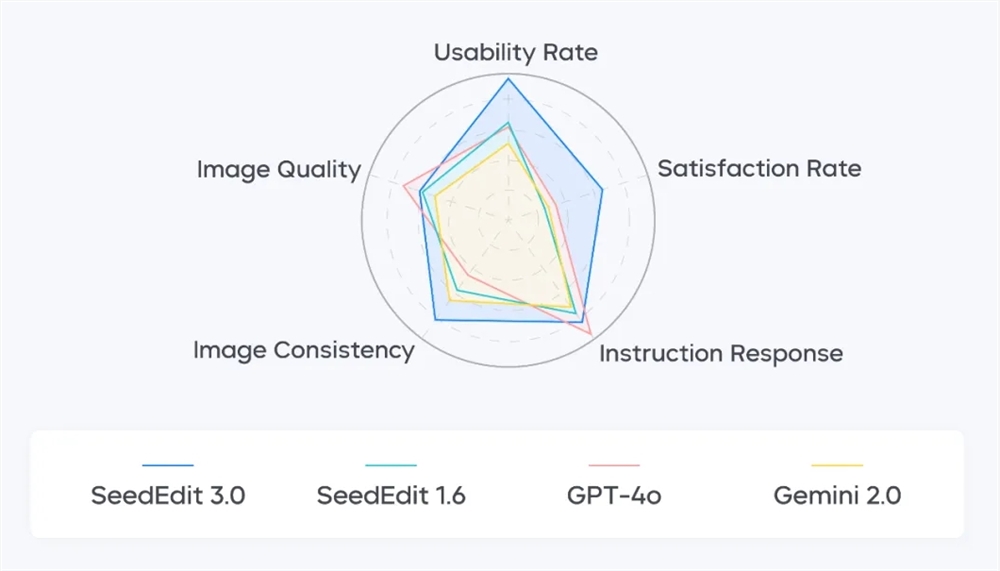

To evaluate SeedEdit 3.0, the team collected hundreds of real and synthetic test images and defined 23 categories of editing subtasks. These cover common operations such as stylization, addition, replacement, and deletion, as well as instruction-driven edits such as camera movement, object displacement, and scene changes. Automated evaluation shows that SeedEdit 3.0 outperforms previous versions and comparable models in both edit preservation and instruction following. Human evaluation likewise shows that image preservation is its most outstanding strength, with a usability rate of 56.1%, a significant improvement over previous versions.

The release of SeedEdit 3.0 marks another important advance in AI image editing. The model brings several technical innovations and also proves practical and efficient in real-world use. The SeedEdit 3.0 technical report is now public, and the model has begun testing on the Imdream web platform, with a launch in the Doubao app coming soon. Users can try it by uploading a reference image and entering a modification prompt.

Project homepage:

https://seed.bytedance.com/seededit

Technical report:

https://arxiv.org/pdf/2506.05083

How to try it:

Imdream web platform - Image generation - Upload a reference image - Select the 3.0 model - Enter a modification prompt (limited rollout);

Doubao app - AI image generation - Add a reference image - Enter a modification prompt (coming soon).