Large language models (LLMs) have made significant progress on complex reasoning tasks by combining chain-of-thought prompting with large-scale reinforcement learning (RL). DeepSeek-R1-Zero, for example, applies RL directly to a base model and shows strong reasoning capabilities. However, this success is difficult to replicate across different base model families, especially the Llama series. This raises a core question: what causes different base models to behave so inconsistently under reinforcement learning?

Limitations of Scaling Reinforcement Learning on Llama Models

Models such as OpenAI's o1 and o3 and DeepSeek's R1 have achieved breakthroughs on competition-level math problems through large-scale reinforcement learning, which has driven interest in applying RL to smaller models with fewer than 100 billion parameters. However, these advances are mostly limited to the Qwen model series and are difficult to reproduce on models like Llama. Because pre-training pipelines are rarely transparent, it is hard to understand how pre-training affects the scalability of reinforcement learning. Some unconventional studies found that one-shot prompting can improve Qwen's reasoning ability but has little effect on Llama. Although projects such as OpenWebMath and MathPile aim to compile high-quality mathematical pre-training corpora, their scale remains limited to less than 100 billion tokens.

Exploring Mid-Training with a Stable-then-Decay Strategy

Researchers from Shanghai Jiao Tong University conducted an in-depth study on the impact of mid-training strategies on the dynamics of reinforcement learning, using Qwen and Llama as research subjects, and drew the following insights:

First, high-quality math corpora such as MegaMath-Web-Pro improve both base model performance and downstream reinforcement learning results. Second, adding question-and-answer data, especially data containing long Chain-of-Thought (CoT) reasoning, further improves the effectiveness of reinforcement learning. Third, long CoT data introduces verbosity and instability during reinforcement learning training. Finally, scaling up mid-training improves downstream reinforcement learning performance.

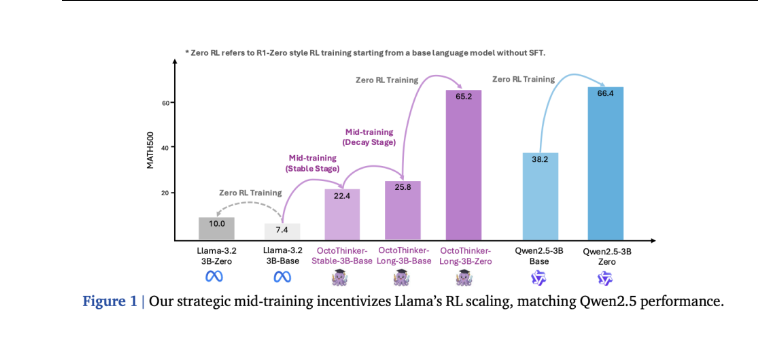

The researchers proposed a two-phase mid-training strategy called "Stable-then-Decay": first, the base model is trained on 200 billion tokens; then three CoT-focused branches are trained on 20 billion tokens. This strategy produced the OctoThinker models, which show strong compatibility with reinforcement learning.
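The token budget of the two phases can be written down as a small configuration sketch. This is only an illustration: the class and function names are hypothetical, and it assumes that the 20 billion tokens apply to each branch separately, which the text does not state explicitly. The branch names follow the long, short, and mixed variants mentioned later in the article.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Phase:
    """One mid-training phase and its token budget (hypothetical structure)."""
    name: str
    tokens: int

# Phase 1: a single shared run on 200 billion tokens (from the text).
STABLE = Phase("stable", 200_000_000_000)

# Phase 2: three CoT-focused branches.
# ASSUMPTION: 20 billion tokens are spent per branch, not split across them.
BRANCHES = [
    Phase(f"decay-{kind}", 20_000_000_000)
    for kind in ("long", "short", "mixed")
]

def tokens_seen_by_branch(branch: Phase) -> int:
    """Tokens a single final model has seen: shared phase plus its own branch."""
    return STABLE.tokens + branch.tokens

def total_training_tokens() -> int:
    """Tokens processed overall: the shared phase is trained only once."""
    return STABLE.tokens + sum(b.tokens for b in BRANCHES)

if __name__ == "__main__":
    for b in BRANCHES:
        print(b.name, tokens_seen_by_branch(b))
    print("total", total_training_tokens())
```

Under this assumption each final model has seen 220 billion mid-training tokens, while the overall budget is 260 billion because the first phase is shared by all three branches.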

RL Configuration and Benchmark Evaluation

The researchers used the MATH8K dataset as the source of reinforcement learning (RL) training prompts, with a global training batch size of 128, 16 rollout responses per query, and a PPO mini-batch size of 64. Experiments were conducted on the Llama-3.2-3B-Base and Qwen2.5-3B-Base models. For evaluation, the base language models were tested with few-shot prompts, while the RL-tuned models were tested with zero-shot prompts on benchmarks such as GSM8K, MATH500, OlympiadBench, and AMC23.
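To make the scale of this configuration concrete, the following minimal sketch computes how many responses are generated per training step. The `RLConfig` class and its field names are hypothetical and not tied to any particular RL framework. The number of policy updates per step depends on whether the mini-batch size is counted in prompts or in responses; the sketch assumes prompts, which is an assumption rather than something the article states.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RLConfig:
    """Hypothetical container for the RL settings quoted in the text."""
    train_batch_size: int = 128     # prompts per training step
    rollouts_per_prompt: int = 16   # sampled responses per prompt
    ppo_mini_batch_size: int = 64   # PPO mini-batch size

    def responses_per_step(self) -> int:
        """Total sampled responses the policy is scored on in one step."""
        return self.train_batch_size * self.rollouts_per_prompt

    def updates_per_step(self) -> int:
        """Gradient updates per step.

        ASSUMPTION: the mini-batch size is measured in prompts, so each
        update consumes 64 prompts and all of their rollouts.
        """
        return self.train_batch_size // self.ppo_mini_batch_size

if __name__ == "__main__":
    cfg = RLConfig()
    print("responses per step:", cfg.responses_per_step())
    print("updates per step:", cfg.updates_per_step())
```

With the quoted settings, every step samples 128 × 16 = 2,048 responses, which is why runaway response lengths quickly become expensive.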

During reinforcement learning training, the response length of the Qwen model grew steadily while staying within a reasonable range, whereas the Llama model behaved abnormally, with its average response length soaring to 4,096 tokens. Evaluation results further show that the RL-tuned Qwen2.5-3B model improved across all benchmarks, whereas the Llama-3.2-3B model improved only minimally.
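This kind of length blow-up can be monitored with a simple per-step statistic. The sketch below is illustrative only: the function name is hypothetical, and it assumes that 4,096 tokens is the generation cap, so responses at that length were cut off rather than finished naturally. The article reports the number but does not say whether it is a cap.

```python
from typing import Sequence, Tuple

def length_stats(lengths: Sequence[int], max_len: int = 4096) -> Tuple[float, float]:
    """Return (mean response length, fraction of responses at the cap).

    ASSUMPTION: a response whose length is >= max_len was truncated.
    """
    if not lengths:
        raise ValueError("lengths must not be empty")
    mean_len = sum(lengths) / len(lengths)
    capped = sum(1 for n in lengths if n >= max_len) / len(lengths)
    return mean_len, capped

if __name__ == "__main__":
    # Toy rollout lengths for one training step (made-up numbers).
    healthy = [512, 640, 700, 580]
    runaway = [4096, 4096, 2048, 4096]
    print(length_stats(healthy))
    print(length_stats(runaway))
```

A mean length that approaches the cap, together with a high capped fraction, suggests the policy is producing degenerate, overly long outputs instead of longer reasoning.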

OctoThinker Outperforms Llama in RL Compatibility

Across 13 mathematical benchmarks, each OctoThinker branch outperformed the original Llama base model by 10%-20% and improved consistently over the stable-phase models. The OctoThinker-Zero series showed diverse thinking behaviors as reinforcement learning was scaled up, with the OctoThinker-Long variant performing especially well. In a comparison of three 3B-scale base models during reinforcement learning training, OctoThinker-Long-3B outperformed the original Llama-3.2-3B model and came close to Qwen2.5-3B, a model known for its strong reasoning capabilities and extensive pre-training. The mixed and short branches performed slightly worse, especially on the more challenging benchmarks.

Conclusion and Future Work: Toward RL-Ready Base Models

This study examined why base models such as Llama and Qwen behave differently when trained with reinforcement learning for reasoning, and it highlighted the importance of mid-training for the scalability of reinforcement learning. The two-phase mid-training strategy turned Llama into a base model better suited to reinforcement learning, resulting in the OctoThinker models.