On July 4, 2025, Kunlun Wanwei open-sourced Skywork-Reward-V2, its second-generation reward model series. The series comprises 8 reward models built on different foundation models, with parameter counts ranging from 600 million to 8 billion. At release it ranked first across seven major reward model evaluation benchmarks, making it a focal point in the open-source reward model field.

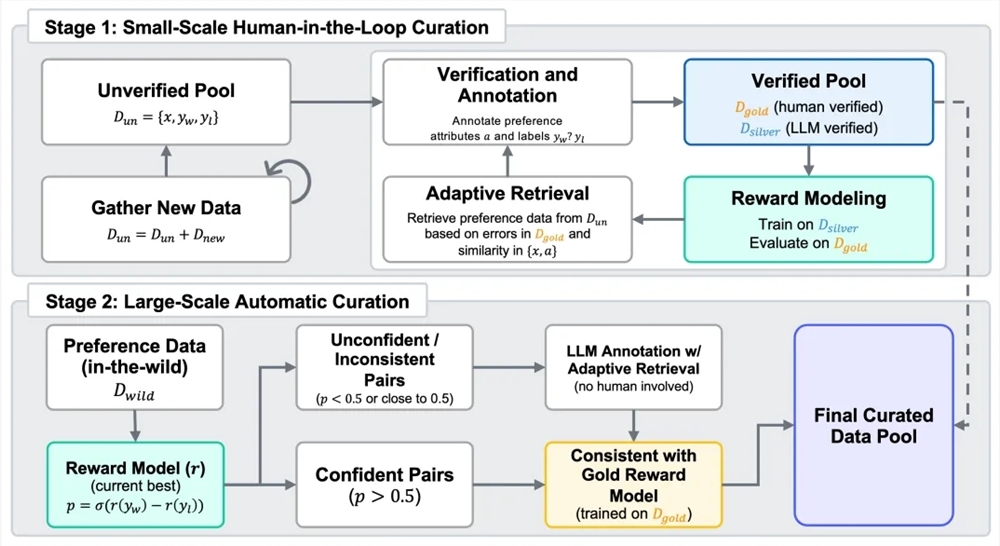

Reinforcement learning from human feedback (RLHF) relies heavily on reward models. To develop the next generation of reward models, Kunlun Wanwei built a hybrid dataset called Skywork-SynPref-40M, containing 40 million preference pairs. For data processing, the team adopted a two-stage human-machine collaborative pipeline that combines high-quality manual annotation with the large-scale processing capacity of models.

In the first stage, the team built an initial, unverified preference pool and used large language models to generate auxiliary attributes for it. Human annotators then carefully reviewed part of the data, following strict protocols and aided by external tools and large language models, to produce a small, high-quality "gold standard" dataset. Guided by the preferences in the gold standard data, the team then used large language models to generate large-scale, high-quality "silver standard" data, and repeated this process over multiple iterations.

In the second stage, the focus shifted to automated, large-scale data expansion: the trained reward model was used to perform consistency filtering, which reduced the manual annotation burden while balancing the scale and quality of the preference data.
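The consistency filtering step in the second stage can be illustrated with a minimal sketch. The source does not describe the exact filtering rule, so the version below assumes the simplest one: keep a pair only when the reward model scores the labeled "chosen" response above the "rejected" one. The names `PreferencePair`, `consistency_filter`, `margin`, and `toy_score` are hypothetical and are not part of the Skywork codebase; `toy_score` is a stand-in for a trained reward model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PreferencePair:
    """One preference comparison: a prompt with a preferred and a dispreferred response."""
    prompt: str
    chosen: str
    rejected: str


# A scorer maps (prompt, response) to a scalar reward.
Scorer = Callable[[str, str], float]


def consistency_filter(
    pairs: List[PreferencePair], score: Scorer, margin: float = 0.0
) -> List[PreferencePair]:
    """Keep pairs whose label agrees with the scorer by more than `margin` (assumed rule)."""
    kept = []
    for pair in pairs:
        gap = score(pair.prompt, pair.chosen) - score(pair.prompt, pair.rejected)
        if gap > margin:
            kept.append(pair)
    return kept


def toy_score(prompt: str, response: str) -> float:
    """Placeholder scorer (response length); a real pipeline would call a reward model."""
    return float(len(response))


pairs = [
    PreferencePair("What is 2 + 2?", "2 + 2 equals 4.", "5"),
    PreferencePair("Name a prime.", "7", "Nine is a prime number."),
]
filtered = consistency_filter(pairs, toy_score)
print(len(filtered))
```

With the placeholder scorer only the first pair survives, because the second pair's label disagrees with the scorer. In practice the scorer would be a reward model trained on the verified data, and the threshold would be tuned rather than fixed at zero.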

Trained on this high-quality hybrid preference data, the Skywork-Reward-V2 series shows broad applicability and strong capabilities across multiple dimensions: general alignment with human preferences, objective correctness, safety, resistance to style bias, and best-of-N scaling. It achieves state-of-the-art (SOTA) results on seven mainstream reward model evaluation benchmarks: RewardBench v1 and v2, PPE Preference and PPE Correctness, RMB, RM-Bench, and JudgeBench. Even the smallest model, Skywork-Reward-V2-Qwen3-0.6B, nearly matches the average performance of the previous generation's strongest model. Skywork-Reward-V2-Qwen3-1.7B already surpasses the existing open-source SOTA reward models. The largest model, Skywork-Reward-V2-Llama-3.1-8B, leads on all of the mainstream benchmarks, making it the best-performing open-source reward model currently available.

The series also covers a wide range of human preference dimensions. On general preference benchmarks, it outperforms several models with more parameters as well as the latest generative reward models. On objective correctness, it performs especially well on knowledge-intensive tasks. It also achieves leading results in a range of advanced capability evaluations, including Best-of-N tasks, bias resistance testing, complex instruction understanding, and truthfulness judgment, which indicates strong generalization and practical value.
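For readers unfamiliar with Best-of-N evaluation, the idea is to sample N candidate responses for a prompt and let the reward model pick the highest-scoring one. The sketch below is illustrative only: `best_of_n` and `toy_score` are hypothetical names, and `toy_score` is a placeholder standing in for a real reward model.

```python
from typing import Callable, Sequence

# A scorer maps (prompt, response) to a scalar reward.
Scorer = Callable[[str, str], float]


def best_of_n(prompt: str, candidates: Sequence[str], score: Scorer) -> str:
    """Return the candidate with the highest reward; ties go to the earliest candidate."""
    if not candidates:
        raise ValueError("best_of_n needs at least one candidate")
    return max(candidates, key=lambda response: score(prompt, response))


def toy_score(prompt: str, response: str) -> float:
    """Placeholder scorer: counts prompt words that reappear in the response."""
    prompt_words = set(prompt.lower().split())
    return float(sum(word in prompt_words for word in response.lower().split()))


candidates = [
    "I do not know.",
    "The capital of France is Paris.",
    "Paris.",
]
best = best_of_n("What is the capital of France?", candidates, toy_score)
print(best)
```

A stronger reward model makes this selection more reliable as N grows, which is why Best-of-N performance is used as a measure of reward model quality.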

In addition, the data curation process scales well, and this scalability significantly improves reward model performance. Carefully filtered preference data continues to raise overall model performance over multiple rounds of iterative training, with the gains especially pronounced in the second stage of fully automated data expansion. In experiments with an early version, an 8B-scale model trained on only 1.8% of the high-quality data exceeded the performance of the current 70B-level SOTA reward model, demonstrating the advantages of the Skywork-SynPref dataset in both scale and quality.

Hugging Face collection:

https://huggingface.co/collections/Skywork/skywork-reward-v2-685cc86ce5d9c9e4be500c84

GitHub repository:

https://github.com/SkyworkAI/Skywork-Reward-V2